Marie Haynes. March 27, 2024.

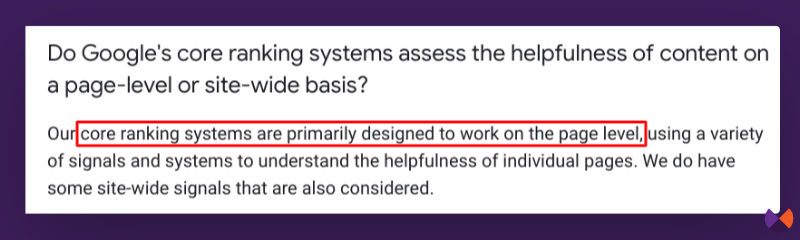

The helpful content system has changed. As of March 2024, it is no longer its own separate system. Instead, helpfulness is determined primarily at the page level by Google's core systems. I spent some time reviewing Google's previous wording to help us understand the significance of what has changed.

There is no longer a single helpful content system

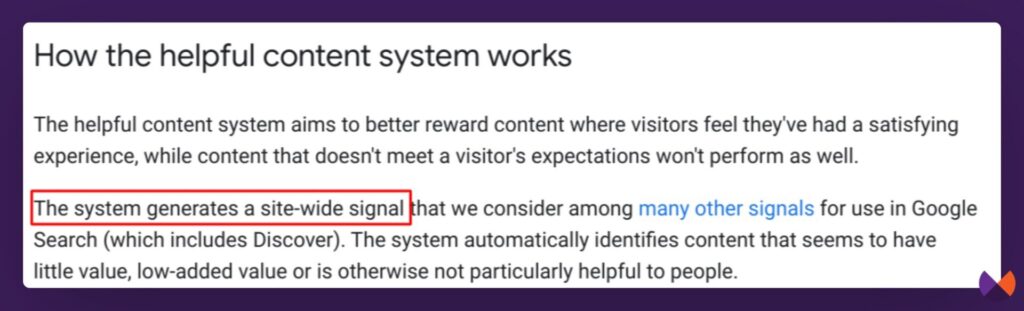

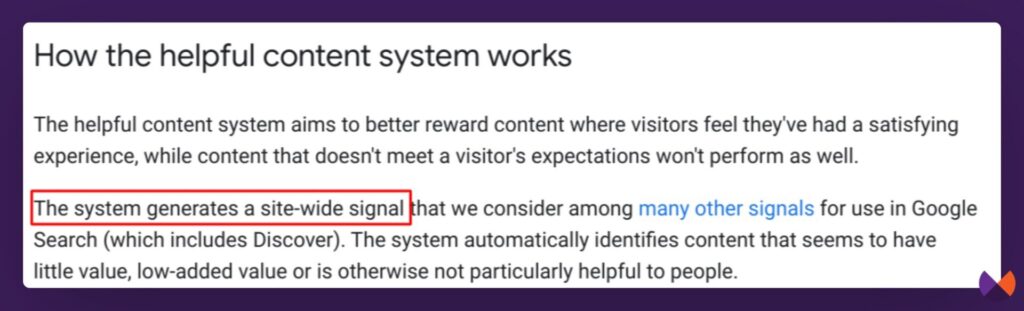

From August 2022 until March of 2024, the helpful content system was its own separate system that worked in conjunction with Google’s algorithms.

It produced a site-wide signal that would automatically identify content that seemed to have little value, low added value, or otherwise wasn’t particularly helpful to people.

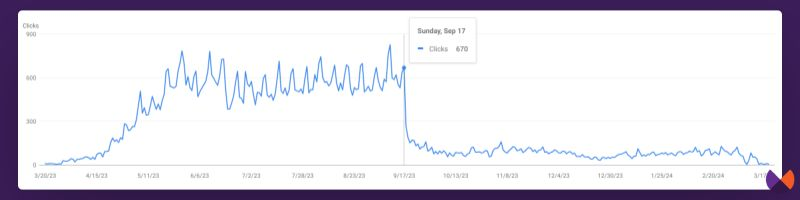

If you are a victim of the September 2023 helpful content update, it is quite easy to see the effects of the previously used site-wide signal. If you have a sharp, obvious decline in organic traffic that starts between September 14 and 18, it is very likely the effect of this signal.
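If you would rather check this against your own numbers than eyeball a chart, the comparison can be sketched in a few lines of Python. This is only a rough illustration: the function names, the 28-day comparison span, and the 30% threshold are my own assumptions, not anything Google has published, and the input is assumed to be a daily export of organic sessions from your analytics tool.

```python
from datetime import date, timedelta
from statistics import mean

# The September 14-18, 2023 window comes from the text above. The 28-day
# comparison span and the -30% threshold are illustrative assumptions only.
WINDOW_START = date(2023, 9, 14)
WINDOW_END = date(2023, 9, 18)


def relative_change(daily_sessions, span_days=28):
    """Return (after - before) / before for mean daily organic sessions.

    daily_sessions maps a datetime.date to that day's organic session count.
    "before" covers the span_days ending the day before WINDOW_START.
    "after" covers the span_days starting the day after WINDOW_END.
    Days missing from daily_sessions are skipped.
    """
    before_days = [WINDOW_START - timedelta(days=i) for i in range(1, span_days + 1)]
    after_days = [WINDOW_END + timedelta(days=i) for i in range(1, span_days + 1)]

    before = [daily_sessions[d] for d in before_days if d in daily_sessions]
    after = [daily_sessions[d] for d in after_days if d in daily_sessions]
    if not before or not after:
        raise ValueError("need data on both sides of the September 14-18 window")

    baseline = mean(before)
    if baseline == 0:
        raise ValueError("no organic traffic before the window")
    return (mean(after) - baseline) / baseline


def looks_like_sharp_decline(daily_sessions, threshold=-0.30):
    """True if mean traffic after the window fell by at least the threshold."""
    return relative_change(daily_sessions) <= threshold
```

A result well below zero, with the drop beginning inside the window, is consistent with the pattern described above. It does not prove the cause: seasonality, tracking changes, or other updates can produce similar numbers, so treat it as a prompt to look closer.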

Many sites were impacted like this and I have yet to see any recover meaningfully.

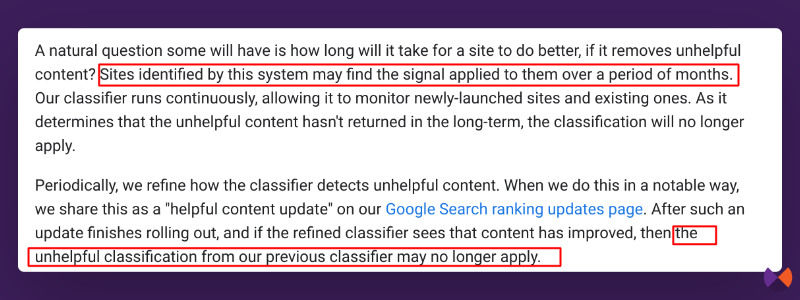

Previously, the helpful content system would apply a signal to a site for a number of months, and that signal could theoretically be removed if the helpful content classifier saw that the site's content had improved overall.

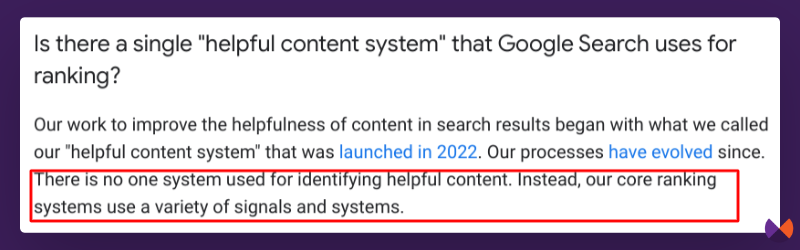

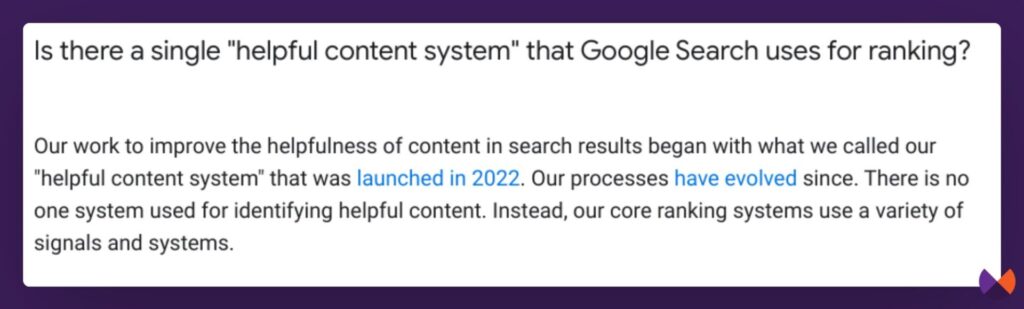

Google now says that their process for improving the helpfulness of search results has evolved since launching the helpful content system in 2022.

There is now no longer one system that is used to identify helpful content. Instead, the core ranking systems do this by using a variety of signals and systems.

I’ll come back to these signals and systems in a moment.

First, let’s talk about this next addition which I believe to be a significant change.

Google’s systems now assess the helpfulness of content at the page level

Again, previously:

And now, while site-wide signals are considered, helpfulness is no longer assessed on a site-wide basis, but at the page level.

What does it mean when Google says they use a variety of signals and systems to identify helpful content?

Google search has evolved tremendously over the last decade. It is worth taking time to digest this 2022 blog post by Google’s Pandu Nayak (former head of search and now the Chief Search Scientist at Google) called “How AI powers great search results.”

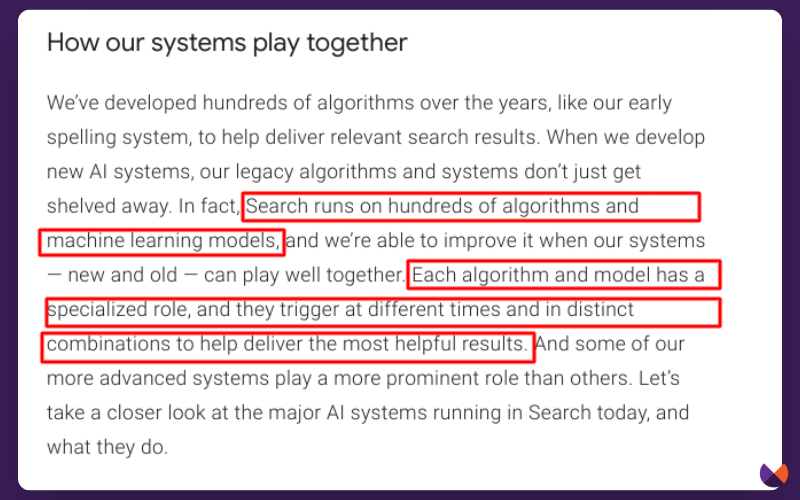

Today’s search runs on hundreds of algorithms and machine learning models.

If you have not read Google’s documentation on How Search Works in a while, I’d recommend setting aside time to digest it again. When I first read this document I had no concept of how machine learning systems worked.

Now Search seems so simple to me. It is learning to recognize and reward pages that people like and find useful.

1) Google uses signals from real searches to help their algorithms continually improve on returning results that users find relevant.

How would Google know what users are finding relevant? They use the signals gathered from every single search. They use signals like clicks, reads, scrolls, mouse hovers and more. They’ve spent years learning how to best use those signals in machine learning systems to understand whether the algo has done a good job at returning content the searcher found relevant.

How does Google know if the machine learning systems are doing well at adjusting rankings? The quality raters rate the results that are produced. And when results are ranking that are not ideal, their feedback helps improve the systems further.

Ultimately though, much of what determines rankings comes down to Google predicting which results are likely to satisfy searchers, based on signals that indicate a search was satisfying.

2) Those results are prioritized by using signals to help identify (E-)E-A-T.

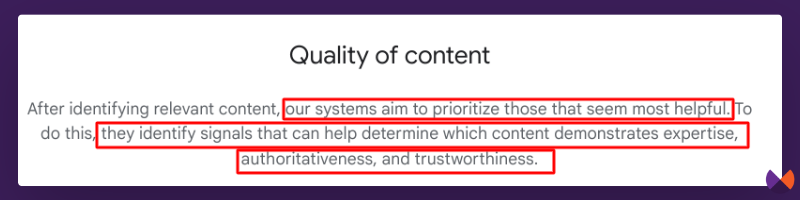

Here is what they say about content quality.

The systems prioritize content that seems helpful. They do this by identifying signals that can help determine which content demonstrates expertise, authoritativeness, and trustworthiness.

Links are the most obvious of the signals Google has used over the years. Links are the labels that helped Google learn to understand which content on the web is authentic and trustworthy. They most likely still do. But, it makes sense to me that Google is continually learning to use the other signals that are available in the world beyond links.

We have seen once again that with this core update Google is elevating sites that are authoritative, recognizable brands, or often now, real-world businesses with real customers who give them real money. This makes sense as these businesses will produce many signals that can be used to put together a conceptual picture that tells us that yes, they have the appropriate E-E-A-T searchers would expect for this type of query.

My thoughts on E-E-A-T have evolved over the years. I see E-E-A-T as one of the most important things when it comes to ranking, yet not a specific ranking factor. It is the concept that the world generally has of you or your business. It is the template by which Google rates sites for every single query. It is the template by which the world rates your authority within your topic realm.

There’s clearly more to search than just user preference and E-E-A-T. Many sites benefit from working on technical improvements that help the site perform better for users, or that help Google better crawl and understand content.

Ultimately though, search uses a variety of signals - user engagement signals, user satisfaction signals, signals that indicate others online consider your content valuable, and real-world signals that can point to legitimacy - to help show searchers content they are likely to find helpful.

Stop doing things for Google

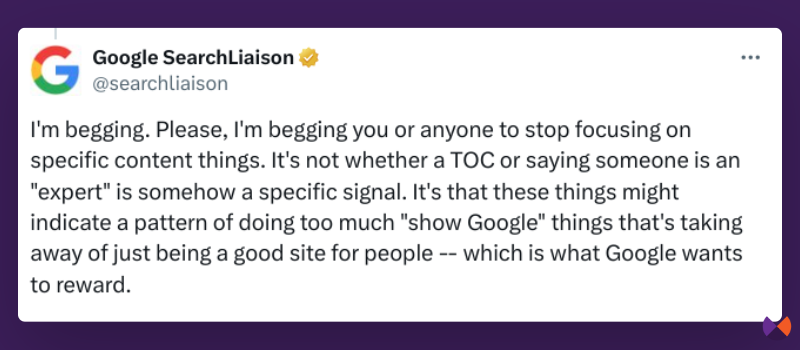

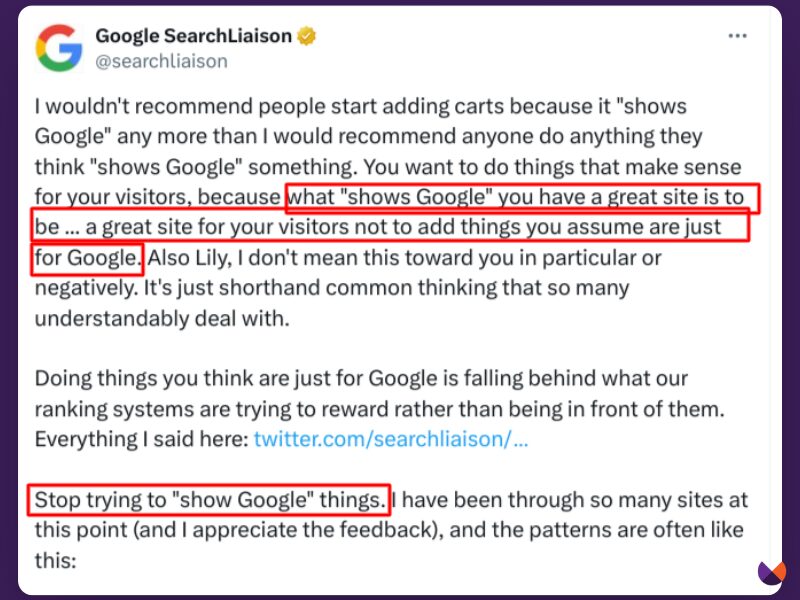

If I can convince you that the above paragraph is true, then Danny Sullivan’s recent Twitter advice to stop doing things for Google makes much more sense.

“What ‘shows Google’ you have a great site is to just be…a great site for your visitors.”

I know that sounds trite.

It’s frustrating for us to hear this kind of advice as SEOs because we are used to having specific advice to follow on how to craft content for SEO purposes. And also, much of the content that is still ranking is not obviously helpful. Google says that since August 2022 their efforts have reduced unhelpful content by 40%. That means there is more work still to be done!

It’s also frustrating because for many of you impacted by the previous September helpful content update or these chaotic March core and spam updates, there is so little guidance from Google on what to do other than the age-old, “create great content!”

And, of course, there is their guidance on what users tend to find helpful and reliable.

My recommendations

I'll be continuing to study the effects of the March core/spam updates. I believe we have entered into a new era of search where Google is getting even better at recognizing and rewarding helpful content.

I have a book coming out soon that will help you understand more about how AI has changed Google's search results and will be much more specific about what Google's systems are rewarding. The accompanying course (previously a workbook, now a full course) should also launch soon.

Here's some of what I've written on Google's rewarding of helpful content.

My newsletter, Search News You Can Use - I study everything I can about how search works and share it weekly.

Comments

Keep up your great work Marie… things are changing at such a fast pace, SEOs need to assess good research to know we are on the right track 🙂

Makes sense to me for it to be switched to page-level.

We’ve all known for years that it’s pages that rank rather than websites.

Great insight to how helpful content is changing with Google.

Google’s HCU document seems to marginalize E-E-A and specifically states the most important factor is trust. By focusing on producing content for people first we create trust. Of course E-E-and A play a part in creating trust. I also saw a post somewhere that said Google (I believe it was from Mueller) was lessening emphasis on links in the latest updates. Switching to nearly all AI with their PPC offering and the fact they pared back their contracts with Quality Raters I feel like they are putting more into their automated systems for content assessment. I am trying not to state absolutes here as nothing is absolute – but what do you make of these observations?