After a long wait of almost eight months, the Penguin algorithm finally updated. If your site was affected by Penguin on April 24, 2012, May 25, 2012, or October 5, 2012, and you have been working hard to clean up your link profile, then you were probably pretty excited to check the SERPs after the release of Penguin 2.0 was announced. But how many of you liked what you saw? My guess is that not many of you were happy.

It has now been two weeks and I have not seen a single credible case of a site that recovered with this update. I have personally reported some Penguin “recoveries”, but I put the word “recoveries” in quotes because what we saw was not really a recovery but rather an increase in impressions reported in Webmaster Tools (WMT). These increases were not large enough to translate into more traffic for any of these sites.

I asked John Mueller

I asked the following question in a John Mueller Webmaster Central Hangout:

We have seen very few credible cases of recovery from Penguin 2.0. Is it truly possible to recover? Do links need to be actually removed or is disavowing them enough? If a site cleans up its links and earns new links, can it truly recover?

(Skip to the 11 min mark)

Here is his reply:

Penguin 2.0 is an update of our algorithm that we just released, I think, a couple of weeks ago, so that’s something where, specifically for that algorithm you’d need to give it more time. But it’s definitely possible to recover. We do see that on our side that sites pop back up again after cleaning things up. We usually recommend cleaning the links up directly as much as that is possible, so actually removing those links, but putting them in the disavow file is also possible. Doing that at the same time is also a possibility depending on your workflow. So, some people put them in their disavow file and then try to contact them. That’s also definitely possible. If you clean those links up and you really work on creating new links then generally you can see a recovery there.

John went on to explain that if all you have is bad links, then you will not see a recovery. It is important to create good content that people will want to link to naturally. He said:

Sometimes we see people say that instead of focusing on the old links I’ll just focus on creating new or better links and I think to some extent that’s something you could do but personally I really like to recommend actually cleaning up the old issues first so that you don’t have this kind of anchor pulling you down when you’re working forward. If you can really clean up these old issues and make sure that they’re not causing any problems any more then any work that you put into creating better content that people want to recommend, you’ll be able to gain a much better foothold than if you are stuck with this old algorithmic problem that you’re not really resolving.

Later on in the hangout, he made the following statements:

One of the issues with the sitewide algorithms like Penguin and Panda is that it just takes a while to get all of this new updated data. We have to recrawl the web. We have to recrawl all of these links that are associated with your website and that’s something that can take weeks and months and even up to a year or so. Even when all of this data is updated from a technical point of view we have to first crawl all of these pages again to recognize that these links are gone and then update them in our index which can also take a bit of time and then at that point running the algorithm again to update the data is something that we’ll be able to use that new situation on the web. But, it just takes a lot of time to actually go through this process. So, if you’re seeing issues with regards to algorithms like that I really recommend cleaning everything up as much as possible so that you’re not stuck in the situation of doing an incremental update, of maybe updating 10 or 20% of the problems that you’re seeing there and giving it a half a year of time to update the algorithm again because that turn around time is just so high. So, really, taking the hint there and trying to resolve your problems completely and making sure that your website is significantly better is generally the right way to move forward here.

Interpretations

Several things stood out to me from this hangout, which is why I wrote this blog post rather than just tweeting the nuggets as I have been doing. (You can follow me on Twitter at: https://twitter.com/Marie_Haynes)

Takeaway #1

Does Penguin recovery happen immediately with a refresh or is it a gradual recovery as links get crawled?

This statement from John had me intrigued. When I asked why we hadn’t seen many cases of recovery from Penguin 2.0, he said

Penguin 2.0 is an update of our algorithm that we just released, I think, a couple of weeks ago, so that’s something where, specifically for that algorithm you’d need to give it more time.

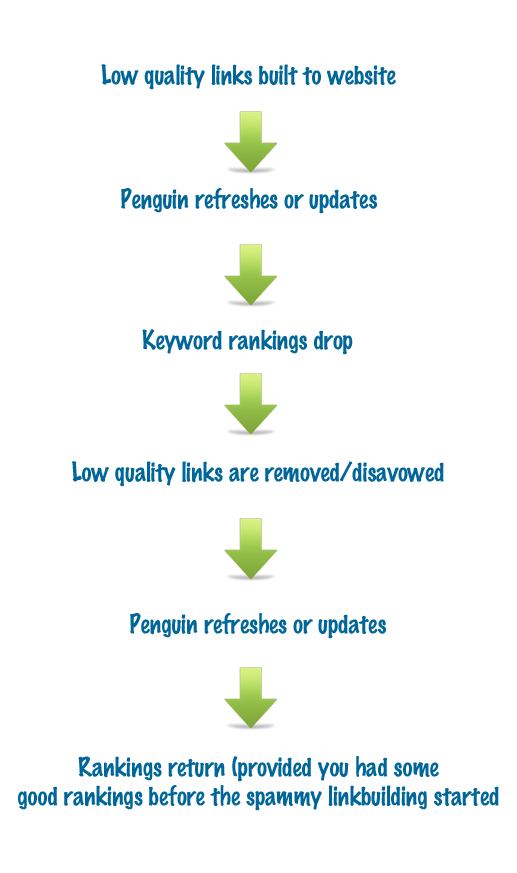

I’m confused by this statement! I thought that if a site had done the work needed to recover from Penguin, then the recovery would happen on the day of a Penguin refresh. This is my understanding of how Penguin recovery would work:

The way I understood Penguin, if you had done the work to disavow or remove the links that were causing the problem, then you would get the Penguin flag lifted off of your site as soon as the algorithm refreshed or updated and, provided your site had some good natural links, you would see recovery. I would expect that recovery to be almost instant.

The way John describes it, it sounds possible that when Penguin refreshes, Google assesses the links pointing to your site from that moment on, as it crawls them. If so, Penguin recovery would take some time, because it may take several weeks or even months for all of your problematic links to be crawled. This may fit with Tim Grice’s thoughts (in a conversation with Cyrus Shepard), where he wondered whether the disavow file was not processed until either a reconsideration request was filed (if you had a manual penalty) or Penguin refreshed:

@cyrusshepard looks like you need to activate the file through a recon or wait for an update

— Tim Grice (@Tim_Grice) May 30, 2013

Google has said many times in hangouts and elsewhere that the way the disavow file works is that you file it and then as Google crawls the web, an invisible nofollow tag is added to the links in the file (For reference see http://www.youtube.com/watch?v=7NNf_AhA1gw&feature=youtu.be&t=19m15s). As far as I am aware, they have never mentioned that the tool would not start working until a Penguin refresh. I don’t believe that Google would mislead us and tell us that the disavow starts working right away when in reality it doesn’t start working until there is a reconsideration request or a Penguin refresh, but is it possible that there is a bug in the tool that causes this to happen? It seems unlikely to me, but it’s possible.
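The disavow file itself is just a plain-text list, which Google documents as: lines starting with `#` are comments, `domain:` entries disavow every link from a domain, and a full URL disavows links from that single page. As a minimal sketch, assuming Python 3 and a list of bad links you have already identified in a link audit, the snippet below assembles such a file. The function name `build_disavow_file` and the example domains are hypothetical, and nothing here changes how quickly Google recrawls or processes the listed links.

```python
def build_disavow_file(urls, domains, note="Links that could not be removed"):
    """Return the text of a disavow file (hypothetical helper).

    Format, one entry per line:
      - lines starting with '#' are comments
      - 'domain:example.com' disavows every link from that domain
      - a full URL disavows links from that single page
    """
    lines = [f"# {note}"]
    # Whole-domain entries first, lowercased, blank entries dropped, de-duplicated.
    for domain in sorted({d.strip().lower() for d in domains if d.strip()}):
        lines.append(f"domain:{domain}")
    # Then individual pages, de-duplicated but otherwise kept as given.
    for url in sorted({u.strip() for u in urls if u.strip()}):
        lines.append(url)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical example data; replace with the results of your own link audit.
    text = build_disavow_file(
        urls=["http://spam.example.net/page.html", "http://spam.example.net/page.html"],
        domains=["BadLinks.example.com"],
    )
    print(text, end="")
```

You would save the returned text as a `.txt` file and upload it through the disavow tool. The sketch does not try to drop URLs that a `domain:` line already covers; listing both is redundant but harmless.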

Takeaway #2

Can you escape Penguin by just using the disavow tool, or do you need to actually remove links?

John is really vague in his answers when someone asks if using the disavow tool is enough. To me it sounds like it really should be enough, but because Google really wants to see as much webspam removed from the web as possible, they are encouraging sites to actively remove links as opposed to just disavowing them.

However, completely removing links (as opposed to just disavowing) seems to have been the key to some of the few cases of Penguin recovery that I have seen. One example is the WPMU recovery. These few recovery cases were usually on sites where there were footer links in clients’ websites, and therefore, under the site owner’s control. Once these footer links were nofollowed or the anchor text was changed to something more natural such as the site’s url, then recovery could happen with the next Penguin refresh.

I wonder if it is only possible to recover from Penguin if you are able to physically remove a huge percentage of links. In my experience with link removal for manual unnatural links penalties, I am usually only able to get 15-20% of links removed. Getting 100% would be a monumental task if you did not control the sites linking to you. Is it possible that sites that did not recover from Penguin failed to do so because they simply relied on the disavow tool and did not actually remove links?

Takeaway #3

You need some GOOD links!

I believe that many sites have not recovered from Penguin because they don’t have good, naturally earned links to support the site’s rankings. Or, in many cases, even if there are naturally earned links, they are unlikely to use keywords as anchor text, which means that they will not directly support your rankings for that keyword. When I first saw that there were very few cases of Penguin recovery reported after Penguin 2.0, I thought that this might be one of the main reasons that sites did not recover. Many sites that previously relied on low quality links in order to rank probably did not have the know-how to get good, natural links. But I have since heard several stories of sites that were ranking relatively well, hired a cheap SEO to try to improve their rankings even more, got affected by Penguin, disavowed the poor quality links made by their SEO, and did not even come close to regaining the rankings they had before hiring that SEO.

Conclusions

I can’t tell you how frustrated I am that we are not seeing Penguin recoveries. I am contacted several times a day by business owners who are desperate to recover from the work that a low quality SEO company did on their site. They are coming to me for link audits because the majority of the Penguin recovery advice out there says to find your bad links and remove or disavow them and then you will recover. But, it seems that it is not that simple. At this point I am refusing Penguin link audit work because I do not think it is fair to charge for an audit when removing and disavowing links has not helped any websites to recover.

Added November, 2015: I recently had a potential client ask me about this blog post where I had stated that I am refusing work. In the last two years I have seen a good number of happy Penguin recoveries, and YES, I am doing link audits and disavow work. You can read more here about my Penguin recovery services and also some of my recovery success stories.

What are your thoughts? Do you think it is possible to recover from Penguin?

Comments

I love Google. They talk about transparency and they are as transparent as a granite wall. I believe more of what comes out of Mahmoud Ahmadinejad’s mouth than what comes out of Matt Cutts’s.

Excellent analysis again, Marie. I agree entirely with your conclusion in takeaway #3: sites with good links that acquired some low quality links are still having trouble recovering. It seems from what John Mu was saying that it is just going to take ages to recover, and thus all Penguin recoveries will be painfully slow. I also thought this quote was quite interesting…

“We have to recrawl all of these links that are associated with your website and that’s something that can take weeks and months and even up to a year or so”

A year to crawl a link, seriously?

Painful, isn’t it? If the lack of Penguin recoveries seen is because of the time that it takes to recrawl links then I would expect that we should see some sites claiming recovery in the next few months….hopefully!

Excellent article, Marie. As John Mueller said, it can take up to a year to recrawl those pages and get those links removed from the Webmaster Tools link profile. Wouldn’t it be a nice idea to submit all the URLs (where the links have been removed) to Google’s index so that Google recrawls those pages faster than expected?

I had thought about that too. I was wondering if it would be helpful to somehow ping your unnatural links so that they get recrawled quicker.

I submitted the URLs where the links were removed to Google’s Submit URL tool as a test, and will see what happens in a few days. If the links get updated or crawled faster in WMT, I will post it here.

The problem is that it will take a year to understand if it worked or not. The question remains if for small business it wouldn’t be simpler to start over… or as someone mentioned once (I think Marie in Moz) a reset button

For me the main problem is the lack of transparency by Google. For the x number of thousands of websites hit that haven’t recovered, those that have removed backlinks and had their manual penalty removed – but are still tanked in the rankings. Why doesn’t Google just be honest and save everyone a lot of time and effort and say EXACTLY why websites are not recovering. The frustrating thing for me is that I see many websites ranked fairly high in Google that have poor quality link profiles, and manipulated link profiles, and yet they still rank well. For my part, I had a website that was hit by Manual Penalty in April 2012 (I had used Disavow as well as link removal) and that penalty was revoked in December 2012. I saw rankings for main keywords come back within 2 weeks BUT… May 2nd 2013 rankings dropped COMPLETELY for main keywords… although the website is still ranking for low traffic long-tail keywords. I have no idea why. I am really not sure if the Disavow file works… my gut feeling is that there is a type of ‘sandbox’ either for websites or website registrants…. I just wish Google would be open and say what it is.

True, I agree. The recovery process needs to be fast; only then can there be hope!

I think so. Not many of us are happy after the Penguin releases. But I think Google just did a good job; it means they are on our side, guiding us down the right path in internet marketing. Question: why are some sites still ranking on the first page of Google after they were hit by Panda and Penguin? It means Google is always a move ahead of us, just like in a game of chess.

Marie, is there any way to speed up recovery, especially for a sitewide link penalty? As John says, Google takes time to recrawl the web. So what if we update through a sitemap to speed up the recrawl? Do you have any ideas on how recovery from sitewide links can be sped up?

Unfortunately, in most cases, recovery from Penguin is not possible until Google refreshes the Penguin algorithm. The last one was October 5, 2013. When we have to wait for months in between algo refreshes, it makes a quick recovery quite unlikely.

I really don’t understand Google’s technique. On one hand they are able to deliver better results than Bing after crawling billions of pages, and on the other hand they say that we need to wait for a Penguin refresh. Their penalties are quick, but for recovery one needs to wait for a refresh? I really don’t know what technology they are talking about. Why isn’t Google focusing more on the recovery process than on attack mode?

Google will always concentrate on what is in Google’s best interest. Recovering websites that have previously cheated their way to the top is probably not their top priority!

I agree there is a lot of spam going on, but in reality there are a lot of penalties that need to be checked, for example the sitewide penalty, which is mostly caused by footer, sidebar, or theme links. Don’t you think that sites which have been affected and have removed those links should be given a chance to prove themselves?
