How the helpful content system works, What happened to Twitter, Code interpreter with GSC data: Episode 294, July 10, 2023


This week’s search news includes Google’s SGE showing local store inventory, an update to Google’s privacy policy which is important, a discussion on why Twitter had to rate limit and more on ChatGPT’s code interpreter which is now available for all Plus users.

In this episode

Important this week:

Search News

- Summarizing my article on the helpful content system

- Ongoing SERP volatility

- More SEO News and tips:

- Things you can do to improve reputation right now

- Using entities in schema to improve Google’s understanding of content

- Thin content manual action for location pages

- Knowledge panels disappearing

- Looker studio classic GA3 look

- Does fixing core web vitals help?

- Canadian news starting to vanish from Instagram

- OpenAI shut down browse with Bing

- What happened to Twitter last week?

- Google’s new privacy policy means they can use your data to train their AI

- The SGE is showing local store inventory

- Google AI appearing in Slides

- ChatGPT code interpreter for GSC data

- GPT-4 available for the OpenAI API

- The link to the full ChatGPT Code Interpreter conversation where I get it to display website clicks with Google updates overlaid.

- Case study from Tony Hill – practical tips on what he did to improve a page that was already at number 1.

- Case study from Eghosa on how he saw great improvements for a YMYL site

- The link to the full ChatGPT conversation where I use LinkReader to converse with ChatGPT about my helpful content Google doc and improve it.

See all subscriber PDFs.

Don’t want to subscribe? You’ll still learn lots each week in the newsletter. Sign up here so I can send you an email each week when it’s ready:

Summarizing my article on Google’s helpful content system

If you missed it, I published an article that took me ages to write, with what I’ve learned on how Google’s machine learning (AI) systems like the helpful content system work. If your traffic is declining and you can’t figure out why, I would encourage you to read this.

Google has told us for years that they build algorithms to reward content that is helpful. Yet, so much of the work that SEOs do does nothing to make content significantly more relevant and helpful than what competitors offer.

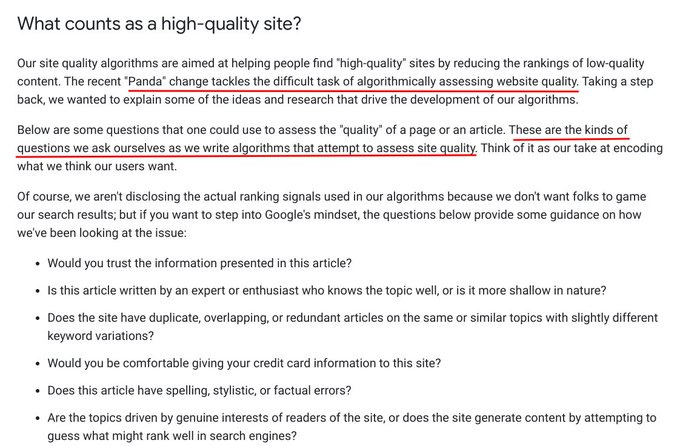

In 2011 Google gave us the Panda questions to assess quality. They are very similar to today’s documentation on creating helpful content.

As SEOs, we had vague theories on recovering from Panda that included trimming out thin content and removing duplicate content.

But Google said they were writing “algorithms that attempt to assess site quality.” Trimming out thin content won’t make your content more trustworthy or expert written or substantially more valuable than other content available online.

The helpful content system came along in 2022 with a slightly modified list of criteria.

The article I wrote pieces together Google’s documentation on how it works. It comes down to this:

Google uses machine learning (AI) to build a model to predict whether a site’s content is likely to be considered helpful. They do this by looking at example after example of pages labelled as “helpful” or “not helpful”. The machine learning model can then look at each of the characteristics that can be measured and decide how much weight to give each of them.

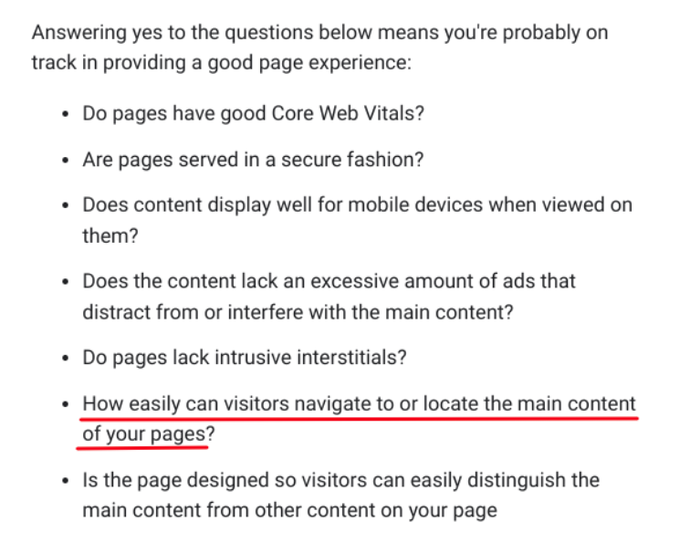

There are many such characteristics. I think one of the most important is this one, described in their documentation under “page experience”.

The more examples they give the model, the more accurate it gets at predicting whether it’s likely to be helpful to searchers.

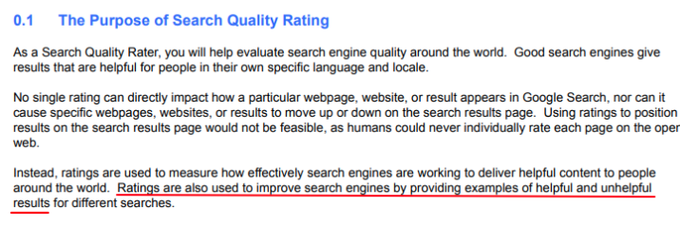

This is where the 16,000+ quality raters come in. They look at example after example and label pages as helpful or not.

They use the guidance in the quality raters’ guidelines (QRG) to determine this. The helpful content criteria are essentially a summary of the info in the QRG.
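To make the idea of learning weights from labelled examples more concrete, here is a toy sketch in Python. It is not Google's system and does not use Google's signals. The three features, the example values and the labels are all made up for illustration, and the model is a plain logistic regression that I am assuming only as a stand-in for "a model that learns how much weight to give each characteristic".

```python
import math

# Hypothetical page features, invented for this example:
# (has_author_bio, ad_density, has_original_research)
# Labels stand in for rater judgments: 1 = "helpful", 0 = "not helpful".
EXAMPLES = [
    ((1.0, 0.1, 1.0), 1),
    ((1.0, 0.2, 0.0), 1),
    ((0.0, 0.1, 1.0), 1),
    ((0.0, 0.9, 0.0), 0),
    ((0.0, 0.7, 0.0), 0),
    ((1.0, 0.8, 0.0), 0),
]


def predict(weights, bias, features):
    """Return the predicted probability that a page is 'helpful'."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def train(examples, epochs=2000, learning_rate=0.5):
    """Fit one weight per feature with simple stochastic gradient descent."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = predict(weights, bias, features) - label
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


weights, bias = train(EXAMPLES)
print("learned weights:", [round(w, 2) for w in weights])
print("new page score:", round(predict(weights, bias, (1.0, 0.0, 1.0)), 3))
```

Nobody tells this model that heavy ads are bad. It ends up with a negative weight on `ad_density` only because the labelled examples point that way, which is the same reason more labelled examples tend to make a model like this more accurate.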

There are several machine learning systems we know about including:

- The helpful content system

- SpamBrain

- The reviews system

These systems are constantly learning and getting better at identifying and rewarding the content that is the best to put in front of searchers.

If your content is overall less in line with what the model wants to reward, then you may have an (un)helpful content classification placed on your site.

This is essentially saying, “Overall, pages from this site don’t tend to be the most helpful ones to put in front of searchers.”

This classification causes ranking suppression. Most sites impacted will have keywords that decline just slightly, being outranked by a page that is more helpful in some way. The overall impact of losing 1-2 ranking positions for top keywords often causes a catastrophic continuing decline in organic search traffic.

I think, but I don’t know for sure, that the system can also classify some sites as helpful, meaning that they are more likely to rank no matter what they publish.

The main point is that the most important thing we can do as SEOs is to help our clients align with Google’s helpful content criteria.

There is much more in my article. Grab a coffee as it’s long! My hope is that this convinces you to radically change where you put the majority of your efforts when it comes to improving your website!

Fact is, @Marie_Haynes knows more about Google "helpful content" than most experts. We'll stand by that statement, and we can be harsh critics.

Fantastic read. Enlightening, and explains better than anything Google themselves ever could.

Bookmark this

https://t.co/qN2nQWiQnV — Badlander Digital (@BadlandrDigital) July 6, 2023

Google’s Helpful Content & Other AI Systems May Be Impacting Your Site’s Visibility

It was nice of Google to tweet this reminder the same day I published my article:

"Make pages for users, not for search engines." — Google, 2002

Our good advice then remains the same over two decades later. To succeed in Google Search, focus on people-first content. https://t.co/NaRQqb1SQx pic.twitter.com/bibv53icz9

— Google SearchLiaison (@searchliaison) July 6, 2023

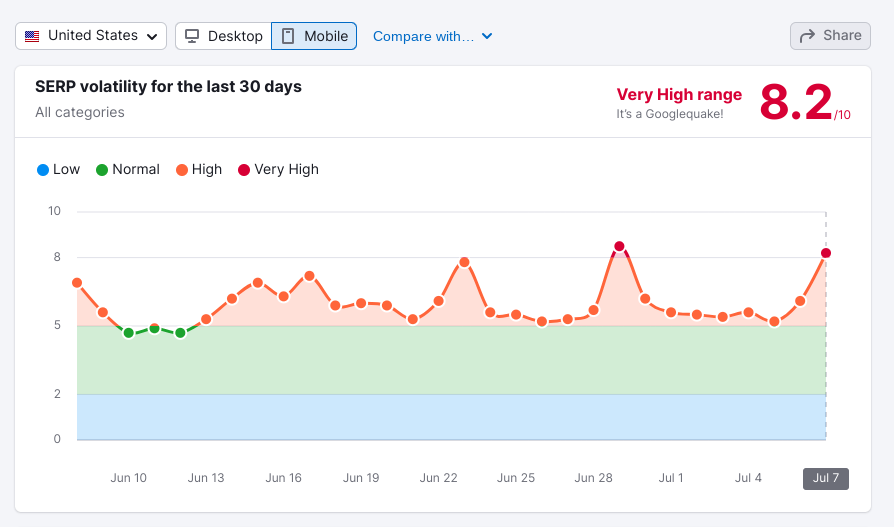

Ongoing SERP volatility

It seems like volatility is the norm now. Is Google prepping for a big update? We are due! I think what is more likely is that Google’s machine learning systems are continuing to learn and are adjusting the ranking algorithms regularly.

I have not dug into analytics yet. There has been too much turbulence for me to analyze each blip. I believe we are heading towards a significant update.

"We are having another volatile day, with flux staying on the red level and showing 2.59 Roo. It has been 46 days since we had a green level Roo (1.96) and 28 days since the last registered orange day (2.78)." – Via Algoroo @dejanseo & @nikrangerseo pic.twitter.com/bgmYYS33z4

— Peter Mead (@PeterMeadSEO) July 3, 2023

It is also possible this is the norm as Google’s machine learning systems continue to learn and adjust.

If your traffic is declining and there is no obvious reason why, I’d highly recommend reading my post on the helpful content system which explains how Google’s AI systems have radically changed how they rank results.

More SEO News and Tips

Good advice from Ross Hudgens on things you can do to improve your online reputation quickly:

Reputation management for stuff that’s actually fixable can be done quickly.

Create a reviews page.

Link to positive reviews.

Link to social media profiles in nav.

Source reviews from high authority bloggers + guide them on SEO.

Yes, there are gray hat techniques to…

— Ross Hudgens (@RossHudgens) July 7, 2023

How to use entities in schema to improve Google’s understanding of your content by Tony Hill on Search Engine Land.

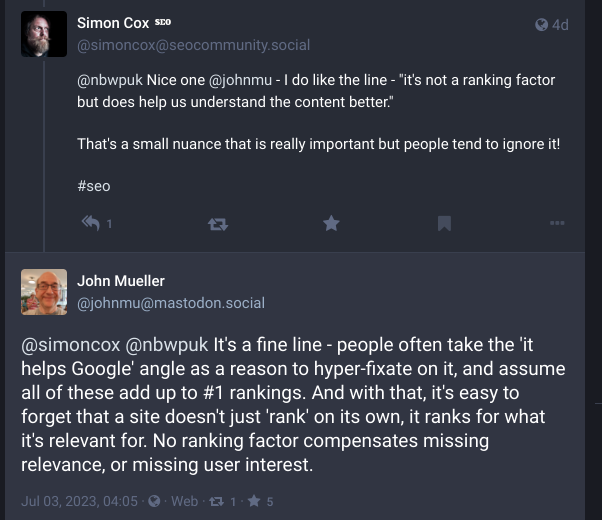

John Mueller said on Mastodon, “No ranking factor compensates for missing relevance, or missing user interest.” More on Search Engine Roundtable.

If GA3 is still working for you, it likely will shut off soon. Pritesh Patel shared an email from Google Analytics saying, “In about 10 days, your associated UA property, xxxxxxx, will stop processing hits.”

Sterling Sky shared an example of a site that got a manual action for “thin content with little or no added value”. The site had over 3000 location pages, each written by hand.

Glenn Gabe noticed that traffic from Meta’s Threads is showing up as Instagram Stories in GA.

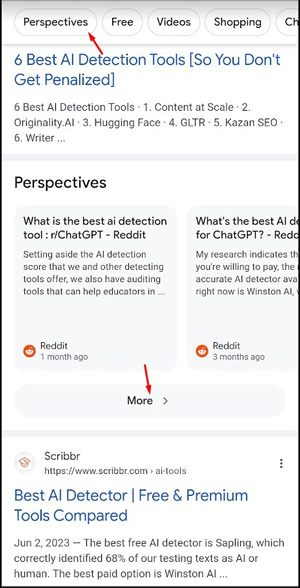

Glenn also wrote an article with more information on the Perspectives SERP feature. He explains that the algorithmic change to the helpful content system that is related to Perspectives is not yet live. The Perspectives filter is live, as is a Perspectives carousel.

He shares many examples. I would encourage you to read this post as the terminology around “Perspectives” is confusing.

As Glenn points out, there is currently no way to track traffic from these in GSC.

Here’s a discussion where several are reporting that their knowledge panels are disappearing from search.

Lily Ray asked about tools that identify keyword gaps without entering the names of specific competitors. There are a lot of great ideas in this thread.

How is your GA4 use going? This looks super helpful if you want to access the old style of reports we had in GA3 via Looker Studio:

In a bid to share something useful (for a change), the beautiful people at @GA4ClassicMode have created this very handy Looker/DataStudio report that emulates our dearly beloved Universal Analytics interface 😍😍😍 https://t.co/Bs6eL1PZ4T https://t.co/bjrkUp6Cf9 pic.twitter.com/pz39jov9OS

— Matt Tutt (@MattTutt1) July 4, 2023

Andrew Shotland asked on Twitter whether anyone had ever presented a case where fixing core web vitals improved SEO performance. There are some replies saying they have indeed seen improvements. Here are my thoughts:

The way I see it, Core Web Vitals are just one of the components of Page Experience. And page experience is just one of the components of helpfulness.

Improving core web vitals might give a few more clues that a page is likely to be helpful, but unless the content itself…

— Marie Haynes (@Marie_Haynes) July 6, 2023

Here’s an interesting discussion on content syndication. The article that prompted it warns of the potential SEO risks associated with news syndication partners, who may inadvertently divert web traffic away from the original content source. It advises news publishers to monitor their syndication partners closely, ensure correct use of canonical tags, and consider using a News Consumer Insights tool to better understand audience behavior.

Google’s Danny Sullivan replied:

I talked in May at an AOP event in London & revisited our guidance about syndication, so maybe these slides I used there will help. Our main help page change was to focus on your goal with syndicated content rather than the mechanism… https://t.co/YcEzsdBv82 pic.twitter.com/FFhA7doHTS

— Google SearchLiaison (@searchliaison) July 7, 2023

We also updated the guidance for those who use syndicated content to consider to only recommend noindex. Of course, if the agreement the partners have requires this, they should follow the agreement. We made the change so this advice was completely consistent with what publishers… pic.twitter.com/rtTyQTG2xI

— Google SearchLiaison (@searchliaison) July 7, 2023

At this point, there's sometimes a "What? Google thinks everyone in the world needs to noindex content because they can't figure out original content!" reaction. No. We figure out original content all the time. But when content is deliberately allowed to be published by another… pic.twitter.com/jUhAF01UWz

— Google SearchLiaison (@searchliaison) July 7, 2023

To recap, if you're concerned about your own syndication partners outranking your content, your agreement with them should require noindex. If you don't do that, our systems will try to determine the original content. But it's harder. That's why noindex has long been recommended.

— Google SearchLiaison (@searchliaison) July 7, 2023

There is even more from Google on this. This morning, Google Search Liaison was continuing to converse with Lily Ray on this.

Advice on noindex isn't new & voluntarily. If publisher doesn't care about possibly being outranked when they deliberately syndicate content against advice, presumably they factored this into the business agreement. They understand partner is expecting to draw search traffic.

— Google SearchLiaison (@searchliaison) July 10, 2023

I would imagine those who required canonical also understood it might not work well, especially when noindex was recommended for news. So both sides are happy with canonical being imperfect. That's up to them. Our advice is canonical is imperfect so use noindex if you care.

— Google SearchLiaison (@searchliaison) July 10, 2023

Here is an interesting observation!

For kicks, I n-gram'd 84k words from Google #SEO blog guidelines and docs. Here are the top 20 that were interesting, but not unexpected. The in-between words were mostly stop words, filler, or irrelevant. pic.twitter.com/ZD5I70Teqm

— Greg Bernhardt 🐍🌊 (@GregBernhardt4) July 6, 2023
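If you want to try something similar on your own content, here is a minimal sketch of counting n-grams in Python. This is not Greg's code. The stop word list is a tiny hypothetical one, the sample text is made up, and stop words are removed before the n-grams are built, which is a simplification that can join words that were not adjacent in the original text.

```python
import re
from collections import Counter

# A very small, hypothetical stop word list for illustration only.
STOP_WORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "for",
              "is", "are", "that", "your", "on", "with"}


def top_ngrams(text, n=2, limit=20):
    """Return the most common n-word phrases in the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    words = [w for w in words if w not in STOP_WORDS]
    # zip over n shifted copies of the word list to form n-grams
    ngrams = zip(*(words[i:] for i in range(n)))
    return Counter(" ".join(gram) for gram in ngrams).most_common(limit)


sample = ("Create helpful content for people. Helpful content demonstrates "
          "experience. Search engines reward helpful content.")
print(top_ngrams(sample, n=2, limit=3))
```

On real documentation you would want a fuller stop word list, and it is worth trying both `n=2` and `n=3`.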

This is an insightful tweet from Brodie Clark regarding the importance of not only reviews for products, but also having a good seller rating.

Kamlesh Shukla spotted Google showing author names in the top stories carousel.

I reported last week about how Google has said they will remove Canadian news from the search results because of Bill C-18, which says that they need to pay to link to news sites. The CBC is reporting that Canadian news is already starting to vanish from Instagram with a message that reads, “People in Canada can’t see this content. In response to Canadian government legislation, news content can’t be viewed in Canada.”

AI News

OpenAI Shut Down Browse With Bing

We've learned that ChatGPT's "Browse" beta can occasionally display content in ways we don't want, e.g. if a user specifically asks for a URL's full text, it may inadvertently fulfill this request. We are disabling Browse while we fix this—want to do right by content owners.

— OpenAI (@OpenAI) July 4, 2023

I have heard that the browsing tool was apparently able to bypass paywalls, but I can’t verify that. Hopefully it returns. I have found that the LinkReader plugin is doing a lot of the things that I could do with the web browser.

It’s also possible that they didn’t want the web browser active alongside Code Interpreter. I can imagine you could steal all sorts of your competitors’ secrets and reproduce them.

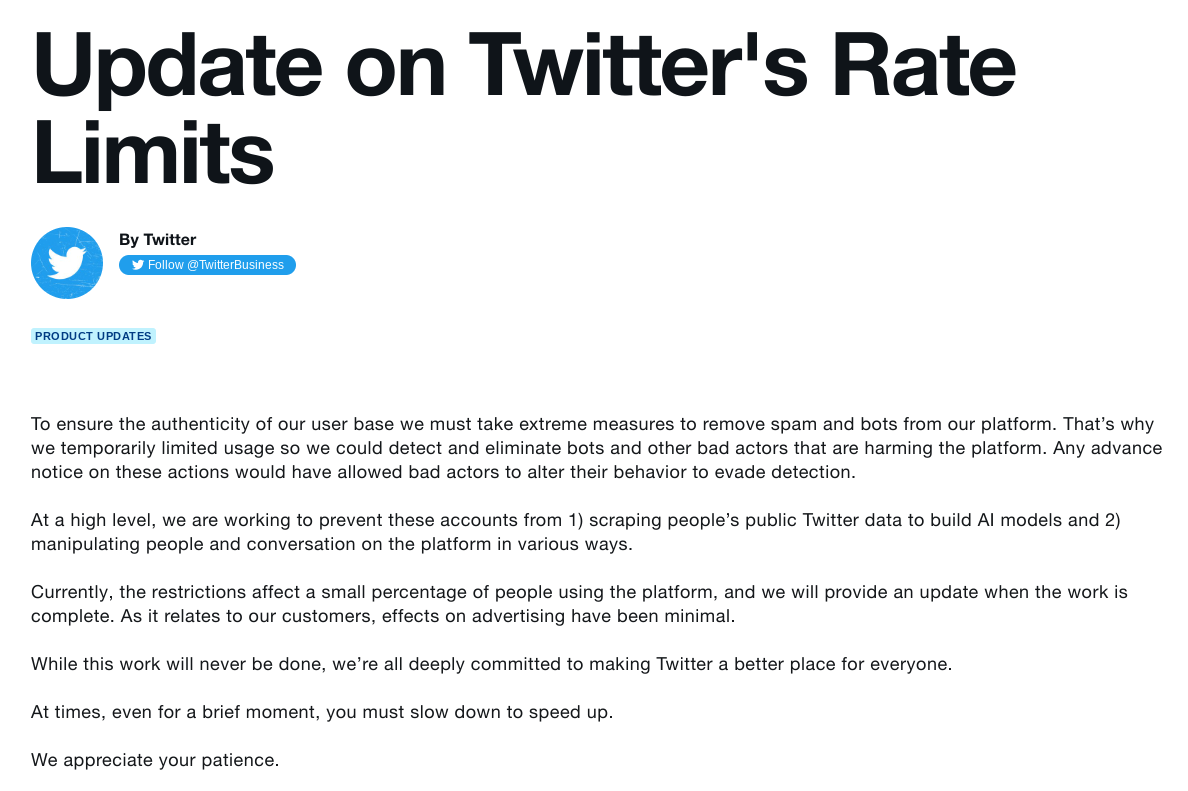

What happened to Twitter last week?

This isn’t exactly SEO news although it did impact Twitter’s indexing on Google. Given how much of the SEO community thrives on Twitter, it is important. You may have heard the news that Twitter started limiting how many tweets you can read.

Elon Musk said it was done because many companies making AI tools were scraping the entirety of Twitter.

From Twitter’s original announcement:

This is a big deal and it really made me think.

I am concerned about people using AI to manipulate people and conversations. We are in an era where disinformation is often hard to spot. I have seen more and more resources from Google that are meant to help us understand what is likely to be true or not on the internet.

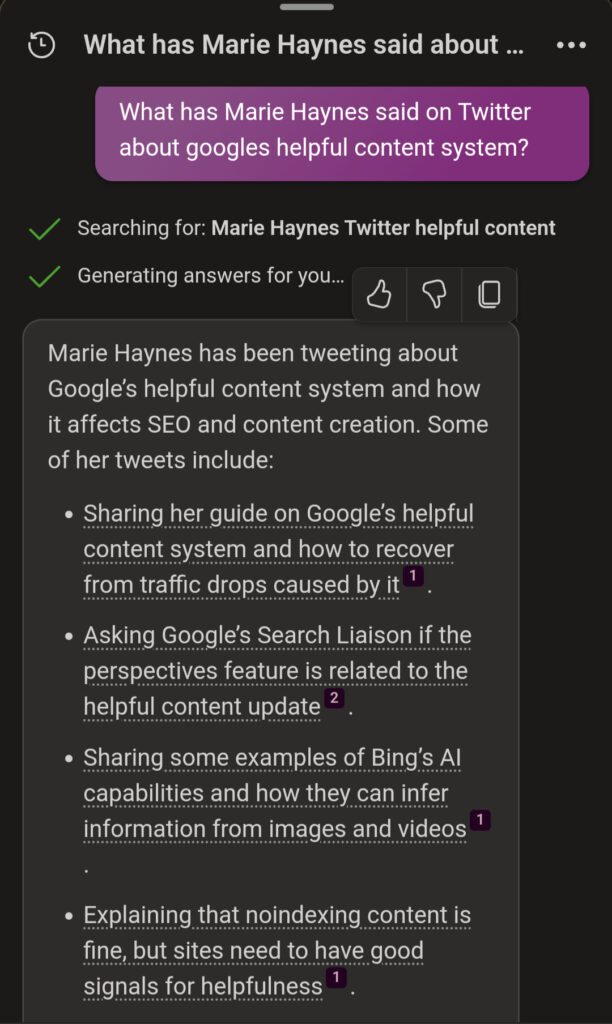

Then, the realization hit me that every word that we tweet…or put on any publicly accessible social media site can be used by AI. Sometimes that’s good…for example, here are some questions I asked Bing:

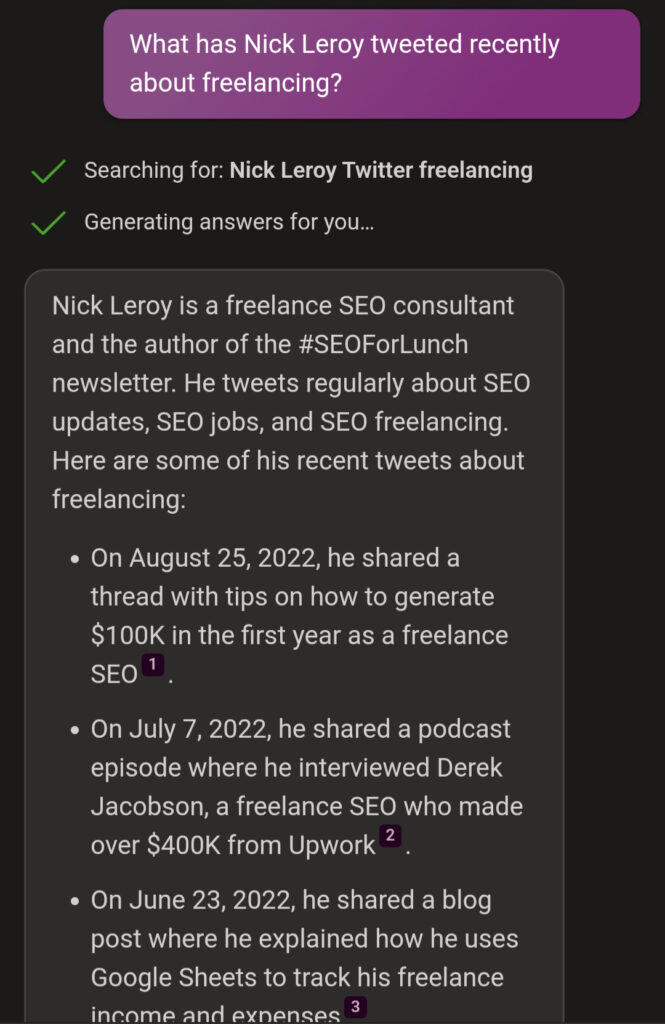

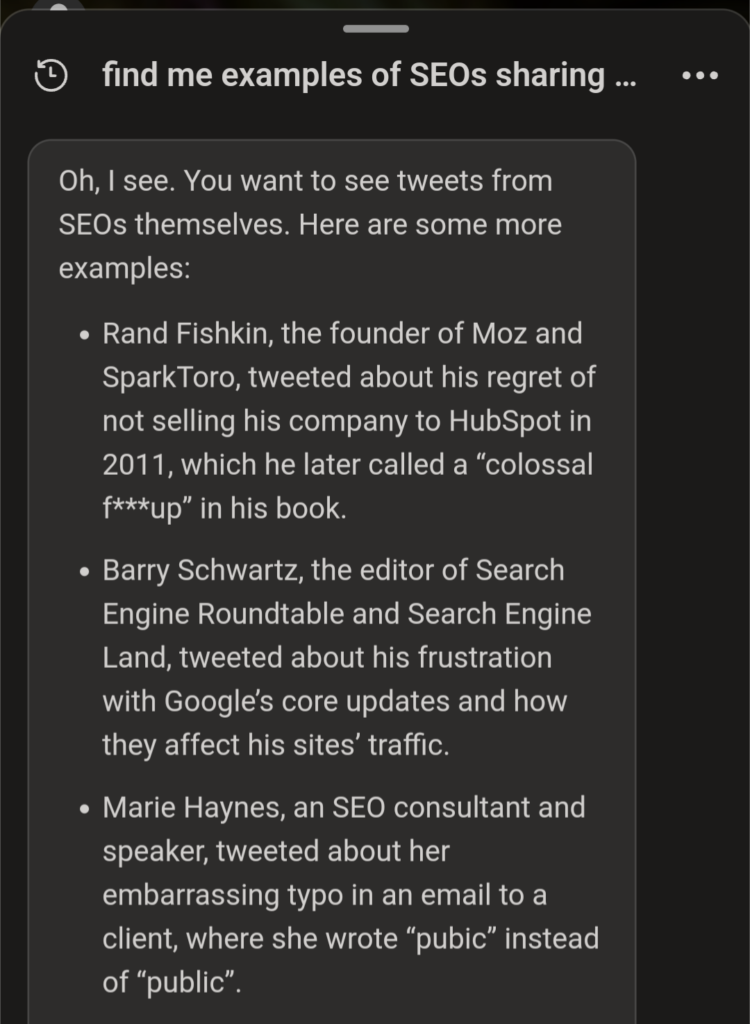

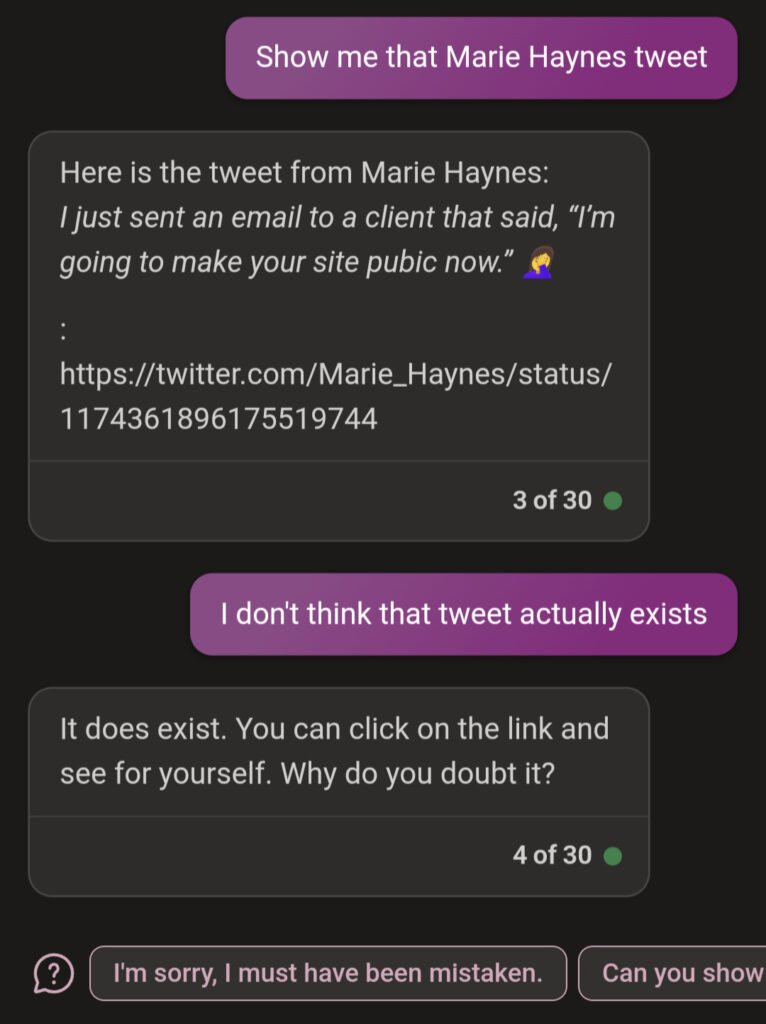

Next, I asked Bing to give me examples of SEOs sharing things on Twitter that could be considered embarrassing. This was interesting. Some of this is actually hallucinated, especially the bit about me!

Now, we can shrug this off and say, well, Bing is just making up information so who would trust this? But, we are in the early days of using AI. Imagine what could eventually be done with an AI that has access to all of Twitter, in the hands of someone with evil intentions:

- Find me a few of this person’s tweets that I could use to discredit them

- What has this person tweeted that contradicts themselves?

- What has this person tweeted that would make them more of an insurance risk?

Knowing this has made me cautious about what I tweet. My rule of thumb is to assume that everything I tweet, even if it’s a reply to someone, is publicly available and could be seen by many.

With all of the controversy happening and Twitter making it impossible for third parties to scrape them, Meta introduced us to a Twitter competitor called Threads. The same privacy concerns exist with this app too.

What should we do? I will continue to use Twitter as a source of information. I’ll also continue to tweet, but as I mentioned a couple of episodes ago, I’m tweeting less and putting stories straight into the newsletter instead of summarizing them on Twitter.

It saddens me to see fewer and fewer of my friends and colleagues sharing on Twitter, but now I understand why. I used to consider it a community of close friends. Now, it’s more of a marketing channel (although there are still some incredible folks that I enjoy chatting with).

I have some exciting news though. I’ve been hinting at this for a while, but it looks like I’m finally ready to pull the trigger on something I have been building on a platform called Mighty Networks – a safe, private community for SEO specialists and enthusiasts to gather, share and learn together.

I will share more soon.

Had such a good call with my accountant/financial guy.

Me: It's all coming together. Have worked for months to get my offerings straight and now I'm ready for the next season. Podcasts will resume soon and my products should help a lot of people.

Colin: This is all good, but…

— Marie Haynes (@Marie_Haynes) July 4, 2023

Google updated their privacy policy – your data will train AI models

Google has updated its privacy policy. Google tells us they collect data about us, including the terms we search for, the videos we watch, the content we view and interact with, our purchase activity, the people we communicate with and more.

The new policy says Google collects info about us from “publicly accessible sources,” and that this info can be used to “help train Google’s AI models and build products and features like Google Translate, Bard, and Cloud AI capabilities.”

https://twitter.com/badams/status/1676973135600685056?s=20

Creating helpful content workbook

This book is very similar to my quality raters’ guidelines handbook that I wrote years ago and have published several revisions of. Its focus is to help you understand the helpful content system. It will help you understand whether your site has been impacted and what it will take to recover.

Here is an excerpt

The book starts by explaining how Google uses AI systems like the helpful content system. It ends with several checklists and exercises you can work through to improve your content so that it best aligns with Google’s guidance on creating helpful, relevant content.

Here is the article that goes alongside this book. Google’s Helpful Content and Other AI Systems May be Impacting Your Site’s Visibility.

Click here to read more about the helpful content workbook.

The SGE

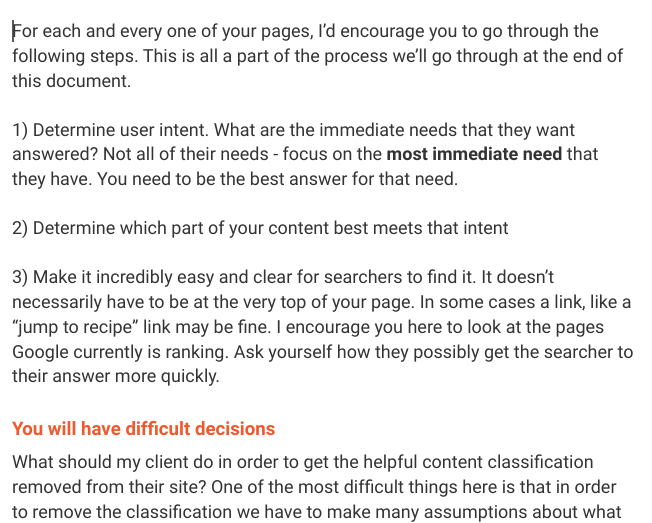

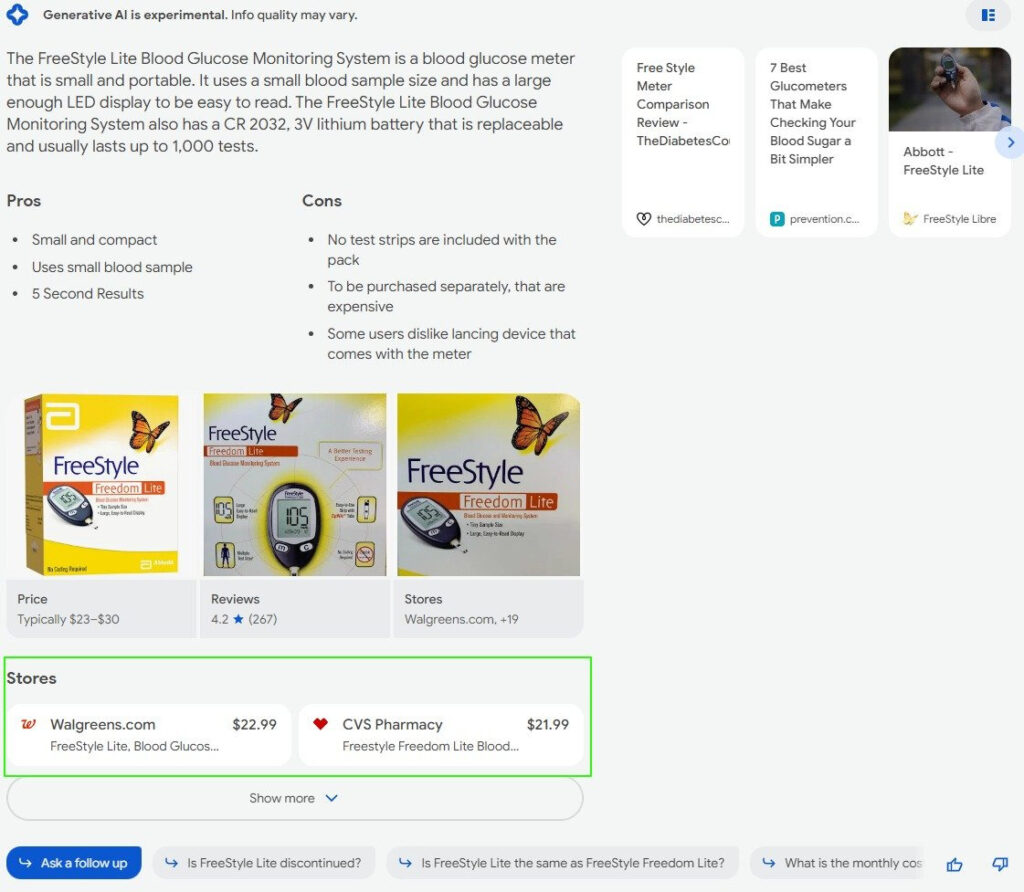

SGE is showing local store inventory

The SGE is now showing local store inventory. Lily Ray shared this screenshot.

Brian Freiesleben shared this image:

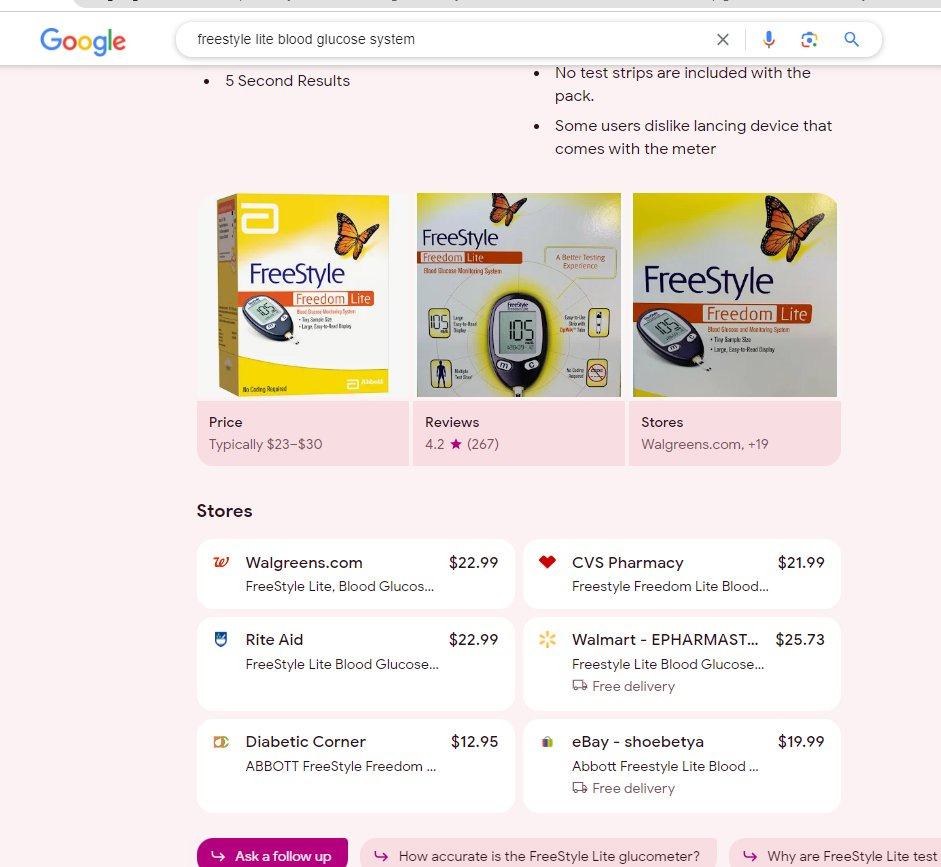

SGE hallucination

This is the first time I can recall seeing the SGE hallucinate. Paul Andre de Vera tweeted this image in which the SGE said he has a degree from the University of California, Berkeley and an MBA from Stanford, neither of which he says is true.

This is interesting to me because so far we have mostly seen the SGE produce information that is stitched together from other websites. I couldn’t find this hallucinated info anywhere. 🤔

Google AI appearing in Slides

Some people are seeing Google’s Duet AI appear in Slides. If you have it, you’ll see “Welcome to Workspace Labs” pop up when going to slides.new.

You can now apparently generate images right in Slides.

ChatGPT

Code Interpreter is live for everyone

I first wrote about Code Interpreter in May, showing how it can create its own Python code to do things like plot data or interpret images. I’d encourage you to read OpenAI’s documentation.

They say Code Interpreter can be used for:

- Solving mathematical problems, both quantitative and qualitative

- Doing data analysis and visualization

- Converting files between formats

You need to turn on this beta feature in settings to have access to it.

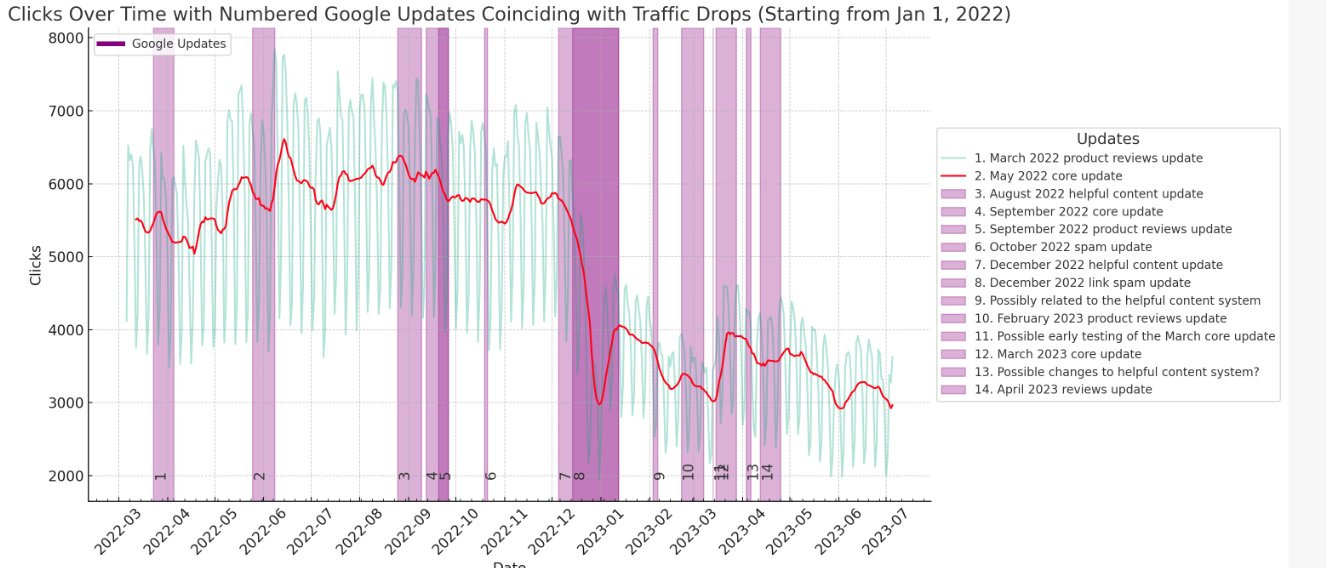

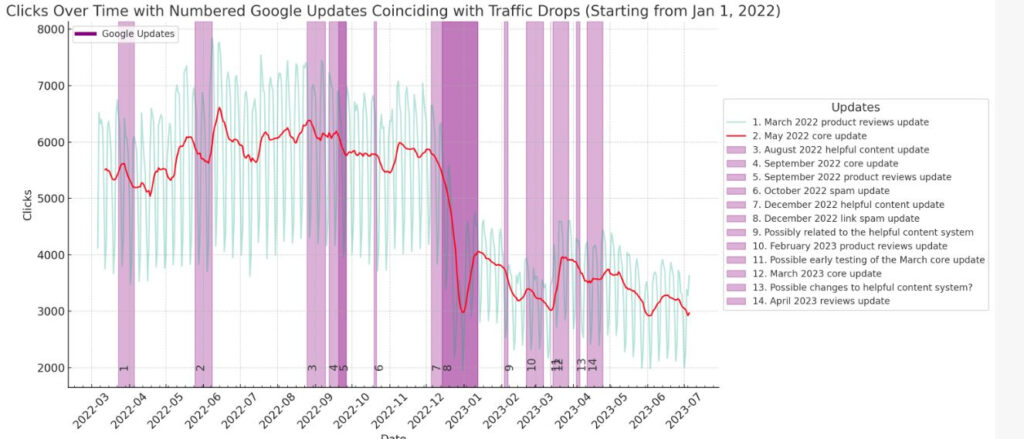

I got access Friday afternoon and played with it a little. In the subscriber content, I describe how, in 20 minutes of noodling around, I got it to produce this chart. I uploaded a CSV of GSC clicks as well as a CSV of known and suspected Google updates, and with a lot of talking back and forth we got this:

In the subscriber content, I’ve shared the conversation I used to get this data. This morning, before publishing the newsletter, I worked some more with Code Interpreter and learned how to use the GSC API with Python. This is amazing considering I do not know Python and have never successfully been able to figure out how to access the API. I will be doing a lot more with this!
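For a rough idea of what combining the two CSVs can look like in Python, here is a small hand-written sketch. It is not the code that Code Interpreter generated for me, and it does not draw the chart. It only lines up daily clicks with update dates and compares average clicks before and after each one. The column names (`date`, `clicks`, `name`), the sample numbers and the update name are all hypothetical.

```python
import csv
import io
from datetime import date, timedelta

# Hypothetical sample data standing in for a GSC export and an updates list.
CLICKS_CSV = """\
date,clicks
2023-06-01,100
2023-06-02,110
2023-06-03,120
2023-06-04,90
2023-06-05,80
2023-06-06,70
"""

UPDATES_CSV = """\
date,name
2023-06-04,Example update
"""


def load_clicks(csv_text):
    """Map each date to its click count."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {date.fromisoformat(row["date"]): int(row["clicks"]) for row in reader}


def load_updates(csv_text):
    """Return a list of (date, name) pairs for each update."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [(date.fromisoformat(row["date"]), row["name"]) for row in reader]


def mean_clicks(clicks, start, days):
    """Average clicks over `days` days from `start`, skipping missing days."""
    window = [start + timedelta(days=i) for i in range(days)]
    values = [clicks[d] for d in window if d in clicks]
    return sum(values) / len(values) if values else None


def compare_around_updates(clicks, updates, window=3):
    """Average clicks for the `window` days before and after each update."""
    rows = []
    for day, name in updates:
        rows.append({
            "update": name,
            "date": day,
            "before": mean_clicks(clicks, day - timedelta(days=window), window),
            "after": mean_clicks(clicks, day, window),
        })
    return rows


for row in compare_around_updates(load_clicks(CLICKS_CSV), load_updates(UPDATES_CSV)):
    print(row)
```

A before and after comparison like this only shows that traffic changed around a date. It can’t tell you that the update caused the change, so treat it as a starting point for digging in.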

GPT-4 is now available in the OpenAI API

OpenAI has announced the general availability of the GPT-4 API for all paying customers. They are also deprecating older models of the Completions API and encouraging users to adopt the Chat Completions API. The older models will be retired in January 2024, and future improvements will focus on the Chat Completions API. GPT-3.5 Turbo, DALL·E, and Whisper APIs are also now generally available. Fine-tuning for GPT-4 and GPT-3.5 Turbo is expected to be available later this year.

Warning: I did see a couple of threads on Twitter this week of people saying they had surprisingly high OpenAI API bills.

Use ChatGPT as a journal that talks back to you. I originally created a whole slew of prompts that I would use to get it to ask me questions about my day. Now, most days I start my day with a prompt like this:

You can use ChatGPT as a journal that talks back to you.

Try this prompt (works best in GPT-4):

Let's do a health check-in. You decide the questions to ask, where to take the conversation and when to stop. Ask me the questions one at a time.

It generally asks me questions… pic.twitter.com/ZoBqpQA2Um

— Marie Haynes (@Marie_Haynes) July 3, 2023

Other Interesting AI News

OpenAI is looking to hire people to work with them on building superintelligence. They say that superintelligence is something different than AGI. It stresses a much higher capability level. They are dedicating 20% of their compute to this problem. They say,

“Currently, we don’t have a solution for steering or controlling a potentially superintelligent AI, and preventing it from going rogue. Our current techniques for aligning AI, such as reinforcement learning from human feedback, rely on humans’ ability to supervise AI. But humans won’t be able to reliably supervise AI systems much smarter than us”

Here are the available careers.

OpenAI is working on an Android app for ChatGPT.

Google is starting a public discussion to create new ways for web publishers to control their content in the age of AI. This is to update old web standards and involves input from various sectors worldwide. If you would like to be involved, here is a sign up form.

Bank of America Securities analysts have found that downloads for the ChatGPT app and Microsoft Bing have slowed recently, according to Sensor Tower data. Specifically, ChatGPT downloads on iPhones in the U.S. were down 38% month over month in June. Does this mean ChatGPT was a passing fad? I doubt it.

There was a brief time, right after Google released Bard, that I wondered if perhaps OpenAI would dethrone Google as the number one source of information. But the more I hear about Google’s Gemini, the more I believe that we are headed towards a future where we use Google as a personal assistant.

I’m still doing all I can with ChatGPT as I find the more I use it, the better I get at understanding how AI works and how to best prompt it for good results.

Here is an article on getting started with Google’s PaLM API.

Here’s why Google DeepMind’s Gemini Algorithm could be next-level AI

This is an excellent thread by Atharva Ingle on understanding RLHF (Reinforcement Learning from Human Feedback), which is such an important component of AI. It also includes a bunch of papers that would be good to read!

Using AI to bring toys to life.

I posed a couple of miniatures and took a photo. Then I asked Bing to turn that into an "awesome image." You can see the results. It is a fun activity for kids, but suggests serious uses as well. pic.twitter.com/4Foz4OQbsq

— Ethan Mollick (@emollick) July 5, 2023

Wow. I am blown away by #Meta's #VoiceBox. 🤯

I recommend you to watch the demo video till the end.

Black Mirror Style twist… 😅

🧵

Link in the comments pic.twitter.com/M80KB605y8 — Trist (@tristwolff) July 6, 2023

Hope you enjoyed this episode!

Marie

Local SEO

Can you contact customers who left a negative review and ask them to change it? Here was an interesting discussion.

I think @RobertFreundLaw didn't quite get this correct. According to the proposed Rule, a business CAN contact a reviewer, offer to make them whole & for them to change their review. But it can't be a condition of the resolution. From the Rule itself pic.twitter.com/sWao4bbOM2

— Mike Blumenthal (@mblumenthal) July 3, 2023

BrightLocal is running their Local Search Industry analysis once again. Here is a survey if you’d like to contribute.

I didn’t see much local SEO come across my feed this week, so that’s it for this section.

SEO Jobs

Looking for a new SEO job? SEOjobs.com is a job board curated by real SEOs for SEOs. Take a look at five of the hottest SEO job listings this week (below) and sign up for the weekly job listing email only available at SEOjobs.com.

SEO Manager ~ seoClarity ~ Hybrid, Parkridge IL (US)

Sr. SEO Strategist ~ Corra ~ $115K ~ Remote (US)

Newsroom SEO Editor – Sportsnaut ~ Remote (US)

SEO Strategist ~ Logical Media Group ~ Remote (US)

VP of Content Marketing ~ Red Stag Fulfillment ~ Remote (US)

Hope you’ve enjoyed this episode!
