SNYCU Ep. 200 - Sept. 9, 2021

In this episode, we celebrate our 200th episode! We want to thank all of our readers so much for their support. This week the MHC team shares their favourite SEO tips, we discuss internal linking, prerendering, how to present new content to Google and lots more!

SNYCU Podcast

Podcast is on a break

If you would like to subscribe, you can find the podcasts here:

Apple Podcasts | Spotify | Google Play | Soundcloud

Ask Marie an SEO Question

Have a question that you want to ask Marie? You can ask it on our Q&A with Marie Haynes Consulting page, and Marie will answer some of the best questions each week in the podcast!

News about Google’s Algorithms

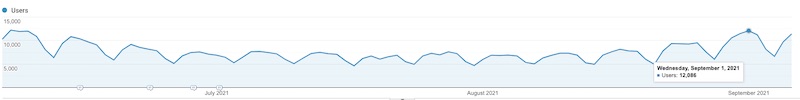

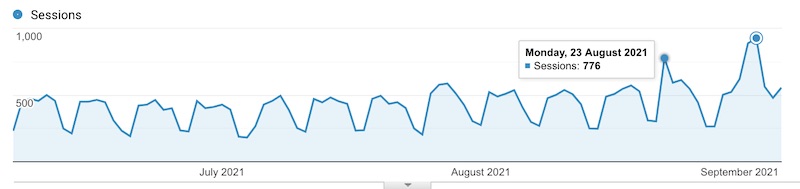

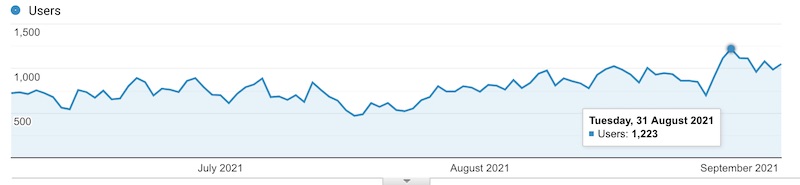

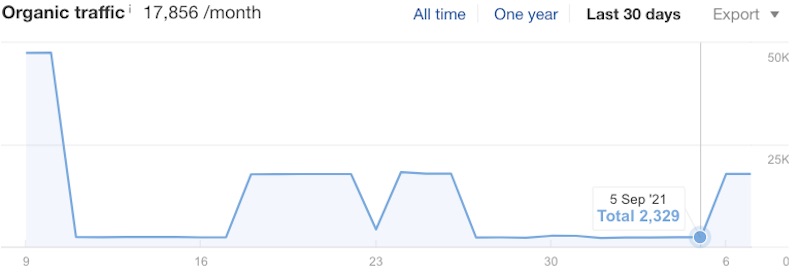

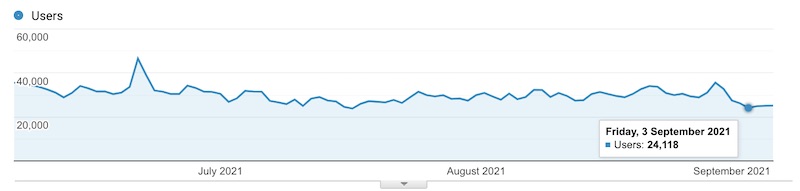

There have been a couple of reports of notable volatility in the search results over the Labour Day weekend. Glenn is reporting some interesting boosts to Discover traffic for a site that has been dropping in and out of Discover over this summer’s numerous updates:

Re: the latest volatility, I believe Google could have refreshed some type of site-level quality signal. E.g. This site showed up in Discover for 1st time w/Product Reviews Update, dropped out w/June Core Update, then surged again with 9/1 unconfirmed update. Interesting example: pic.twitter.com/t6KPGcFBTb

— Glenn Gabe (@glenngabe) September 6, 2021

Glenn suggests that this may be indicative of a refresh of some site-level quality algorithm.

Barry is reporting less chatter than at the start of the month, but says some folks are still reporting drops in the WebmasterWorld forums. Notably, almost all of the Google “weather” (SERP volatility) tracking tools showed a spike around September 4th, 2021, the Saturday of the Labour Day weekend (Labour Day itself fell on Monday, September 6th).

Google search ranking algorithm update on September 4th – Labor Day weekend update? https://t.co/VsXyQos5cV pic.twitter.com/fzunSVIEih

— Barry Schwartz (@rustybrick) September 6, 2021

After digging into the hundreds of sites that we monitor, this week we did see some improvements around September 1st, 2021. Last week we thought they might be seasonal, but they seem to have been sustained. Here are some examples:

Looking at September 4th, the date Barry and Glenn are reporting on, we did see some movement in a couple of different niches:

Again, as with the last few “suspected algorithm updates”, we are not seeing this for the majority of the sites we monitor. We suspect these variances could have a number of causes.

They could be the result of very small algorithmic adjustments (which happen multiple times a day!). They could also be the result of improved CTR, if a higher-ranking competitor’s title tag has been rewritten in a “negative” way by the changes Google has been making to titles over the past few weeks.

It is also possible that a major competitor has seen an unforeseen drop due to a technical issue or perhaps a manual action, which in turn has opened up the SERP competition.

Overall, since we are only seeing a small number of changes across hundreds of sites, we don’t currently believe this is a notable update. However, if you have seen significant changes to your traffic over the weekend we would love to hear from you!

MHC Announcements

Thank you for 200 episodes!

We made it! The MHC team wants to thank you for supporting us and SNYCU for 200 episodes! As a thank you, we’ll be hosting two giveaways: one to win an hour of consulting time with a senior analyst, and the other to win a one-year subscription to the premium version of SNYCU.

So how do you win? We’ll be hosting the draw in next week’s newsletter episode so make sure you’re keeping an eye on episode 201. All subscribers will be automatically entered to win, but premium subscribers will have an additional entry. If you’ve been contemplating upgrading for even more news, insight and industry tips, this is a great opportunity to do so.

For full contest rules and regulations, please visit our contest rules here.

Google Announcements

The page experience rollout is complete

Approximately six weeks after it began rolling out, Google has publicly announced that the page experience update is complete. In Google’s April post about this update, they mentioned that page experience wouldn’t play its full role as part of Google’s ranking systems until the rollout was finished.

Despite Google’s efforts to push webmasters toward better user experiences, page experience remains one of many ranking factors, and Google does not anticipate any ‘unexpected or unintended’ issues.

From our perspective, Google may place greater emphasis on this signal (and the items within it) over time. We’d be willing to bet that Google has much more on the horizon, but for now, this feels like a modest change.

The page experience rollout is complete now, including updates to Top Stories mobile carousel. Changes to Google News app have started to rollout as well and will be complete in a week or so.

— Google Search Central (@googlesearchc) September 2, 2021

Google has updated their How-To schema guidelines

Thanks Brodie for keeping us in the loop! John shared that Google has updated their guidelines for HowTo schema. At MHC we are big schema people, and highly recommend implementing suitable options on your website. If you’re new to schema and have how-to pages on your website, this guide is for you.

Heads-up: Google just updated their How-to Schema guidelines re desktop support. h/t @suzukik https://t.co/uQ3RONkpX6

This is just a doc update though – support first started almost a year ago: https://t.co/e1XHGatZIr (see GSC filters). Thanks to @JohnMu for passing this on. pic.twitter.com/2pkr6gZ5vY

— Brodie Clark (@brodieseo) September 8, 2021

Google SERP Changes

Featured Snippet change

Brodie Clark observed a change in the way Google bolds text within featured snippets.

The bolding of text within the FS is not new. The change specifically comes from the way Google is displaying paragraph content in featured snippets by presenting the whole paragraph as supporting text and bolding key passages dependent on the searcher’s query.

This change is not something you can really optimize for, so your approach to featured snippets should stay the same. Brodie does advise paying attention to what Google bolds in the FS for your queries. The bolded passages can be a helpful indicator of what Google is looking for in the content it displays.

Did you catch the recent update to Google's Featured Snippet results? It related to bolding of content in the snippet, allowing users to locate important text and answers faster. The same update actually applies to some PAA and KP results too. More info: https://t.co/mQ7wRit9AP pic.twitter.com/dR3tH177V6

— Brodie Clark (@brodieseo) September 1, 2021

MHC staff select their top tips and/or resources

Alec Brownscombe’s pick: JR Oakes’ handy Regex spreadsheet

One of my favourite tips comes courtesy of the SEO Tips section of our newsletter back in Episode 179. Not long after Google added regex filters to GSC, JR Oakes whipped up this super-handy spreadsheet that I continue to use frequently when in need of a quick lookup of Google click/impression/position/CTR data for a subsection of a site’s pages. JR’s spreadsheet allows you to paste in a list of URLs, which are then stripped down to their path and placed into a regex formula that you can then paste into GA or GSC’s new regex tool for URL filtering, helping us find the GSC or GA data for all of those URLs in short order.
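The core of the spreadsheet is to strip each URL down to its path and join the paths into one regex alternation. Below is a rough Python equivalent, written as our own sketch rather than JR’s actual formula. Three things are assumptions on our part:

- Duplicate paths are dropped.
- Special characters are escaped.
- The pattern is anchored at the end so that `/blog/a` doesn’t also match `/blog/abc`.

```python
import re
from urllib.parse import urlparse


def urls_to_path_regex(urls):
    """Strip each URL to its path and join the escaped paths into one
    end-anchored alternation for a regex page/URL filter."""
    paths = []
    for url in urls:
        path = urlparse(url.strip()).path or "/"
        if path not in paths:  # keep first occurrence, drop duplicates
            paths.append(path)
    # re.escape guards characters like "." or "?" that have regex meaning
    return "(" + "|".join(re.escape(p) for p in paths) + ")$"


print(urls_to_path_regex([
    "https://example.com/blog/a",
    "https://example.com/blog/a?utm_source=x",
    "https://example.com/shop/b-c",
]))
```

Because the query string is discarded, tracking parameters collapse onto the same path. You would then paste the printed pattern into a custom (regex) page filter in GSC or GA.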

This recently provided a shortcut for a site with a particularly chaotic site structure and a mess of near-duplicate content across different catalogues on their site. We were able to quickly verify our suspicion about a cross-section of near-duplicate pages: none were garnering any clicks, impressions, or position ranking of consequence in the past 16 months. We safely noindexed many of the near-duplicate pages, cleaning up the overall quality of the site’s indexed pages with zero traffic loss in the process.

Andrew Nguyen’s pick: The State of Link Building Report 2021 by Paddy Moogan and the Aira team

Aira’s annual State of Link Building report is always a favourite of mine. Every year they do a deep dive into the state of link building and gather insight from some of the best SEOs in the industry, so the report offers a wide range of experiences, opinions and insights. It covers a lot of ground: link building tactics and techniques, reporting, and questions specific to agency and in-house SEOs. Every section also includes comments and thoughts from many industry experts. This is a huge data-driven report that provides a wealth of information and insight into how the industry approaches link building.

We feel that links will always have a significant impact on rankings and it’s interesting to see how the industry is shifting from old trends to new ways of thinking when it comes to links!

Bre Baumken’s pick: content and page improvement ideas

In my 1.5 years at MHC, I’ve completed a lot of page comparisons. Here are some of the page/content improvements I’ve recommended most often:

- Ask yourself whether your content answers the top 20 or so commonly asked questions in your industry. Analyze multiple competitor pages in search of content gaps and seek ways to meet all needs on your site through your content offering. Also, strive to rank in relevant People Also Ask (PAA) boxes.

- Take a look at how easy your pages are to skim and read. How quickly can your users navigate to key answers or key content within your current page structure? Ask yourself the following:

  - Are you using enough headings/subheadings to make your content skimmable?

  - Are you using various stylistic elements to divide, organize and present content? Or do your pages read as giant walls of text lacking in multimedia?

Callum Scott’s pick: Google’s ability to reassess your site

My favourite tip comes from Episode 197 where we reported on a fantastic chunk of insights from John Mueller that Glenn Gabe detailed in this Twitter thread.

John was asked how long it takes Google to reassess a site’s quality after low quality content has been removed. This is very pertinent to me and to many of our clients who are experiencing traffic drops following core updates. Many of these sites share a common issue: they have plenty of good content, but they also have a lot of (often older) lower-quality content.

We know that Google takes into account all indexed pages of a site when they are assessing site quality. As far back as 2017, John stated the following:

“From our point of view, our quality algorithms do look at the website overall, so they do look at everything that’s indexed.” – John Mueller, August 22, 2017

Frequently we have seen good results after either consolidating low quality content, or pruning it from the index. Doing this appears to have helped these sites improve the ranking potential of their higher quality pages.

This recent help hangout provided us with some helpful insight as it suggests that it can take 6 months or longer for Google to reevaluate a whole site. This is because it takes time for Googlebot to reindex a whole site and gather all the related quality signals about it.

So if it takes this long, how do you know if you are on the right track with all the changes you are making? Sometimes we have thought testing out the approach on a small subset of pages could be a good idea. However, John made clear in this hangout that testing on a small subset of pages is not going to be enough for Google to assess a site as higher quality.

Instead he suggests using metrics from Analytics to see things like the engagement rate, time users spend on pages, and how these metrics differ before and after making improvements. While this wouldn’t be something Google uses directly for Search, it can be an indicator of the fruits of your labour.

Cass Downton’s pick: using Analytics to find broken links

This tip goes back to Episode 132 and comes from Kristina Azarenko, the founder of MarketingSyrup. It’s a quick and easy way to use Google Analytics to find any pages that are linking to a 404 page on your site.

In Analytics, click on Behaviour > Site Content > All Pages, and then, for the Primary Dimension, click on “Page Title”. This shows you the page titles for all the pages on your site that have received visitors. All you need to know is the title your 404 page uses; then use the “advanced” search bar to find it. So if your 404 title contains “404” or “not found”, you can search for that and it will show you any 404 pages that are getting visitors.
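If you export that report instead of searching it in the interface, the same filter takes only a few lines of Python. This is a minimal sketch that makes three assumptions:

- The export has been read into `(page_title, page_path, pageviews)` tuples.
- Your 404 template’s title contains “404” or “not found”.
- `find_404_rows` is a hypothetical helper name.

```python
def find_404_rows(rows, markers=("404", "not found")):
    """Keep rows whose page title looks like a 404 template, busiest first.

    rows: iterable of (page_title, page_path, pageviews) tuples, assumed to
    come from an exported All Pages report.
    """
    hits = [row for row in rows if any(m in row[0].lower() for m in markers)]
    # Sorting by pageviews puts the highest-priority broken pages on top
    return sorted(hits, key=lambda row: row[2], reverse=True)


rows = [
    ("Page Not Found | Example", "/old-a", 40),
    ("Home", "/", 500),
    ("404 Error", "/old-b", 90),
]
for title, path, views in find_404_rows(rows):
    print(path, views, title)
```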

Not only does this help you find 404 pages that are a high priority to fix, but you can see how much traffic that 404 page is getting, as well as which source is responsible for it.

Dylan Adamek’s pick: Jason Barnard describes exactly how to get a Knowledge Panel

Featured back in April’s Episode 178, Jason Barnard and his agency (KaliCube) came out with some amazing tools and content focused on Google’s Knowledge Graph. If you are a business or brand and want to establish yourself within Google’s Knowledge Graph, this article by Jason on Semrush is a must-read. Keep the information about your brand consistent across your website, your schema and a number of external profiles. Doing so can result in a knowledge panel that accurately represents your company. This not only protects you from showing potential visitors incorrect or outdated information, but over time it can also increase your overall brand awareness.

Jamie Larock’s pick: prioritizing Core Web Vitals in the grand scheme of things

Every site owner wants to make sure their Core Web Vitals are top notch, and they tend to want it done yesterday. After all, CWV are part of a ranking factor now so urgency is understandable. But, is panic really necessary?

CWV are one of many items that make up the Page Experience signal, and that signal is just one of the more than 200 ranking factors Google uses. Overall, the biggest benefit from CWV comes from moving from ‘poor’ or ‘needs improvement’ to ‘good’. The reality is that CWV currently seems to be a fairly lightweight factor. Good content and relevance trump page speed, and even Google has said this! For example, Martin Splitt and John Mueller have both indicated that bad content will not rank better just because the page loads fast. If your basement is flooding, it’s not time to fix the squeaky door. Make sure you’re not blinded by the CWV frenzy, and keep your priorities straight.
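The ‘good’, ‘needs improvement’ and ‘poor’ buckets come from fixed thresholds that Google applies to the 75th percentile of field data. Below is a minimal sketch of that classification. It uses the thresholds Google published for LCP, FID and CLS as of 2021, and `classify` is our own illustrative helper.

```python
# (good upper bound, needs-improvement upper bound) per metric, 2021 values
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "FID": (100, 300),   # First Input Delay, milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless
}


def classify(metric, p75):
    """Bucket a 75th-percentile field value into Google's CWV categories."""
    good, needs_improvement = THRESHOLDS[metric]
    if p75 <= good:
        return "good"
    if p75 <= needs_improvement:
        return "needs improvement"
    return "poor"


print(classify("LCP", 3.0), classify("CLS", 0.3))
```

This view makes the point above concrete. Shaving LCP from 2.2s to 1.8s leaves a page in the same ‘good’ bucket, whereas moving from 4.5s to 2.4s crosses two thresholds.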

Matt Baker’s pick: internal linking opportunities

This fantastic internal linking guide from Kevin Indig is split into two parts: a beginners’ section covering the basics and an advanced guide. It’ll help you recognize the importance of internal linking, and it also addresses some myths, misconceptions and best practices you should be aware of. We really enjoyed the section on ‘internal linking strategies’, which should help spark inspiration for your own site.

We mention this resource for two reasons:

- Some sites tend to do the basics well but struggle with the more sophisticated opportunities.

- Some sites we work with tend to create good value on their pages but fail to think about tactics to drive users deeper in the site (especially at the end of their post/article, for example). In other words, they’re unintentionally creating standalone value. Overall, we feel like it’s a huge missed opportunity if you’re doing this.

Opportunity-wise, consider all relevant link opportunities. Joshua Hardwick published an excellent guide on the Ahrefs blog (which we featured way back in episode 69) and Cyrus Shepard also had a solid Whiteboard Friday on the topic of maximizing internal links (featured back in episode 158) worth watching.

Sukhjit Matharu’s pick: which SEO task(s) moves the needle the most?

SEO improvement is typically not a one-item proposition! Great SEO encompasses a multitude of different elements and tasks, and sometimes it even takes the shape of a “kitchen sink approach” (to borrow from Glenn Gabe). It’s quite rare for our team to find a single issue that explains a site’s problems. Truth be told, the clients who comprehensively tackle a number of quality issues (rather than selectively focusing on one or two items) are often the ones who achieve the best results.

Uly Neves’ pick: links and site migrations

When migrating your website, there are a number of things to consider, and one of the most important, in our opinion, is links. If you are migrating URLs, remember to do two things:

- Update all internal links so they point to the new URLs instead of the retired ones.
- Set up a 301 redirect from each old URL to its new equivalent.

By redirecting your pages instead of shutting them down completely, you also have the chance to preserve the value of any external links that were previously pointing to the old URLs. Before you make a big mistake, be sure to consider the link equity you’ve worked so hard to acquire.
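Both halves of that advice can be sketched in a few lines of standard-library Python. This is an illustration under stated assumptions, not a migration tool. Three pieces are hypothetical placeholders:

- The `REDIRECTS` map and the example.com origins.
- The WSGI `app`, which stands in for wherever your redirects actually live (server config, CDN or CMS).
- The plain string replacement for internal links, which assumes absolute `href="..."` attributes.

```python
# Hypothetical old-path -> new-URL map built during migration planning
REDIRECTS = {
    "/old-page": "https://www.example.com/new-page",
}


def app(environ, start_response):
    """Minimal WSGI app: permanently redirect retired paths, 404 the rest."""
    target = REDIRECTS.get(environ.get("PATH_INFO", "/"))
    if target:
        # 301 signals a permanent move, so link signals can be consolidated
        start_response("301 Moved Permanently", [("Location", target)])
        return [b""]
    start_response("404 Not Found", [("Content-Type", "text/plain")])
    return [b"Not found"]


def rewrite_internal_links(html, old_origin, new_origin):
    """Point absolute internal links at the new origin instead of relying
    on redirects for your own links (assumes href="..." quoting)."""
    return html.replace(f'href="{old_origin}', f'href="{new_origin}')
```

For a local check, `wsgiref.simple_server.make_server("", 8000, app)` can serve the app. Updating internal links directly spares users and crawlers an extra redirect hop, and the 301s cover external links you don’t control.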

Summar Bourada’s pick: don’t underestimate the power of resources!

One beautiful thing about the SEO community is how open everyone is to sharing, helping and learning from each other. As the non-SEO team member at MHC, I love resources built for those of us who aren’t as knowledgeable about SEO and just want a little help. Aleyda Solis put together this resource for learning SEO, and it is absolutely amazing. It steers you in the right direction, helps you keep learning and suggests where to go next. If you aren’t familiar with it, it’s a great tool to keep bookmarked.

Such an amazing resource Aleyda has made. We strongly encourage you check it out!!! https://t.co/ZiXCqoLhbe

— Marie Haynes Consulting 🐼 (@mhc_inc) July 9, 2021

SEO Tips

Diving into internal linking

Another great Twitter thread this week from Will Critchlow regarding internal linking!

I wanted to talk this week about one of the most advanced SEO subjects there is: internal linking

— Will Critchlow (@willcritchlow) September 3, 2021

While the tweets break down the specific findings from tests conducted at SearchPilot, Will also shares some of the biggest takeaways:

- Unsurprisingly, internal links are shown to be incredibly important across many different tests.

- Sometimes the biggest benefits of internal linking are not to the page the links are pointing to but to the page where the internal links are located.

That being said, does this mean you should add as many internal links to your pages as possible? No, that’s not recommended. In the classic PageRank model, a page’s PageRank is divided among all of the links on that page, so every additional link dilutes the value passed through the others. Moreover, the algorithms that assess link authority can be incredibly complex. The Twitter thread is rather long, but there is a wealth of information in the case studies embedded within the tweets. We recommend giving them a thorough read!
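The dilution effect is easiest to see in the textbook version of PageRank, in which each page splits its score equally among its outgoing links. Below is a minimal power-iteration sketch. It implements the simplified formula from the original PageRank paper, not Google’s current link algorithms. The 0.85 damping factor and the tiny example graph are illustrative assumptions.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Classic simplified PageRank by power iteration.

    links: dict mapping each page to the list of pages it links to.
    Each page passes damping * its score, split equally across its links.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        new = {p: (1 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                share = damping * rank[page] / len(targets)  # the dilution
                for t in targets:
                    new[t] += share
            else:
                # Dangling page: spread its score evenly across all pages
                for t in pages:
                    new[t] += damping * rank[page] / n
        rank = new
    return rank


ranks = pagerank({"home": ["a", "b"], "a": ["home"], "b": ["home"]})
print({p: round(r, 3) for p, r in sorted(ranks.items())})
```

In this graph, adding a third link from `home` would cut what each existing link passes from half of home’s share to a third.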

Prerendering: what you should know

Martin Splitt recently provided some brief but helpful comments about how Googlebot views prerendering. Andrew Cilio asked whether all website functionality needs to work for Googlebot the same way it does for a user. Martin responded, “Not for Google Search.” Andrew also asked: “Testing and the content is in the DOM, but does not ‘work’ when you go to open the hamburger menu for instance on pre-rendered version. Some things like ‘read more’ and dropdowns do not work. Will this be an issue for indexing and/or ranking?” Martin said, “No.”

What you need to know about HowTo Schema

Interested in using How-to Schema in your content?

I've been tracking this feature closely since rich result support on Google first started in May of 2019.

Here's 4 little-known facts about How-to Schema rich results with relation to SEO 👇

— Brodie Clark (@brodieseo) September 6, 2021

Good tips from Brodie, as always!

Get the overall implementation validated, but also check that the jump-to links in your how-to schema markup are accurate. This is a key box to tick when optimizing your structured data. A searcher who clicks on a step wants more information about that specific step in the process. Sending them to the top of the page to look for it again makes for a poor user experience. We recently corrected wonky jump links on a client’s page and immediately observed improved engagement on mobile!
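One way to catch wonky jump links is to build each step’s `url` from an on-page anchor and then confirm that every anchor actually exists in the page’s HTML. Below is a minimal sketch of that approach. It assumes each step is a `HowToStep` whose `url` carries a `#fragment` matching an element `id`. The helper names, example URL and step ids are hypothetical.

```python
from html.parser import HTMLParser


def howto_jsonld(name, page_url, steps):
    """steps: list of (anchor_id, step_name, step_text). Each HowToStep's
    url points at the on-page anchor so a jump link lands on that step."""
    return {
        "@context": "https://schema.org",
        "@type": "HowTo",
        "name": name,
        "step": [
            {"@type": "HowToStep", "name": step_name, "text": step_text,
             "url": f"{page_url}#{anchor_id}"}
            for anchor_id, step_name, step_text in steps
        ],
    }


class IdCollector(HTMLParser):
    """Collect every id attribute value that appears in the HTML."""

    def __init__(self):
        super().__init__()
        self.ids = set()

    def handle_starttag(self, tag, attrs):
        for key, value in attrs:
            if key == "id" and value:
                self.ids.add(value)


def missing_anchors(jsonld, html):
    """Return step URLs whose #fragment has no matching id on the page."""
    collector = IdCollector()
    collector.feed(html)
    return [step["url"] for step in jsonld["step"]
            if step["url"].split("#", 1)[1] not in collector.ids]
```

With `<h3 id="step2">` on the page but `#step-2` in the markup, `missing_anchors` would flag that step’s URL before searchers land at the top of the page.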

How Nintendo made their homepage twice as fast

This is a super interesting case study in which an ecommerce website development company called BlackBird cloned the HTML of Nintendo’s homepage to see how much faster it could be made.

The objective of this study was to determine where another website’s pain points are in terms of speed which can hopefully be turned into a checklist of things to avoid and things to improve. Here are some of their findings for Nintendo:

- Lazy load images below the fold but not the ones above the fold (ATF). You want your ATF images to render as quickly as possible. Do lazy load images in the side panels.

- Say no to anti-flicker snippets. A/B testing tools typically recommend anti-flicker snippets, but BlackBird found that they actually slowed the homepage down significantly.

- The legacy Typekit CSS request is render-blocking, which causes delays. BlackBird says to remove it if you’re not using font files from Typekit.

- Self-host Google font files.
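The first recommendation, eager above-the-fold images and lazy ones below, can be expressed with the browser’s native `loading="lazy"` attribute. Below is a minimal sketch of a template helper that applies it. It assumes you know how many images render above the fold, and that count really depends on the layout and viewport. BlackBird may well have used a different lazy-loading technique.

```python
from html import escape


def render_images(images, above_fold_count):
    """Render <img> tags: the first above_fold_count load eagerly so they
    paint fast, the rest get native loading="lazy".

    images: list of (src, alt) pairs in page order.
    """
    tags = []
    for i, (src, alt) in enumerate(images):
        loading = ' loading="lazy"' if i >= above_fold_count else ""
        tags.append(f'<img src="{escape(src)}" alt="{escape(alt)}"{loading}>')
    return tags


for tag in render_images([("hero.jpg", "Hero"), ("side.jpg", "Side panel")], 1):
    print(tag)
```

Keeping the hero image eager matters because lazy-loading it can delay Largest Contentful Paint, the opposite of the goal.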

Even if you don’t have major page speed problems, improvements never hurt now that Core Web Vitals are part of a ranking factor. Check out their article for much more detail on their recommendations for Nintendo’s homepage.

Advice for presenting new content to Google

In this Twitter discussion, John Mueller approved of some advice/feedback given by Lyndon NA to a website owner who was claiming to have crawling issues with new content.

https://twitter.com/JohnMu/status/1435256146223419392

If you have new content that is not being crawled by Google for at least a few weeks, you need to showcase to Google why those pages are important. As we know, Google does not index every page in existence so you need to make every attempt to make it obvious to Google that your pages are of importance.

- Are you prominently displaying important site links? The website owner had placed the links in the footer of the page, and they seemed to rotate out or disappear often. That placement does not signal that these pages are important.

- Are you using internal linking on your pages? The website owner had few internal links and as we know, internal links are incredibly powerful in communicating to Google which pages you find important.

- How strong is your internal linking? The website owner was found to have poor internal linking (out of the internal links which were present) which can easily cause pages to be buried and not given much weight.
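A quick way to spot new pages that are buried is to count how many distinct pages link to each of them in a crawl export. Below is a minimal sketch that makes three assumptions:

- Links are available as `(source_url, target_url)` pairs.
- Self-links shouldn’t count.
- The `min_inlinks` cut-off of 2 is an arbitrary placeholder.

```python
def weakly_linked_pages(internal_links, pages, min_inlinks=2):
    """Return pages linked from fewer than min_inlinks distinct pages,
    weakest first.

    internal_links: iterable of (source_url, target_url) pairs from a crawl.
    pages: the URLs you care about (e.g. newly published content).
    """
    sources_by_target = {p: set() for p in pages}
    for source, target in internal_links:
        if target in sources_by_target and source != target:  # skip self-links
            sources_by_target[target].add(source)
    counts = {p: len(s) for p, s in sources_by_target.items()}
    weak = [p for p, c in counts.items() if c < min_inlinks]
    return sorted(weak, key=lambda p: (counts[p], p))
```

Pages near the top of that list are the ones that most need prominent, stable links from relevant pages.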

In short, we would agree with Lyndon in that the signals the website owner is giving to Google are not screaming the importance of these new pages or content. If you want Google to crawl/index your pages and new content, you need to do everything you can to highlight what you consider to be important and valuable.

How to approach republishing content

Recently a Twitter user asked John whether republishing content on other platforms such as LinkedIn could negatively affect a site as duplicate content. According to John, if the site owner’s goal is to reach a broader audience on different platforms, there should be no issue with this type of duplicate content. However, issues can arise if site owners want their own site to be the one that ranks for that content. In that case, syndicating the content is not recommended by Google, since the republished copy on another platform may rank instead.

https://twitter.com/JohnMu/status/1434811726764068864

Google Help Hangout Tips

Google says you should avoid doing this

You can use the sameAs property to indicate the subject (or entity) of your page is the same as the one represented on a different site. For example, for a given brand, you can use sameAs to indicate their Wikipedia page, their social profiles, contact pages, etc. But what about including the URL to their knowledge graph ID (if one exists)? John Mueller answered this question in last week’s help hangout, and said that while you technically can do this, it is probably not a good idea. This is because the ID that Google uses could change over time, breaking that link. Knowledge Graph expert, Jason Barnard, weighed in on this topic on Twitter, and gave a few different examples of when a knowledge graph ID for an entity may end up changing: reconciliations, dupings (and de-dupings), things being lost and then recreated at a later time, or entities moving from one vertical to another.

Yes, I have many examples (including me !)

Three example cases

– Lost and re-created

– Duped (and then De-duped)

– moving from one vertical to another

All in all, pretty easy to "lose along the way"

…

— 𝄢 Jason Barnard 🇺🇦 (@jasonmbarnard) September 7, 2021

You really want to be using sameAs for URLs that are more stable, and as Jason says, nothing is more stable than a page on a domain you own:

But the most stable over time (if you are smart) is going to be the Entity Home (a page on a domain you own and control).

Establish that firmly in Google's mind and life gets much easier when communicating with Google about that entity 🙂

That's where I am headed now 🙂 pic.twitter.com/niKyaioPiW

— 𝄢 Jason Barnard 🇺🇦 (@jasonmbarnard) September 7, 2021
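Putting that advice into markup means pointing `url` at your entity home and keeping `sameAs` to profile URLs you expect to stay stable. Below is a minimal sketch that builds Organization JSON-LD and filters out Knowledge Graph ID links. It assumes such links can be recognized by a `kgmid` query parameter in a Google search URL. The names and URLs are placeholders.

```python
import json
from urllib.parse import parse_qs, urlparse


def organization_jsonld(name, entity_home, profile_urls):
    """Build Organization JSON-LD whose sameAs keeps only stable profile
    URLs, skipping Knowledge Graph ID links (assumed to carry kgmid=)."""
    stable = []
    for url in profile_urls:
        if "kgmid" in parse_qs(urlparse(url).query):
            continue  # KG IDs can change, so don't hard-code them
        if url not in stable:
            stable.append(url)
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": entity_home,  # the page on a domain you own and control
        "sameAs": stable,
    }


doc = organization_jsonld("Example Co", "https://www.example.com/", [
    "https://en.wikipedia.org/wiki/Example",
    "https://www.google.com/search?kgmid=/g/123",
    "https://twitter.com/example",
])
print(json.dumps(doc, indent=2))
```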

Heads up, this could lead to a manual action

In a recent help hangout, John was asked a question about recipe markups used on listicles and articles. The reason behind the question was that these listicles were showing up in the SERPs as rich results. This breaks Google’s general structured data guidelines. John said “That’s not something we should be showing rich results for. It could lead to a manual action, but might be smarter for Google to handle algorithmically”. As of now this is something not handled algorithmically, so if these guidelines are broken, it could be enough cause for Google’s Webspam team to give out a manual action.

Using recipe markup for articles or listicles & showing up in the recipe carousel? -> Via @johnmu: That's not something we should be showing rich results for. It could lead to a manual action, but might be smarter for Google to handle algorithmically: https://t.co/at4xOumxLe pic.twitter.com/x8G0eWn3Iz

— Glenn Gabe (@glenngabe) September 3, 2021

Other Interesting News

Bing content submission API now available

After two years, Bing has finally released the public version of its content submission API. Users can now send content (including HTML and images, for example) directly to Microsoft Bing. The now-public feature can help site owners reach their desired users and reduce Bingbot’s crawling load.

Local SEO - Tips

Local link building tidbits

Thanks to Liz Linder for capturing these Local U link building tidbits! First off, Greg Gifford explains that local link building is more about building on hard-earned relationships. One unique element of local SEO is that nofollowed vs. followed links, Domain Authority (DA, a metric used by Moz) and link relevance are typically less of a concern.

🚫Local link builders don't care if a link is nofollow.

📈Local link builders don't care about domain authority.

✅We build links based on real-world relationships.

These aren't always "pretty" links but they're harder for competitors to replicate.

@GregGifford #localu

— Elizabeth Linder 🌻 (@Its_Liz_Linder) August 31, 2021

So what methods can you use to help build some good, natural links? Well, here’s a great place to start:

Local link building ideas:

✅Local sponsorships

✅Local charities

✅Local volunteer opportunities

✅Local meetups

✅Local clubs

✅Local business associations

✅Specific group directories

✅Neighbourhood watch websites

✅Use (city) inurl: on Google

@GregGifford #localu

— Elizabeth Linder 🌻 (@Its_Liz_Linder) August 31, 2021

Mastering local keyword research – a free course

Here at MHC we love learning, and this course from Claire Carlile is great for exploring local keyword research concepts. It starts with the fundamentals and then dives into more advanced strategies. Here are a few of the things you’ll learn:

- Essential elements of local keyword research.

- What keyword types you should target.

- Building a keyword list.

- How to prioritize keywords.

There are 9 modules, 9 videos, and 3 quizzes that should take approximately 2 hours. If you’re a beginner who wants to learn more or are an expert who wants to have a nice refresher and learn new tips, this course covers it all.

Recommended Reading

How to Write an Author Bio: E-A-T, SEO Tips & Great Examples – Daniel Smullen

https://www.searchenginejournal.com/how-to-write-author-bios/417619/

September 1, 2021

We’re always excited to see articles on the topic of E-A-T, and Daniel Smullen has written a great one about author bios: why they matter and how to write an SEO-optimized one. Here are just a few of the tips Daniel covers for writing SEO-friendly author bios:

- Write in the third person.

- Keep things short and concise.

- Include job title and function information.

- Include your expertise. Make sure you highlight the expertise and trustworthiness related to the topic being written about.

- Include social media profiles.

- Include a good photo.

- Let your personality shine through.

- Have separate URLs for author bio pages and make sure they’re indexed.

- Use structured data.

If you’re looking to add author bios to your site, this article is a great resource with helpful examples to get you started.
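Several of the tips above can be expressed in the structured data recommended in the last bullet: the author’s name, job title, indexable bio URL, photo and social profiles. Below is a minimal sketch using schema.org `Person` properties. The `AuthorBio` class and all example values are hypothetical, and Daniel’s article may recommend a different markup pattern.

```python
from dataclasses import dataclass, field


@dataclass
class AuthorBio:
    """Hypothetical container for the bio details suggested above."""
    name: str
    job_title: str
    bio_url: str    # the separate, indexable author bio page
    photo_url: str
    social_profiles: list = field(default_factory=list)

    def to_jsonld(self):
        """Return schema.org Person markup for this author."""
        return {
            "@context": "https://schema.org",
            "@type": "Person",
            "name": self.name,
            "jobTitle": self.job_title,
            "url": self.bio_url,
            "image": self.photo_url,
            "sameAs": list(self.social_profiles),
        }


author = AuthorBio("Jane Doe", "Senior SEO Analyst",
                   "https://www.example.com/authors/jane-doe",
                   "https://www.example.com/img/jane.jpg",
                   ["https://twitter.com/janedoe"])
print(author.to_jsonld())
```

The same dictionary can also be nested as the `author` of an Article on each post Jane writes, linking every post back to her bio page.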

Semantic Relevance of Keywords – Bill Slawski

https://www.seobythesea.com/2021/09/semantic-relevance-of-keywords/

September 3, 2021

This is a detailed breakdown (as always) by Bill Slawski on data processing as it pertains to semantic relevance of keywords. Bill explains in great detail how a data processing system can include a “semantic relationship graph” that determines the degree of the relationship between separate but related keywords as well as identify semantic conflicts. There is a lot of misinformation around “LSI keywords” in the SEO industry, but Bill’s invaluable work on the subject (here and in the past) helps separate truth from fiction. Loading a page with synonyms for the keyword you are trying to rank for does not make you more likely to rank for a specific term. To state the obvious, when optimizing a piece of content for a particular topic, you should always be researching and including the depth of language a searcher would come to expect and find useful, including relevant subtopics and related concepts.

What Does it Take to Rank in Google Discover? – Lily Ray

https://www.amsivedigital.com/insights/seo/what-does-it-take-to-rank-in-google-discover/

September 7, 2021

Kudos to our friend Lily Ray for this awesome write up on ranking in Google Discover! Lily gave this presentation at a DeepSEO Conference and you can view the full slide presentation at the end of the article. While we recommend reading the full article, we especially enjoyed these five tips Lily provides:

- Content found on Discover can be very emotional in nature and speaks to the interests of the user. Think about some of the headlines you’ve seen in Discover. In the example Lily provided, some of the topics with the highest CTR had headlines about cheating scandals, celebrity meltdowns, celebrity deaths, scandalous celebrity photos and relatable relationship advice.

- While Google clearly states that click-baity headlines should not rank well, the opposite actually is evident in Discover! Headlines which are clickbaity in nature actually appear to drive traffic. Approach this with caution as you can get a manual action for exaggerated misleading titles. The content served should essentially be what the user is expecting after reading the headline.

- Although Discover is part of organic traffic from Google, it behaves very differently! A better comparison for Discover would be a social media platform. Sometimes Discover shows content the user didn’t even know they were interested in, which can explain why some Discover content does well. It is also important to note that E-A-T signals may be calculated differently than in organic search.

- Headlines in the form of questions can also yield a higher CTR and drive significant Discover traffic. Not all articles used this approach, but those that did benefited.

- Content that performs best in Discover is that which addresses a specific niche interest of an audience. Think about highly specific content. Often these are areas which can drive tremendous amounts of traffic in Discover.

Jobs

Would you like my job?

I'm hiring my permanent replacement, as Head of SEO at the FTSE 100 home improvement group, @KingfisherPLC. I will take you under my wing, show you the ropes, give you my strategy, processes and contacts, then hand you the keys. https://t.co/2ev8hMl5bj

— Rob Kerry (@robkerry) September 7, 2021

For anyone who is interested https://t.co/SorSBNQJwB Please reach out with questions! Remote USA only. Salary depends on experience and location, but happy to give more details.

— Tessa Bonacci Nadik (@tessabonacci) September 7, 2021

Centerfield is hiring for not one, but two positions! Senior Technical SEO Manager, and Senior SEO Growth Strategist.