If you're looking to understand how Google ranks content for relevance and helpfulness, especially after recent updates like the March 2024 core update, this article breaks down Google's key signals: meaning, relevance, quality, usability, and context.

To improve your SEO, focus on creating original, high-quality content that deeply satisfies user intent and demonstrates experience, expertise, authoritativeness and trustworthiness (EEAT). Make sure your main content is easy to find and genuinely valuable, and move beyond keywords to prioritize user experience and real helpfulness.

I feel like I’ve studied this so much over the last few years. Yet today, as I advised a client who was impacted by the March core update (the one Google called an evolution in how it determines the helpfulness of content), I felt I needed to review once again how Google determines what is relevant and helpful. In doing so, this article popped out! And I got ideas to help my client. 🦾

The more we understand how Google determines which results are likely to be helpful and relevant to a searcher, the better we can improve our websites so that they are likely to be that result.

I used Deep Research for ideas on how Google determines relevance and helpfulness

I gave this prompt to the Deep Research tools in all three of Grok, ChatGPT and Gemini:

Do research to find me credible information on how Google determines relevance and helpfulness. I'm looking for Google research studies and official Google documentation. I'm less interested in articles written by SEOs.

Grok pointed me to this 2018 article from Google that I found quite interesting:

My notes:

- Thanks to advances in machine learning, Google can assess high-quality content with greater accuracy.

- Neural embeddings (aka neural matching, or vector embeddings) help Google understand the underlying concepts people are searching for without relying on the specific words used.

- “So, how do you take advantage of intent signals? Search queries are a good place to start because a query’s phrasing can reveal a lot about a user’s intent.”

- “Brands that tap into those intent signals to anticipate customer needs and respond with relevant experiences are the ones that will be able to delight and inspire today’s increasingly sophisticated consumer.”

Grok also recommended Google’s announcement on BERT. Did you know that BERT is a language model? At the time it was announced I suspect most SEOs had never heard of a language model before. I hadn’t!

And of course, another very important document to read is Google’s Word2Vec paper from 2013, which explains how Google began converting words into numerical vectors.

Gemini sent me to Google’s How Search Works documentation. If you haven’t read it recently, I’d encourage you to study it again!

I have read this several times, but for some reason, today this part stood out to me:

Signals.

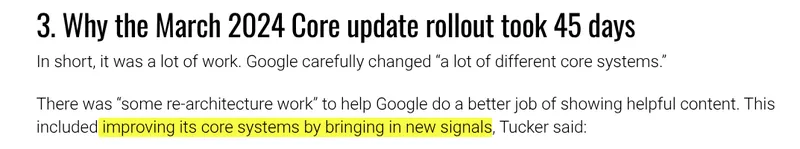

Google told us that with the March 2024 core update they brought in new signals to the core systems:

So now we know that the important signals are:

- meaning

- relevance

- quality

- usability

- context

The How Search Works document explains each of them in detail.

Meaning

Google uses language models to understand what the user really means when they type a query.

Relevance

Google’s systems analyze content to assess whether it contains information that is relevant to what the searcher is looking for. The most basic signal is whether the content contains the same keywords as the query, for example in the headings or the main body of text.

Google also uses “aggregated and anonymized interaction data to assess whether search results are relevant to queries.” In the DOJ vs. Google trial, we learned that clicks are used to train Google’s systems. For further reading, I’d encourage you to read Pandu Nayak’s testimony, or, if you don’t have time to read the full document, CTRL-F for “clicks”:

My understanding of how Google assesses relevance is that it uses traditional ranking systems to initially rank results. Then it uses vector search (the neural embeddings I mentioned earlier) to further narrow the results down to those that are likely to match user intent. Then, over time, those neural embeddings are fine-tuned based on what searchers actually choose to click on and engage with.
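Here is a toy Python sketch of that two-stage idea, written only to illustrate the concept. It is not Google’s system and should not be read as one. The assumptions: a simple keyword-count score stands in for traditional ranking, character-trigram count vectors stand in for learned neural embeddings, and the function names and sample pages are hypothetical. The click-based fine-tuning step is left out entirely.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def keyword_score(query: str, doc: str) -> int:
    """Stage 1 stand-in for traditional ranking: count query words in the doc."""
    doc_counts = Counter(tokenize(doc))
    return sum(doc_counts[term] for term in set(tokenize(query)))


def trigram_vector(text: str) -> Counter:
    """Stage 2 stand-in for a neural embedding: character-trigram counts."""
    cleaned = " ".join(tokenize(text))
    return Counter(cleaned[i:i + 3] for i in range(len(cleaned) - 2))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(a[gram] * b[gram] for gram in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def two_stage_rank(query: str, docs: list[str], k: int = 3) -> list[str]:
    # Stage 1: keep the top-k pages by keyword score, dropping pages with no match.
    candidates = sorted(
        (d for d in docs if keyword_score(query, d) > 0),
        key=lambda d: keyword_score(query, d),
        reverse=True,
    )[:k]
    # Stage 2: re-rank those candidates by vector similarity to the query.
    q_vec = trigram_vector(query)
    return sorted(candidates, key=lambda d: cosine(q_vec, trigram_vector(d)), reverse=True)


# Hypothetical pages, used only to exercise the sketch.
pages = [
    "How to train a puppy to sit",
    "Puppy training tips for new dog owners",
    "Best hiking trails in Colorado",
]
for page in two_stage_rank("puppy training", pages):
    print(page)
```

With the query "puppy training", the hiking page is dropped in the first stage because it shares no keywords, and the remaining candidates are reordered by vector similarity. A real system would use embeddings learned from data, which is what lets it match pages that use different words for the same concept.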

Machines make predictions. Then the actions of real people help improve the machines so they go on to make better predictions. I've written much more on this in my book, SEO in the Gemini Era: The Story of how AI changed Google Search.

I’ll share a bit more at the end of this article on how we can use an understanding of vector search to improve our content. However, I believe Google’s techniques for determining relevance have advanced even further than simply using vector search.

In late 2024, Google published a paper called “It’s All Relative! -- A Synthetic Query Generation Approach for Improving Zero-Shot Relevance Prediction”. This was specific to training language models to understand what’s relevant, but I don’t see why techniques like this wouldn’t be used for Search as well. In this paper, they gave an LLM a document and asked it to generate two queries for it: one relevant, and one that is related but not actually relevant. By focusing on the difference between the two, the system was better able to understand what makes something relevant.

Quality

Google says, “After identifying relevant content, our systems aim to prioritize those that seem most helpful. To do this they identify signals that can help determine which content demonstrates expertise, authoritativeness and trustworthiness.” Did you know that in 2019 the Rater Guidelines replaced many instances of “E-A-T” with the words “Page Quality”?

One signal used here is links. “One of the factors used to determine quality is understanding if other prominent websites link or refer to the content.” I'd encourage you here to think less about links in the context of PageRank, and more about links as recommendations or votes from other well-known sources in your field.

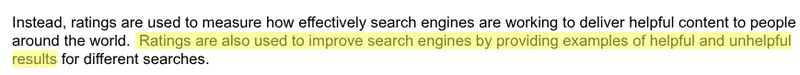

Google’s predictions of what counts as quality are also fine-tuned over time, in this case by the quality raters. “Aggregated feedback from our Search quality evaluation process helps to refine how our systems discern the quality of information.” Once again, machines predict which content is likely to be high quality and to demonstrate the E-E-A-T expected for the query, and humans judge the results, helping the machines improve.

From the rater guidelines:

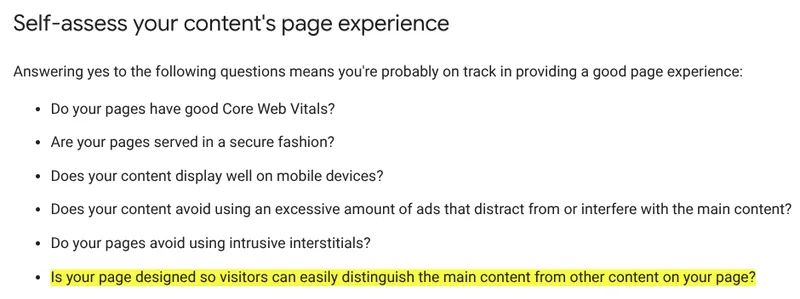

Usability

This signal is about page experience. You’re probably thinking about Core Web Vitals and page speed, which are definitely components of page experience. However, I think many people overlook this line:

Can users find the main content? The rater guidelines talk extensively about main content. It’s the part that helps the page achieve its purpose:

The most recent update of the quality rater guidelines (QRG) hints that Google wants its machine-learned systems to go even further here, identifying whether there is so much filler content that it distracts from the main content.

Context

Google’s systems use keywords along with the language used to search, the user’s location and even current events to better understand what the user wants.

ChatGPT’s Deep Research was excellent here. It goes into more detail on RankBrain, MUM and more. I’d encourage you to read it!

How can we use this information?

You’re likely reading this article because you want to know how to make your content the result Google predicts is most likely to be helpful and reliable. I’ll share some thoughts on how to do that based on what we’ve just learned.

However, there’s something important I need to say…and it’s awkward.

We are all guessing when it comes to trying to rank on Google.

There was a time when SEO was essentially a science. Do keyword research. Write good title tags. Make good use of headings. Get some links. Monitor, tweak and repeat. This simple strategy used to be quite effective.

Google’s systems have evolved immensely. Google started experimenting with turning language into numerical vectors back in 2013. BERT, which Google brought to Search in 2019, radically changed how Search works. And who knows what innovations Google has adopted in the last few years that it hasn’t published information on!

So much of the SEO advice that we give and read is based on what used to work, or what we think should work, but really it’s all guessing.

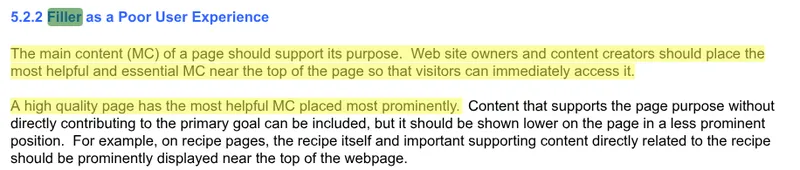

I’ve been experimenting on a few client sites and have been able to improve keyword performance in some cases.

Those are nice-looking graphs, but I have also had several cases where changes to better meet and match user intent made no difference at all. This is because Google uses many signals to rank content. I have seen many sites that used to rank well thanks to excellent SEO skills fail to succeed at all since the March core update changed how Google ranks results. If your site lacks EEAT, I suspect that tweaking your text to look better to vector search will not do much.

With all of that in mind, here are some tips and thoughts from me:

Focus more on intent rather than keywords

You can take the top keywords that are sending traffic to your page and give them to an LLM. (At the moment, I prefer using Gemini Thinking Experimental 01-21 in AI Studio.)

Prompt: Here are the keywords bringing traffic to this page. And here is the content [paste it in]. What is the main purpose of this page? What are users trying to accomplish? How can I better structure this page or add to it so that it meets those needs?

Play around with cosine similarity…maybe?

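Cosine similarity measures how similar two items in a vector space are by calculating the cosine of the angle between their vectors: the closer the score is to 1, the more similar they are. Google likely converts your content into vectors (embeddings) when it is indexed. (Tokenizing, which splits text into smaller pieces, is one step in that process.) You can use Python to turn a query and a piece of content into vectors and then calculate the cosine similarity between them. If you tweak the content to improve that cosine similarity, you should, in theory, look better to vector search systems.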

I’ve played with this. If you know even a little coding, you can ask ChatGPT to guide you through writing code that calculates the cosine similarity between a keyword and a piece of content. You can then ask for help tweaking the content so it’s more similar to the keyword.
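If you want to see the arithmetic before asking an LLM for help, here is a minimal Python sketch. It assumes simple bag-of-words vectors (word counts) rather than the neural embeddings a search engine would use, so it only rewards shared words, not shared meaning. The function names, the keyword and both content snippets are hypothetical examples. To get closer to what this article describes, you would replace `word_vector` with a call to an embedding model of your choice.

```python
import math
import re
from collections import Counter


def word_vector(text: str) -> Counter:
    """Bag-of-words stand-in for an embedding: {word: count}."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """cos(theta) = (a . b) / (|a| * |b|); 1.0 means the vectors point the same way."""
    # Counter returns 0 for missing words, so unshared words add nothing to the dot product.
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical keyword and two versions of a page, before and after a tweak.
keyword = "how to groom a long haired cat"
original = "Our salon offers pet services. Call us to book an appointment for your pet."
tweaked = (
    "How to groom a long haired cat at home: brush your cat daily "
    "to prevent mats, and book a salon visit for a full groom."
)

for label, content in [("original", original), ("tweaked", tweaked)]:
    score = cosine_similarity(word_vector(keyword), word_vector(content))
    print(f"{label}: {score:.3f}")
```

The tweaked version scores higher only because it repeats the keyword's words. A higher number in a toy script like this does not mean the page better satisfies the searcher, which is why the cautions that follow matter.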

However, we need to keep a few things in mind. First, it’s unlikely that Google is doing a simple cosine similarity check between keywords and content. Google is likely doing much more with your search query to understand intent and match it to relevant content. Also, as we discussed earlier, the vectors used for neural matching are fine-tuned, both by what Google learns from monitoring how people click and engage across the web and by the ratings of the quality raters. (Note: if a rater assesses your site, that doesn't necessarily mean your site is affected. Raters give the machine learning systems examples that help improve the systems overall.)

Looking good to vector search is one thing. What’s more important is truly having content that satisfies people better than your competitors’ content does and that is high quality as described in the rater guidelines.

With that said, I've had some measure of success using an understanding of cosine similarity to help some of my content rank in AI Overviews. Once again, though, this is a system that is continually changing, so there's no repeatable method yet that I'm aware of for ranking in AIOs.

However, in some cases, my tweaks to improve cosine similarity got my content ranking in an AIO, and then, some time later, those rankings were lost. There's more to ranking than just looking good to machines!

Pay attention to your main content

I have exercises in my QRG workbook that help you determine what your main content is and whether it is appropriately placed on your page. The title is also a part of the main content. For every page you have on your site, think, “What is the purpose of this page? How well does it satisfy a searcher who lands here?”

It can also help to compare your site against competitors. Do they do a better job of getting the searcher to the answer they are looking for?

Many sites impacted by the helpful content system and by the March 2024 core update had pages with loads of filler information that readers needed to get through to find the part that met their needs.

Do you lack EEAT?

I see so many website owners trying hard to rank content that they do not have the expertise or experience to write. I was recently contacted by a site owner who has all sorts of documents on animal health issues. They’re well written and look helpful, but when you look at the search results, you can see that the results are dominated by authoritative pet health sites.

No amount of content rewriting and reorganizing will help this site rank.

EEAT is not usually something you can fix with on-page changes. If you think you might have an EEAT issue, I have homework for you. Read the rater guidelines and CTRL-F for “known for”. The word “reputation” is in the QRG 213 times. EEAT is not about what you say about yourself, but rather what the world says about you online.

Again, my QRG workbook has exercises you can work through to help assess your site for EEAT issues. It also has a link to a GPT I created with loads of my advice and thoughts on how to improve your reputation online.

But, in some cases, it may not be possible to improve EEAT.

Be truly original, in a way searchers will find helpful for meeting their intent

Gary Illyes from Google said at a recent event, “Originality is something we're going to be focusing on this year. That's going to be important.”

The first question Google asks in its guidance on creating helpful content is, “Does the content provide original information, reporting, research, or analysis?”

Once again, I have homework for you: Read the Rater Guidelines and CTRL-F for “Originality” and also “paraphrased.”

If you are going to publish a piece of content, it needs to add to the body of knowledge on the web. It is no longer enough to publish something similar to what competitors have published, only slightly better.

Ah! There it is. I knew that writing this report would help me advise the site I’m reviewing, which has been declining since the March core update. We’re going to brainstorm together on how they can add original content on their topic. I have so many ideas here.

Here’s a good prompt for you to try in your favourite LLM:

I have a website on the topic of [topic]. I’m looking for ideas to write on that have original information, reporting, research, or analysis.

Or you can use the helpful content brainstorming GPT in my QRG workbook. It’s so good! I'm off to use it now...

If you found this helpful, you'll also enjoy my community - The Search Bar. I'd love for you to join us!

This originally started out as a Marie's Notes entry, and turned into an article.
