Google launched the disavow tool in October 2012. Its main purpose was to give webmasters who had received a manual unnatural links penalty a way to ask Google not to count the bad links pointing to their site. However, I think Google has an ulterior motive for this tool. And if my theory is correct, it is a brilliant plan that will allow Google to rid the web of a large amount of spam.

The purpose of the disavow tool

You are likely already aware of the intended purpose of the disavow tool. If a webmaster has received a manual unnatural links warning, Google wants them to jump through a number of hoops to get the penalty removed. A site owner must make thorough attempts to get these links removed, document these attempts and then request reconsideration by Google. In working with a number of sites to get their penalties removed, I have found that the success rate in getting webmasters to remove links is usually dismally low. Depending on the niche, I get about 15%-20% of the links removed, and sometimes less. The disavow tool is meant to be used to ask Google to "not count" the remaining unnatural links that are pointing to your site.

Patterns

As I work with more and more sites affected by a manual penalty, I am noticing a number of patterns. A lot of my day-to-day link audit work is a little monotonous. Often, here's what I will see...

...Ah ok...this link comes from examplearticles.com. I know it's most likely spam but I need to gather contact info so I can attempt to get the link removed....[clicks on site]...ah, I recognize this layout. This has to be one of [name redacted]'s sites. And yup...sure enough there is no contact email on the site...and the "contact us" page is blank. So, let's check the whois info. Yup. Belongs to [redacted].

I see the same names popping up all over the place on every site that I have worked with for penalty removal. And these people never respond to email requests for link removal. Really...I feel bad for them! They're probably getting thousands of emails a month asking for links to be removed. So, what happens? Those sites all get added to my disavow.txt.

There are several sites that are on the disavow.txt file for every site I work with.

They are also being added to thousands of other people's disavow.txt files.

What would Google do with this information?

If I am noticing patterns after working through a handful of reconsideration requests, what kind of patterns is Google seeing? By this point they have likely processed tens of thousands of disavow.txt files. It would be quite easy to take a look at the most commonly disavowed sites and determine which of these exist only for the purpose of creating self-made (i.e. unnatural) backlinks.
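To make the idea concrete, here is a minimal sketch of how the most commonly disavowed domains could be tallied across many disavow.txt files. It assumes the documented file format (one URL or `domain:` entry per line, with `#` for comments). The function names and the choice to count each domain once per file are my own assumptions for illustration, not anything Google has described.

```python
from collections import Counter
from urllib.parse import urlparse


def disavowed_domains(disavow_text):
    """Return the set of domains named in one disavow.txt file."""
    domains = set()
    for raw in disavow_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments name no links
        if line.lower().startswith("domain:"):
            host = line[len("domain:"):].strip()
        else:
            # A full URL entry; lines without a scheme yield no hostname.
            host = urlparse(line).hostname or ""
        host = host.lower()
        if host.startswith("www."):
            host = host[4:]
        if host:
            domains.add(host)
    return domains


def most_disavowed(files, top_n=10):
    """Count how many files disavow each domain (once per file)."""
    counts = Counter()
    for text in files:
        counts.update(disavowed_domains(text))
    return counts.most_common(top_n)


if __name__ == "__main__":
    sample_files = [
        "# spammy article sites\ndomain:examplearticles.com\n",
        "http://examplearticles.com/a\nhttp://examplearticles.com/b\n",
    ]
    print(most_disavowed(sample_files))
```

Counting once per file means a single webmaster who lists hundreds of URLs from one domain does not outweigh many webmasters who each list it once.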

It would make sense to me that Google could simply devalue all of the links that come from those sites.

If this were to happen, there would likely have to be some manual input from Google. There are probably some domains, such as blogspot.com and wordpress.com, that are improperly disavowed on a large scale, and it would be wrong to do a mass devaluation of links coming from those sites. But I could easily see it happening that, say, once a month Google produces a list of the most commonly disavowed sites and, if those sites have not already been evaluated, an employee visits them and manually assesses whether each site exists for backlink creation. If so, then, BOOM...that site doesn't pass any PR on to any of the sites it links to.
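The monthly review step could be sketched roughly as follows. Everything here is hypothetical: the `min_files` threshold, the `SHARED_PLATFORMS` set and the function name are assumptions made for illustration, and the real decision would still rest with a human reviewer.

```python
# Hosting platforms that are often disavowed wholesale but should not be
# mass-devalued (hypothetical list, taken from the examples in the post).
SHARED_PLATFORMS = {"blogspot.com", "wordpress.com"}


def review_queue(disavow_counts, already_reviewed, min_files=100):
    """Pick domains for manual review, most commonly disavowed first."""
    queue = []
    ranked = sorted(disavow_counts.items(), key=lambda kv: (-kv[1], kv[0]))
    for domain, file_count in ranked:
        if file_count < min_files:
            continue  # not disavowed widely enough to be worth a visit
        if domain in already_reviewed:
            continue  # an employee has already assessed this site
        on_platform = any(
            domain == p or domain.endswith("." + p) for p in SHARED_PLATFORMS
        )
        if on_platform:
            continue  # shared platform: needs per-blog judgment instead
        queue.append(domain)
    return queue


if __name__ == "__main__":
    counts = {"examplearticles.com": 450, "blogspot.com": 900, "tiny.example": 3}
    print(review_queue(counts, already_reviewed=set()))
```

The queue only decides which sites a person looks at; it does not devalue anything on its own.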

This may already be happening as I have noticed that a large number of the article sites that I visit in order to request link removal have had their toolbar PR stripped.

Is this connected to "crawl based link devaluation"?

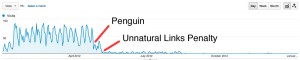

A site affected by Penguin or an unnatural links penalty will usually have a sudden drop in traffic.

I was inspired to write this post after reading Patrick Altoft's article entitled "Google Moves Towards Continual Crawl Based Link Devaluation". In this article, Patrick discusses how, in the last couple of months, many sites seem to be seeing a decline in traffic caused by devaluation of unnatural links. But this devaluation is not like the typical Penguin traffic decline, which shows up as a sudden drop that coincides with the date of a Penguin refresh. Rather, the decline is a very gradual one.

Patrick's premise is that Google is moving to a process where they devalue links one by one as they find them during a crawl. I'm not so sure though. I think it's possible that this gradual devaluation is happening as, one by one, sites that have been disavowed by a large number of webmasters get stripped of their ability to pass on PR. As a result, a site that relies on links from those sources gradually loses more and more link juice, which results in a gradual decline in traffic.
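A toy model shows why stripping linking sites one at a time would look like a slow slide rather than a Penguin-style cliff. The link values and the monthly batches below are invented purely for illustration; this is a sketch of the arithmetic, not a model of how Google actually scores links.

```python
def remaining_link_value(link_sources, devalued_by_month):
    """Total link value left after each month's batch of sites is stripped.

    link_sources maps a linking site to the (made-up) value it passes.
    devalued_by_month is a list of batches of sites that stop passing value.
    """
    devalued = set()
    history = []
    for batch in devalued_by_month:
        devalued.update(batch)
        total = sum(
            value for site, value in link_sources.items() if site not in devalued
        )
        history.append(total)
    return history


if __name__ == "__main__":
    sources = {"a.example": 10, "b.example": 10, "c.example": 10, "d.example": 10}
    # One source site is stripped each month after the first.
    gradual = remaining_link_value(
        sources, [[], ["a.example"], ["b.example"], ["c.example"]]
    )
    print(gradual)  # [40, 30, 20, 10]
```

If the same three sites were all devalued in a single refresh, the total would fall from 40 to 10 in one step, which is the sudden-drop pattern usually associated with Penguin.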

What do you think?

This is just a theory. I would love to hear what you think. Is it possible that Google is using the data obtained from the disavow tool to devalue the links from a large number of sites?

Comments

“I think it’s possible that this gradual devaluation is happening as, one by one, sites that have been disavowed by a large number of webmasters get stripped of their ability to pass on PR. As a result, the site in question gradually loses more and more link juice which results in a gradual decline of traffic.”

That would account for the decline, but a few weeks ago (I don’t have a link), JohnMu, who moderates the Crawling, Indexing, and Ranking sub-forum of the Google Webmaster Forum (http://productforums.google.com/forum/#!category-topic/webmasters/crawling-indexing–ranking/D0zuFmHDB-I), was asked in a Google Webmaster hangout if Google uses disavow submissions as the basis for lowering the PR of sites, and he said no. However, if these sites are losing their PR for other reasons, then your idea still accounts for the decline.

Another possibility that could account for the gradual decline in traffic (Searchmetrics measures what they call SEO visibility, but as that declines, so does traffic) is that the decline is caused by competitor sites that are ranking higher because they’ve taken action in response to Penguin.

For example, let’s say there are only two sites in the world, A and B. Both are hit equally hard by Penguin, but A responds quickly and gradually improves its link profile. As this happens, site A starts ranking better (and therefore taking more and more of B’s traffic) and Searchmetrics detects this as gradually declining SEO visibility for site B. Could this account for what they’re seeing?

Note this does not change their basic conclusion, that Google is now able to re-evaluate sites almost continuously. It’s just that the improving sites are driving the effect rather than Google reducing the rankings of certain sites gradually week after week.

Hi Marie,

I would have to take issue with the notion that the Disavow Tool “is a brilliant plan on the part of Google which will allow them to rid the web of a large amount of spam.”

Unfortunately, Google’s Disavow Tool is basically just a work-around which allows all of the spam to remain exactly where it is! Add to that the limitation that its data informs the discounting of links for only one search engine, and really, it is a poor substitute for actually cleaning up the problem.

This is exactly the reason we released the Webmaster portal at rmoov … to make it possible for link spam to actually BE removed.

To be honest, I think it is way past time for half-solutions and workarounds at all levels. If Disavowing links is to be the way forward for improving the quality of Search, it needs to be applied at the top level … across all search engines. Until there is a forward-thinking collaboration between all engines to achieve a solution like that, the only way to “rid the web of a large amount of spam” is to make it incredibly easy for webmasters to actually remove it.

Sha

Good points Sha. I like the idea of your new tool. If I were a webmaster who was getting piles of requests to remove spam links I would probably be using it!

I suppose it’s true that the disavow tool does nothing to keep this spam off of the web, but I do personally believe that it will be useful in getting a large number of these spammy sites out of the index.

Hi Marie

I have a website which received a manual penalty on Google. Do you also offer manual penalty removal services?

We definitely do, Marius, and we’re quite good at it. I see you have just emailed me, so I’ll respond there and we can see if we can help you get rid of your penalty.
