I had the privilege of being invited to Google I/O 2025. And boy, what an event!

Google said that AI Mode is the start of a new era, with Search being transformed with Gemini 2.5 at its core. Search is changing dramatically.

I met Google CEO Sundar Pichai and sat in on a fireside chat with Google DeepMind CEO Demis Hassabis and Sergey Brin. I’ll share more on that talk in my next article and on my YouTube channel.

In this article, we’ll talk about AI Mode and other incredibly interesting changes coming to Search, including Live Search, Personal Context, Gemini in Chrome, Agentic Search and some wild eCommerce changes.

We have a lot to learn!

AI Mode

Google called AI Mode a total reimagining of Search. It’s “Search transformed with Gemini 2.5 at its core.”

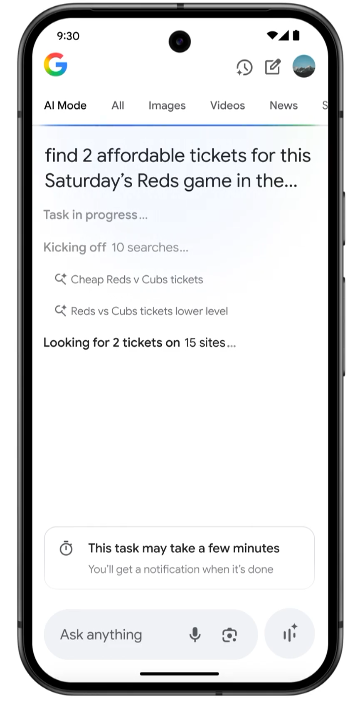

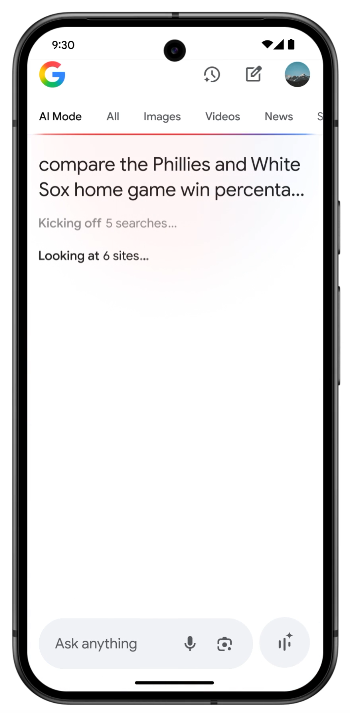

Important to know: AI Mode uses a query fan-out technique, which means it breaks a query down into subtopics, searches on each, and then compiles an AI-generated answer. It’s currently US-only, and users need to click a tab or button to access it. Users can ask follow-up questions. AI Mode uses Gemini 2.5, Google’s most capable model.
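To make “query fan-out” a little more concrete, here is a minimal toy sketch in Python. It is not Google’s implementation, which hasn’t been published. The index, the hard-coded subtopics and the function names (`decompose`, `search`, `fan_out`, `compile_answer`) are all hypothetical, chosen only to show the shape of the idea: split one query into subqueries, run them in parallel, then merge the results, consulting many sources but linking only a few.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical toy "index" (URL -> page text); illustrative data only.
TOY_INDEX = {
    "example.com/nashville-food": "best restaurants in nashville with outdoor seating",
    "example.com/nashville-music": "live music venues in nashville this weekend",
    "example.com/nashville-hotels": "hotels near broadway in nashville",
}


def decompose(query: str) -> list[str]:
    """Stand-in for the model step that splits a query into subtopics.

    Assumption: subtopics are hard-coded here; a real system would
    have a language model generate them from the query.
    """
    return [f"{query} {topic}" for topic in ("restaurants", "music", "hotels")]


def search(subquery: str) -> list[str]:
    """Toy keyword search: URLs whose text contains the subquery's last word."""
    keyword = subquery.split()[-1]
    return [url for url, text in TOY_INDEX.items() if keyword in text]


def fan_out(query: str) -> dict[str, list[str]]:
    """Run every subquery in parallel and keep the results per subquery."""
    subqueries = decompose(query)
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(search, subqueries))
    return dict(zip(subqueries, results))


def compile_answer(query: str, results: dict[str, list[str]], max_links: int = 4) -> dict:
    """Merge per-subquery results into one deduplicated source list.

    A real system would also write the answer text with a model; this
    sketch only tracks sources. "linked" simply takes the first
    max_links URLs alphabetically, a placeholder for whatever
    (unpublished) ranking AI Mode actually uses.
    """
    consulted = sorted({url for urls in results.values() for url in urls})
    return {
        "query": query,
        "searches": len(results),
        "consulted": len(consulted),
        "linked": consulted[:max_links],
    }


answer = compile_answer("nashville", fan_out("nashville"), max_links=2)
print(answer)
```

The gap between `consulted` and `linked` mirrors what you see in AI Mode: lots of sites are read to build the answer, but only a handful get a visible link.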

AI Mode ranks results differently than traditional Search.

Look at this search in AI Mode. You can see it says, “Kicking off 1 search”, and that it searched 59 sites to generate this answer. The answer is extremely comprehensive, but not all of the 59 websites are linked to: only 4 appear in the carousel.

AI Mode is not currently reported on in Google Search Console (GSC). At the moment, visits from AI Mode get sent as “no-referrer,” but John Mueller says this is a bug. It sounds like we will eventually get some sort of indication in GSC of how we are appearing in AI Mode.

Marie’s Thoughts: There’s a good chance AI Mode will replace traditional Search. I believe this has been Google’s goal from the beginning. We will need to thoroughly study how it decides which websites to show. Also, we need to keep in mind that over time, people will start seeing personalized results in AI Mode (more on this below), so tracking your presence here will be difficult.

I did find it encouraging that Google said AI Mode aims to show you, “links to content and creators you may not have previously discovered.” I do believe that truly original and insightful content can be surfaced here even without obvious brand recognition.

Additional Learning:

Expanding AI Overviews and introducing AI Mode - Google blog.

AI Mode ranks sites differently than traditional Search. It uses a “query fan-out” technique. Marie Haynes.

Study what the quality rater guidelines say about reputation and being known for your topics. This is likely one key component to ranking in AI Mode.

Google guidance on creating helpful content.

Gemini Live

Gemini Live allows you to point your phone, AI glasses or XR (extended reality) headset at anything, ask questions about it and search via AI Mode.

Important to know: Gemini Live has the potential to radically change how we search. It is coming to Search this summer.

Marie’s Thoughts: I demoed “Gemini Live” at I/O. I pointed my phone’s camera at a display Google had set up with items on a bookshelf. The Googler suggested I ask, “Which of these books would be a good summer read?” She said the system would likely first search for “what is a summer read,” then use the camera to see which books were on the shelf, search for information on those, and put an answer together for me. Apparently I should read “Thinking, Fast and Slow” this summer!

Live Search with Gemini Live will radically change how we can search for information.

In Google’s blog post on doing well in AI experiences on Search, they emphasized using high-quality images and videos in our content. I’d encourage you to look at the sites AI Mode is surfacing and see if you can find evidence of them using unique and helpful multimodal content.

Additional Learning:

Demos from Google on Gemini Live

Top ways to ensure your content performs well in Google’s AI experiences on Search

See it, solve it: Gemini Live's camera and screen sharing is now on Android and iOS for free

Mike King found what is likely a patent behind AI Mode. I can’t wait to dig into this!

Gemini in Glasses and XR (Extended Reality)

I got to demo the glasses at I/O. So very, very cool.

We weren’t allowed to shoot video, but here I am with the prototype of the glasses on. When these come to market, they’ll be made by Gentle Monster and Warby Parker, so they’ll look much nicer.

Gemini speaks to you through the arm of the glasses, and you also get a display that appears within your glasses. It is super intuitive. I didn’t see it at first, but once I refocused my eyes, it was super clear and easy to read.

I need prescription lenses, but the Project Astra team was able to take my glasses, scan them and then put a clear insert inside of the prototype. Very cool.

Eventually, you will be able to have Gemini display Google Maps for you and give you walking directions on the ground.

By the way, the first person from Google I spoke with at I/O worked on maps. We had an interesting talk. IYKYK. 😛

I don’t know how we prepare for this new way of searching, other than to work perhaps on having good imagery on our sites and thinking about the new types of questions people will start asking. Meta’s Mark Zuckerberg has predicted that glasses will replace mobile phones. Perhaps one day our grandchildren will marvel at how we all walked around staring at a small device in our hands.

Personal Context

Personal Context in AI Mode will offer personalized suggestions based on your past searches, Gmail and other Google products. This is for opted-in users only.

For example, if you search for “things to do in Nashville this weekend with friends, we’re big foodies who like music”, AI Mode can show you restaurants with outdoor seating based on your past restaurant bookings and searches.

Important to know: Personalized searches will make keyword tracking difficult. My search results will be different from yours.

Marie’s Thoughts: When I heard about Personal Context, I immediately thought of this line from Google’s helpful content documentation: “Is this the sort of page you'd want to bookmark, share with a friend, or recommend?” It will become increasingly important to develop a loyal following and to create things so helpful that people want to revisit your website. We do not yet know to what extent Personal Context will be used in shaping Google’s search results.

Additional Learning:

Personal context in AI Mode, for tailored results. Google Blog.

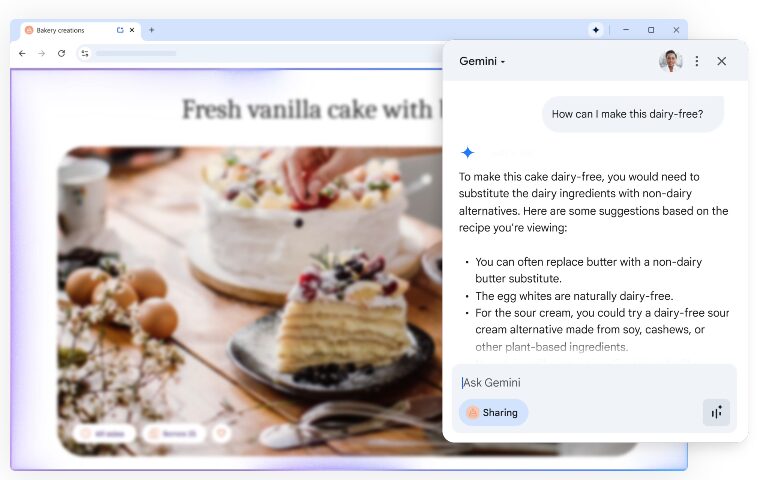

Gemini in Chrome

Soon, Gemini will be available for use on any page in the Chrome browser. You can use it to summarize pages and more.

(thanks Glenn Gabe for the above image)

Important to know: Gemini in Chrome will let you turn any piece of content into something personalized for you. The obvious use is to have it summarize content, but you can also use Gemini Live to talk with Gemini about any page you are on. Google gave these examples:

- On a page about ideas for family game night, you can use Gemini to ask, “which of these games are best for large groups?”

- You can ask Gemini to simplify a complicated topic discussed in an article.

- You can ask for modifications to recipes.

- You can ask Gemini to summarize reviews on a page.

- You can ask Gemini to create a practice quiz on the content you see.

Gemini in Chrome is rolling out this week for US English Chrome users on Windows and macOS. Google says, “In the future, Gemini will be able to work across multiple tabs and navigate websites on your behalf.”

Marie’s Thoughts: I don’t know if we will have any reporting on whether or how people are using Gemini in Chrome with our websites. I feel like this will change how we absorb content and probably change how we write it. For example, if I’m writing a post about assessing a traffic drop, I might include suggestions for those who have Gemini in Chrome on what to prompt, such as, “Ask Gemini in Chrome to customize this method for your site.”

Additional Learning:

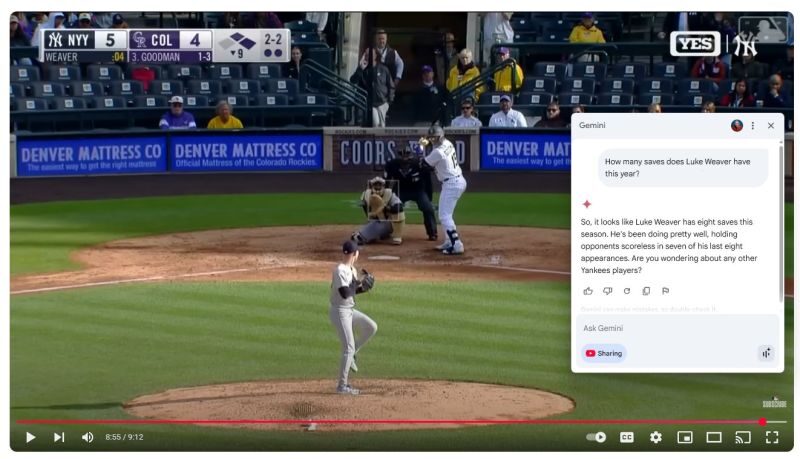

Project Mariner / Agent Mode

Project Mariner will use AI to accomplish tasks for you in your browser. This is a big deal.

Important to know: Project Mariner will do tasks for you in up to ten browser windows at a time. It will have a feature called “teach and repeat.” It is currently available only in the US to subscribers of the new $249.99/month Ultra plan, but will be available more broadly this summer.

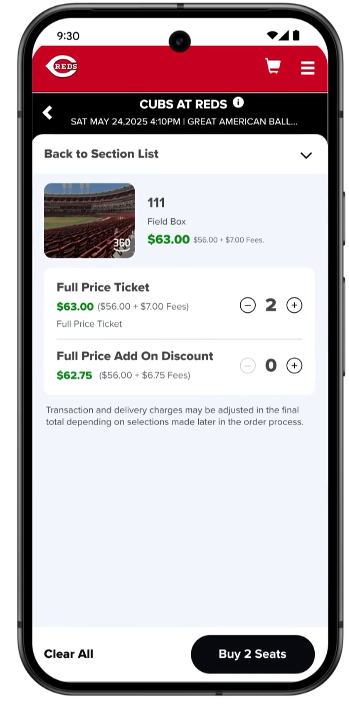

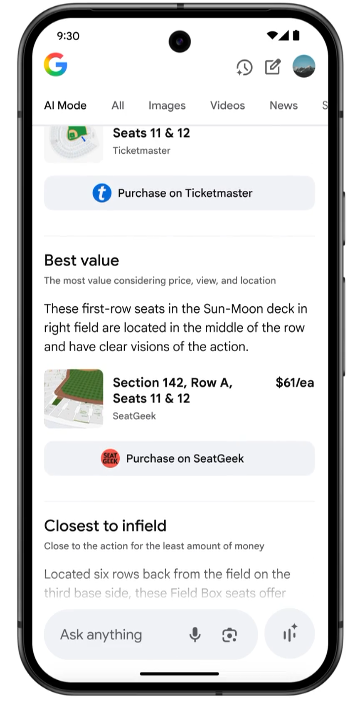

Some features of Project Mariner will be available within AI Mode in “Agent Mode.” The example given was purchasing tickets for a sports event.

We’ll see this first for sports events, restaurant reservations and local appointments. Google is working with companies like Ticketmaster, StubHub, Resy and Vagaro. They did not share how other providers can be added as sources for this agent to draw from.

Marie’s Thoughts: I demoed Project Mariner at Google I/O and boy, does it have a lot of potential. The Google rep agreed that I should be able to do what I want to do with it: take my newsletter and publish it on my website, then go to ConvertKit and prepare an email for me to send out, then go to my social media profiles and share about it. He said that essentially anything I can do in a browser, I can teach Project Mariner to do for me. We will be able to teach it “repeatable tasks” to remember and do when needed.

I imagine Project Mariner will be incredibly useful for client work. I wonder if it will do searches in AI Mode and report back on “rankings” for us?

Agent Mode uses MCP (Model Context Protocol) to access agents on the web. Here’s a bunch more on MCP in the Search Bar.

I predict that eventually our personal agents will be doing more browsing of the web than we do. Here’s more on how Google’s Agent2Agent protocol is set to radically change how the web works.

Additional reading:

Agentic capabilities, to do the work for you. Google blog.

Google is bringing an “Agent Mode” to the Gemini app. The Verge.

Custom charts and graphs, for visualizing data

This AI Mode feature will analyze complex datasets and create graphics for you.

Important to know: You can ask questions in AI Mode and Google will use AI to bring together data from multiple sources and generate visualizations for you.

Marie’s Thoughts: These custom visualizations look quite helpful for searchers, but I fear they will be detrimental to many websites. For example, let’s say I’m searching for the best credit cards for me or the best player to put in my QB spot for fantasy football. Normally those searches would end up on websites. Now, I’ll have very helpful information right in the Search results, collated for me, made in chart form, and available for me to ask questions about.

I can see this being exceptionally helpful when combined with personalized results.

Additional reading:

Custom charts and graphs, for visualizing data. Google blog.

Deep Search in AI Mode

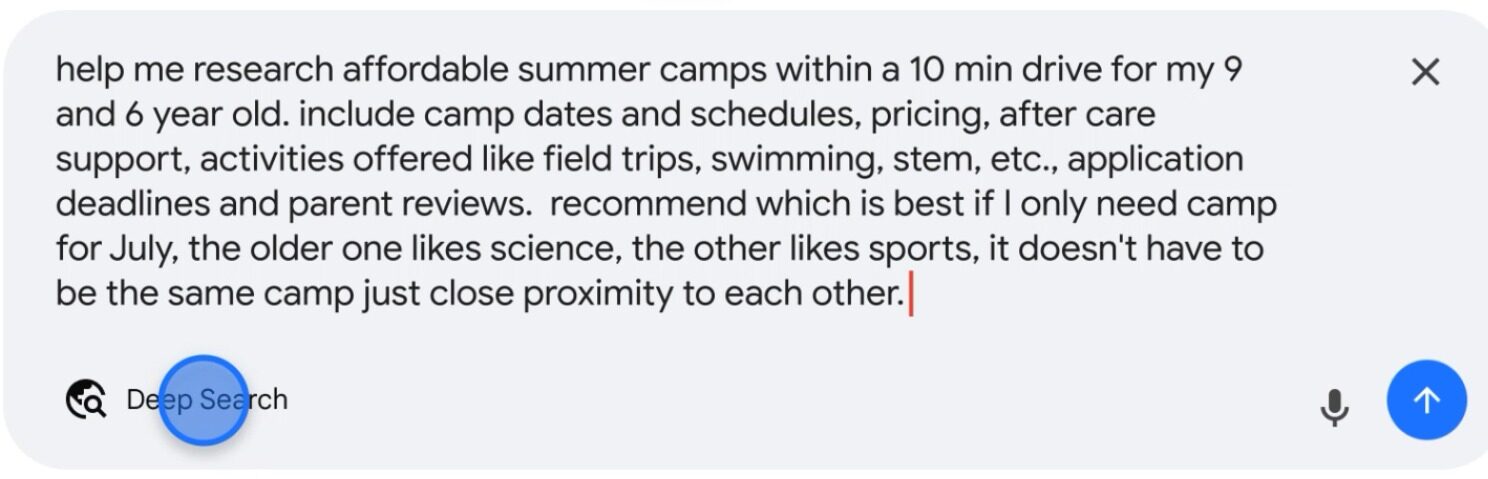

You have likely heard of Deep Research in the paid products for ChatGPT and Gemini. Soon, a similar product called Deep Search will be coming to AI Mode, right within the Search results.

Important to know: Deep Search uses the same query fan-out technique as AI Mode, but takes it to the next level. It can issue hundreds of searches, reason across disparate pieces of information, and create an expert-level, fully cited report in just minutes. This sounds very similar to Deep Research, except that it is available from AI Mode in Search.

Marie’s Thoughts:

Deep Research in both Gemini and ChatGPT is extremely useful. I find myself using it for personal searches, like finding a good birthday present; for business searches, like “find me examples of businesses who are doing this thing I want to do to give me insight into how to do it and what I can charge”; and for client searches, like “Research the reputation of [client]. What are they known for? What potential reputation concerns do they have and how can they improve?”

In some cases, I think it will be good to be mentioned in Deep Search. When I was using Deep Research to learn how to make “worm tea” from our compost’s worm castings, I read through every site linked to in Gemini’s Deep Research report. However, in many cases, I think searchers are unlikely to click through.

Additional Learning:

Deep Search in AI Mode, to help you research. Google Blog.

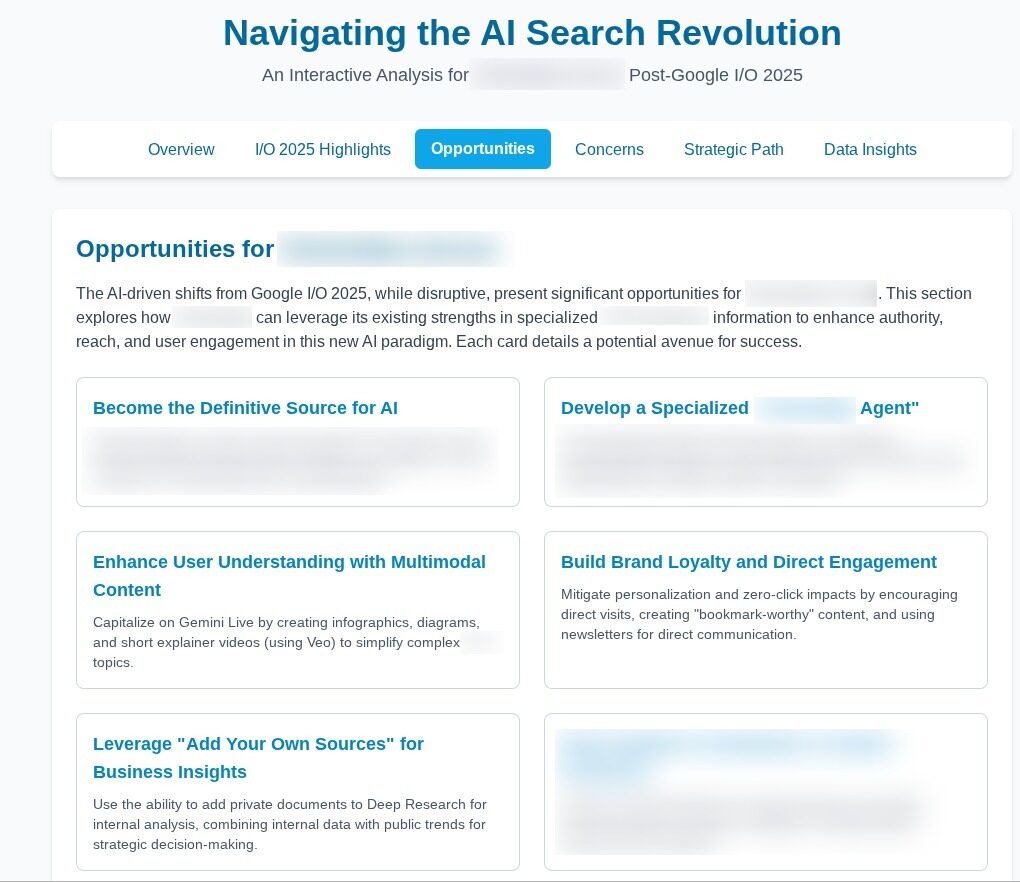

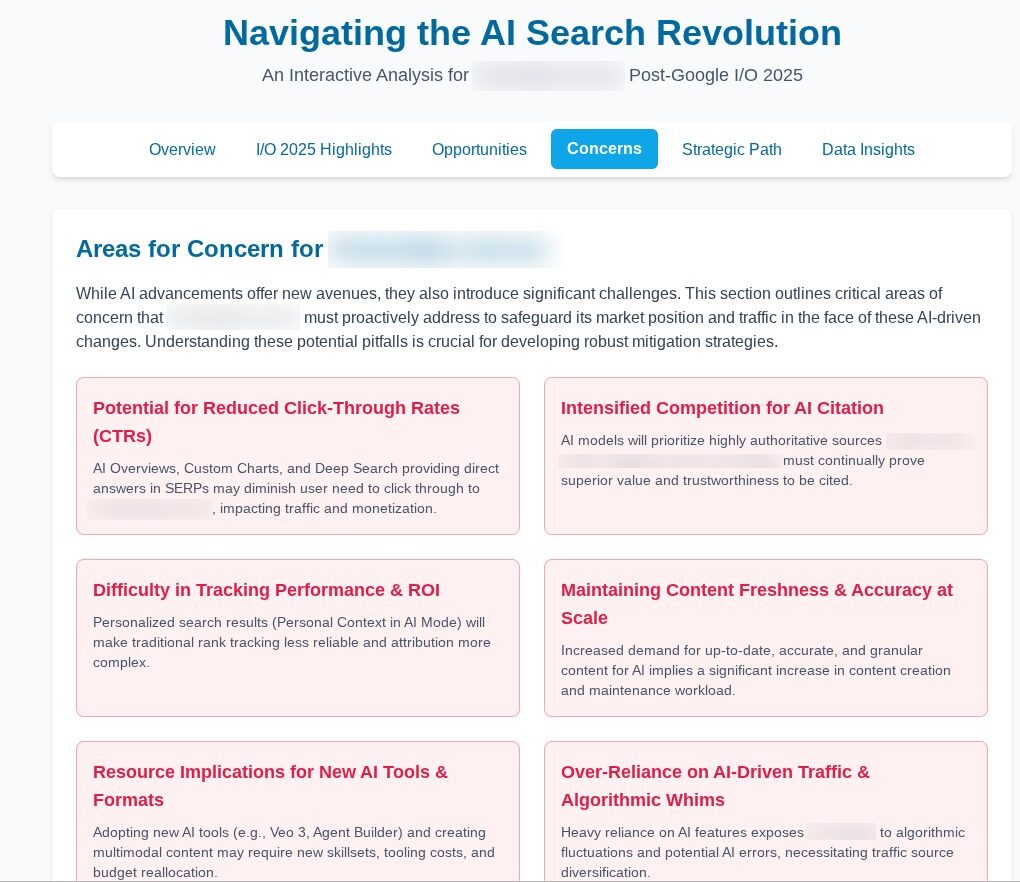

Add your own sources to Deep Research

Gemini’s Deep Research is so good. Now you can add your own documents from Google Drive to a Deep Research report.

Important to know: You can now make customized Deep Research reports that combine public data with your own private PDFs, documents and images. Eventually, we will be able to pull information in from Gmail as well.

Marie’s Thoughts: There is so much we can do with this! Google suggests we take internal sales figures in PDFs and ask Deep Research to cross-reference them with public market trends. Or, an academic can pull in hard-to-find journal articles to enrich their literature review.

After writing this article, I gave it to Gemini along with a few monthly reports for one of my clients. I then asked for a Deep Research report specific to this client to help them understand how the changes announced at I/O could impact them. It made a thorough document, which was a little verbose, so I will need to experiment with better prompts. Then it suggested we make an interactive website out of this data. It is now on a special URL on my site for this client to see. Also, with one click, I made a podcast to explain the changes to this client.

This was done with a quick prompt. I will refine this and make even better client reports from it.

Once we have Deep Research in Gmail, I can see all sorts of possibilities.

Additional learning:

Get deeper insights: now add your own sources to Deep Research.

eCommerce

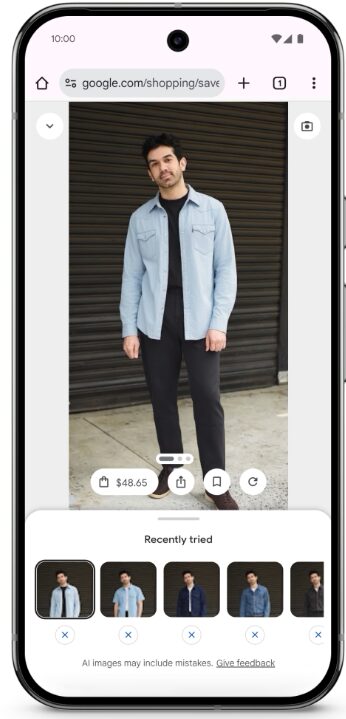

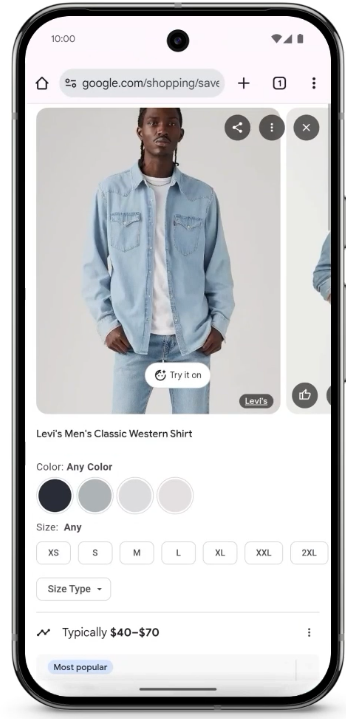

Try it On

Google’s new Try it On will change how we shop for clothing and encourage more people to buy online.

Important to know: You will be able to give an image of yourself from your camera roll to Gemini and it will show you what the clothing from a website looks like on you.

This feature is powered by the Shopping Graph (Google’s knowledge graph for products) and a custom image generation model that understands the human body and the nuances of clothing.

You can test this feature in Search Labs. It’s only available in the US right now. The service is available to any merchant with a shopping feed who is eligible to show free listings.

Additional reading:

Here’s how to use Google’s new “try it on” feature. Google blog.

If you are a clothing merchant, check out the requirements here.

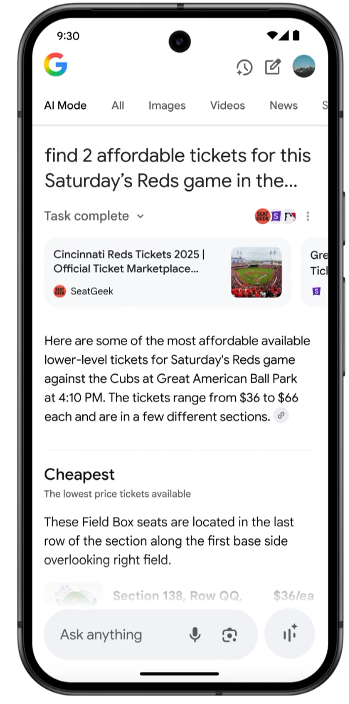

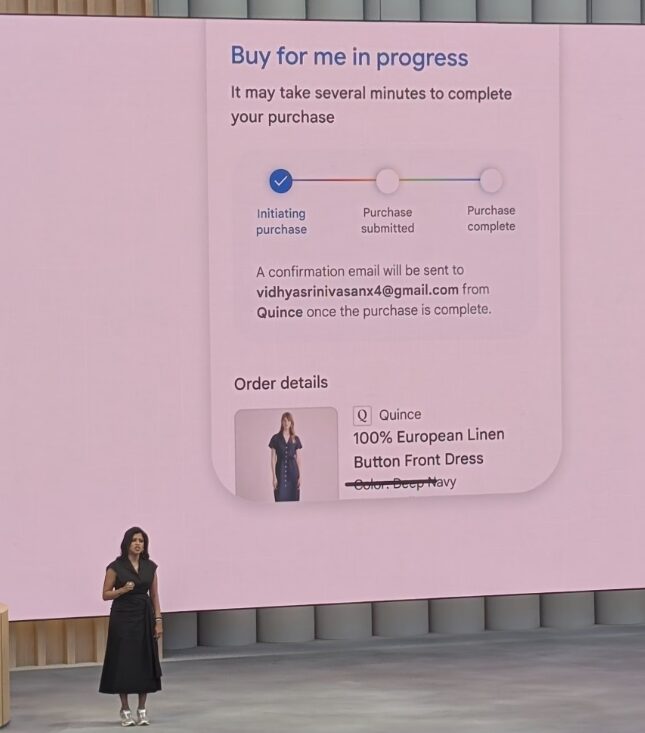

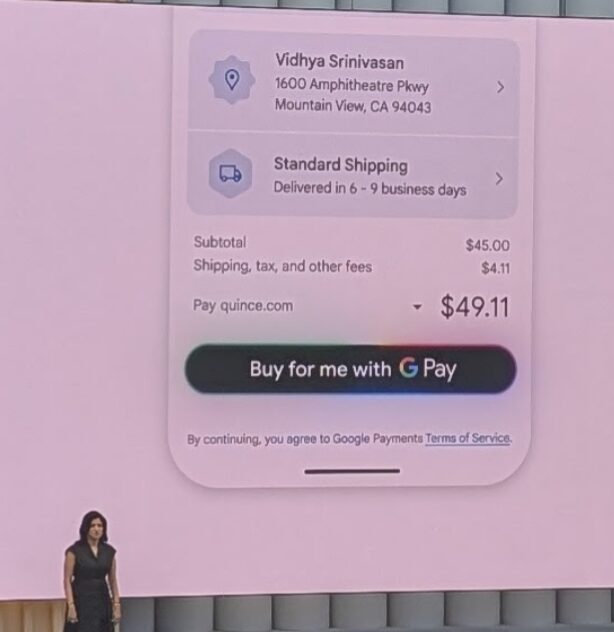

Agentic Checkout: Buy for me with Google Pay + Price tracking

Whoa. This has the potential to significantly change how people shop online.

Important to know: Google introduced “agentic checkout.” Once you’ve found a product you want to buy, you can tap “track price” on its product listing. You can set the size, color or whatever options you want, and your buying agent will keep an eye on the product and notify you of price drops.

When the price drops, you can tap “buy for me,” and Google will add the item to your cart and check out on your behalf with Google Pay.

This is rolling out in the coming months to product listings in the US.
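Google hasn’t published how agentic checkout works internally, but the price-tracking trigger is easy to picture. Here is a minimal, hypothetical Python sketch of just that step: each tracked item pins a product plus the options you chose (size, color) and a target price, and a check flags the ones whose current price has dropped to or below the target. `PriceWatch` and `triggered_watches` are invented names for illustration, and the sketch covers only the trigger, not the Google Pay checkout.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PriceWatch:
    """Hypothetical "track price" request: product, chosen options, target price."""
    product_id: str
    options: tuple[tuple[str, str], ...]  # e.g. (("size", "M"), ("color", "blue"))
    target_price: float


def triggered_watches(watches, current_prices):
    """Return (watch, price) pairs whose current price is at or below the target.

    current_prices maps (product_id, options) -> price. A missing entry
    is treated as "variant unavailable", so it never triggers.
    """
    hits = []
    for w in watches:
        price = current_prices.get((w.product_id, w.options))
        if price is not None and price <= w.target_price:
            hits.append((w, price))
    return hits


watches = [
    PriceWatch("jacket-123", (("size", "M"), ("color", "blue")), 80.0),
    PriceWatch("boots-456", (("size", "9"),), 120.0),
]
prices = {
    ("jacket-123", (("size", "M"), ("color", "blue"))): 74.99,
    ("boots-456", (("size", "9"),)): 135.00,
}

for watch, price in triggered_watches(watches, prices):
    # prints: Price drop: jacket-123 is now $74.99
    print(f"Price drop: {watch.product_id} is now ${price:.2f}")
```

Notice that the watch is tied to one exact variant. For merchants, that is the point: once the agent is watching, the only thing that moves it is the price on that variant.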

Marie’s Thoughts: There are significant implications that come with this feature. Merchants will compete on price. I suppose that’s a good thing for purchasers?

Many of the things we optimize for as SEOs, such as improving forms, optimizing for adding to cart and more, will mean very little compared to simply having products at the lowest price with the best shipping and refund policies.

I can picture this feature being very popular when combined with Live Search. “Hey Gemini… what’s this bug on my plant?” “Hey Marie… looks like you have aphids. Want me to find a good product for you?” Gemini shops for me and chooses the product with a good price and the fastest shipping. And I didn’t even touch my computer or phone. Speaking of which… let’s talk about Wing.

Wing delivery

Google only mentioned “Wing” in discussing how they helped Walmart deliver supplies for disaster relief. However, it’s not hard to imagine that one day we could have home delivery via Wing. At I/O I had a “pie in the sky”, a delicious fruit tart that was delivered to the event via drone. Wild. We are living in the future.

Veo 3

Google’s video generation model, Veo, is incredible. With Veo 3, you can now add voices and sound effects. You can also use Google Lyria to add unique music.

Important to know: Veo 3 is almost good enough to convince people it’s real. We are entering an age where it will be hard to know what’s real! Google introduced Flow, a video editor for Veo, but you’ll need a paid Google AI account to use it. For now, Veo 3 requires the super expensive Ultra plan, which is $249.99/month.

Marie’s Thoughts: Veo 3 will revolutionize how content is created online. I attended a fireside chat with Demis Hassabis and filmmaker Darren Aronofsky in which Aronofsky shared how Veo was allowing his team to be incredibly creative.

This entire video, including the sound effects and voices, was made with Veo 3.

Additional Learning:

Fuel your creativity with new generative media models and tools. Google blog.

Darren Aronofsky and Demis Hassabis on storytelling in the age of AI.

New AI Plans

Now, on top of the $19.99/month Google AI Pro plan, there is a $249.99/month Google AI Ultra plan. I know that seems ludicrously expensive. However, Project Mariner is only available on Ultra right now. Once I am able to use Project Mariner, I can easily see it saving me many hours each month, making it well worth the money.

The Ultra plan has the highest level of access to the latest models, plus access to Flow (the video-making tool), Veo 3 (with sound) and Project Mariner.

The Ultra plan is only available in the US for now, but other countries will be added soon. I will be signing up as soon as it is ready in Canada.

Additional reading: https://gemini.google/subscriptions/

More interesting things to know from I/O

Google Beam (formerly Project Starline) uses AI and light to make people look 3D on video calls.

We will be able to do live translation with Google Beam and also in Google Meet.

Google showed off a new type of model, Gemini Diffusion. Instead of generating text one token at a time, it generates outputs by refining noise step by step. It is incredibly fast: 5x faster than what we have today. You can use this link to join the waitlist.

Jules is a new development agent that connects with your codebase and accomplishes tasks with direct export to GitHub. It looks quite good!

Gemini 2.5 Pro Deep Think is an enhanced reasoning mode for Gemini 2.5 Pro. It uses parallel thinking techniques, resulting in incredible performance.

Google is working on creating a full world model. They say it is a critical step in becoming a universal AI assistant, and that this is their ultimate vision for Gemini.

Lyria 2 delivers high-fidelity music and professional grade audio from a prompt. Join the waiting list here.

Stitch lets you create app designs and front-end code from a prompt. Once done, you can copy your designs to Figma. I’d encourage you to try this out!

Google’s vision for building a universal AI assistant

It was such an honour to be in the same room as Demis Hassabis, co-founder of Google DeepMind, and Sergey Brin, co-founder of Google, as they shared their vision for the future. I will be creating more content on what I learned from their conversation.

I wanted to end this blog post with a link to Google’s blog post on their vision for building a universal AI assistant. This new era that we have entered this week is taking us towards Google’s ultimate goal of becoming a universal assistant.

Google is not alone in this venture. Also this week, OpenAI announced it is joining forces with a company called… can you believe it… “io.” Jony Ive, the man who designed the iPhone and the MacBook Pro, will be working alongside Sam Altman and team to create new devices that completely reimagine how we use computers and become our personal companions and assistants.

We have some interesting times ahead!

Marie

Exercises for Search Bar Pro Members

This week there is a special edition of Marie’s Notes with exercises to help you learn new skills we will need as AI changes Search:

- Study AI Mode (including a spreadsheet, instructions and prompts to use)

- Prepare for Live Search

- Prepare for Personalized Search

- Experiment with Deep Research

- Play with Veo

- Dig into the AI Mode patent discovered by Mike King

If you are serious about learning about AI as it changes the world, we want you to join us in the Search Bar Pro area! Your $42/m ($480/year) membership includes tips, strategies and prompts from me, Marie's Notes, community discussion, tutorials and twice monthly Search Bar Happy Hour meetings with me as we help each other learn and succeed with AI.

Got questions?

Join me on Thursday, May 29, 2025 at noon ET for a free live podcast episode where we'll talk about everything discussed in this article.
