During MozCon 2015, I sat in at the Google Penalty birds of a feather table and had some great conversations on penalties and algo changes. I was interested when Michael Cottam shared a story with us. He said that a client of his was doing some work on a Penguin-hit site. When they used the fetch and render feature of Google Search Console and resubmitted a page to the index, they saw a dramatic increase in rankings for that page. That increase was short-lived, however, lasting only about 15 minutes.

Still, my thought was that if this worked for other sites, it might be a good test that we could use to help people determine whether their site was being affected by an algorithm such as Panda or Penguin.

I set up some experiments

I’m going to give away the ending of the story early. Unfortunately, this did not work for me. I feel it’s important to share it, though, in case this failed experiment helps someone who is considering trying the same thing in the future.

Method

I contacted 11 site owners with whom I had consulted in the past. Each of these businesses is eagerly awaiting either a Panda refresh or a Penguin refresh. I asked each of them if I could have permission to use fetch as Googlebot to fetch and render a page and then submit it to the index. All of them eagerly agreed.

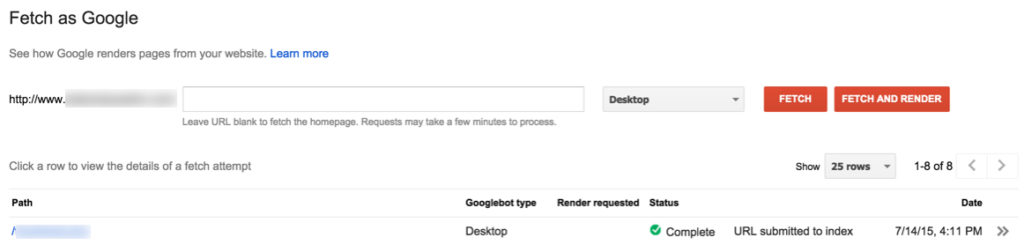

For each site, I found a search term for which they really should be ranking well but for which they had very poor rankings. I did a non-personalized, incognito search and took a screenshot of the results page containing their listing. Then, I asked Google to fetch and render the page and submit it to the index.

For half of the sites I did “fetch and render” and then submit. For the other half I simply did a fetch and then submit. For some sites I used the home page, and for others an inner page.

Then, after each submission, I checked rankings several times over the following 30 minutes. Rankings were checked via an incognito non-personalized search.
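For anyone who wants to repeat this, it helps to log each check the same way so that a short-lived jump is not missed. Here is a minimal sketch in Python, assuming the positions are read off by hand from incognito searches. The names `RankCheck` and `summarize` are hypothetical and are not part of any Google tool, and the sample readings are made up for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class RankCheck:
    minutes_after_submit: int   # 0 = baseline taken before submitting
    position: Optional[int]     # 1-based organic position, None if not found


def summarize(checks: List[RankCheck]) -> dict:
    """Compare the baseline position with the best position seen afterwards."""
    baseline = next(c.position for c in checks if c.minutes_after_submit == 0)
    later = [c.position for c in checks
             if c.minutes_after_submit > 0 and c.position is not None]
    best = min(later) if later else None
    # A positive improvement means the page moved up (smaller position number).
    if baseline is not None and best is not None:
        improvement = baseline - best
    else:
        improvement = None
    return {"baseline": baseline, "best_after": best, "improvement": improvement}


# Hypothetical readings for one page, not data from this experiment.
checks = [RankCheck(0, 34), RankCheck(5, 34), RankCheck(15, 33), RankCheck(30, 34)]
print(summarize(checks))
```

An improvement of one or two positions in a log like this would be ordinary fluctuation. The effect Michael described would show up as a much larger number that disappears again within the 30-minute window.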

Results

Sadly, nothing changed. I had one site where the results flipped continually from the top of page 4 to the bottom of page 3, which I think was not related to my URL submission at all.
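One reason to treat that flip as noise: assuming ten organic results per page (an assumption, since the count can vary), the bottom of page 3 and the top of page 4 are adjacent positions. A small sketch with a hypothetical helper, `page_of`, shows the arithmetic:

```python
RESULTS_PER_PAGE = 10  # assumption: ten organic results per results page


def page_of(position: int, per_page: int = RESULTS_PER_PAGE) -> int:
    """Return the 1-based results page that a 1-based position falls on."""
    if position < 1:
        raise ValueError("position must be 1 or greater")
    return (position - 1) // per_page + 1


print(page_of(30), page_of(31))  # prints "3 4"
```

Under that assumption, a move of a single position is enough to cross from one page to the next.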

Conclusions

Well, it was worth a try. 🙂 I decided to publish this article even though the results did not support my hypothesis.

It’s possible that Michael’s client was seeing personalized results. It’s also possible that this was a bug and Google has fixed it. It’s conceivable, too, that some of these sites are not actually under algorithmic demotion, but a couple of them have been confirmed as Penguin- or Panda-hit by Google webspam team members.

I would love to hear your thoughts in the comments section. Is there anything I could have done differently to replicate Michael’s client’s findings?

Comments

Thanks for doing the detailed testing on this, Marie. I’m wondering also if perhaps this WAS working a while ago simply because of the timing of some of the internal data movement processes in Webmaster Tools and the core index, and those have either been made more real-time, or that changes in WMT don’t have any immediate effect on the index any longer.

Thanks for commenting, Michael. I’m thinking this was some kind of a glitch and then Google caught on to the process and squelched it. It was worth a try though!