Key Takeaways

- Google Relies on User Signals: Google's ranking systems heavily depend on user interactions (clicks, engagement) rather than deeply understanding content itself.

- Bias Towards Known Brands: User behavior favors established brands, potentially overlooking better but less-known sources.

- Accuracy Isn't Directly Measured: Current systems use proxies like E-E-A-T but don't inherently verify factual accuracy.

- Need for Unique Value/Information Gain: Less-known sites need to offer clearly superior or different value to overcome brand bias and content similarity.

Why is this page ranking poorly behind one with less helpful / accurate information?

We’ve been digging into the treasure trove of information given to us in the DOJ vs Google trial exhibits and testimonies to understand more about how Search works. (The afternoon of day 24 is the Pandu Nayak testimony that I can’t stop reading and re-reading.)

In this article I want to share my thoughts on Google’s understanding (or really, lack of understanding) of the accuracy of our content. We'll look at a page from WalletHub.com that is faring poorly in Google's systems despite potentially having more accurate and helpful information. I'll share my thoughts on why this is happening, and why I think Google may get better at understanding the accuracy of content on pages.

Let’s start by looking at this fascinating trial exhibit, a Google slide presentation from December of 2016 called Q4 Search All Hands.

It tells us how Google doesn’t understand documents, but rather they fake understanding by studying the actions of searchers.

Google fakes understanding by studying the actions of searchers

They then go on to explain how search works: (bolding added by me)

“Let’s start with some background…

A billion times a day, people ask us to find documents relevant to a query.

What’s crazy is that we don’t actually understand documents. Beyond some basic stuff we hardly look at documents. We look at people. If a document gets a positive reaction, we figure it is good. If the reaction is negative, it is probably bad.

Grossly simplified, this is the source of Google’s magic.”

Wow.

Positive and negative reactions

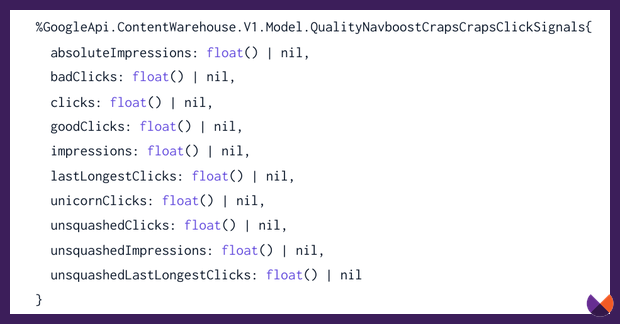

We’ve been learning about Google’s Navboost system. We looked at some of the attributes this system uses including goodClicks and badClicks.

This system stores every query searched and memorizes how the searcher interacted. It’s trying to understand whether a search was satisfied so it can predict even more satisfying search results.
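To make this concrete, here is a toy sketch of how a click-feedback score could be built from good and bad clicks. Only the attribute names goodClicks and badClicks come from the trial material. The smoothing formula, the function name `satisfaction_scores`, the URLs and the click counts are all my own assumptions for illustration, not Google's actual implementation.

```python
from collections import defaultdict

QUERY = "best rewards credit cards"

# Invented click log: (query, url, was_good_click). Not real data.
click_log = (
    [(QUERY, "known-brand.example/cards", True)] * 8
    + [(QUERY, "known-brand.example/cards", False)] * 2
    + [(QUERY, "lesser-known.example/cards", True)] * 1
)


def satisfaction_scores(log, prior_good=1.0, prior_bad=1.0):
    """Return a smoothed share of good clicks for each (query, url) pair.

    The priors are a hypothetical smoothing choice: pages with little
    click history stay close to a neutral score of 0.5.
    """
    counts = defaultdict(lambda: [0, 0])  # [good_clicks, bad_clicks]
    for query, url, was_good in log:
        counts[(query, url)][0 if was_good else 1] += 1
    return {
        key: (good + prior_good) / (good + bad + prior_good + prior_bad)
        for key, (good, bad) in counts.items()
    }


scores = satisfaction_scores(click_log)
for (query, url), score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{url}: {score:.2f}")
```

In this made-up log the lesser-known page has a perfect record, yet it still scores below the known brand because it has so little click history. That is the kind of brand bias a purely interaction-driven system can produce.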

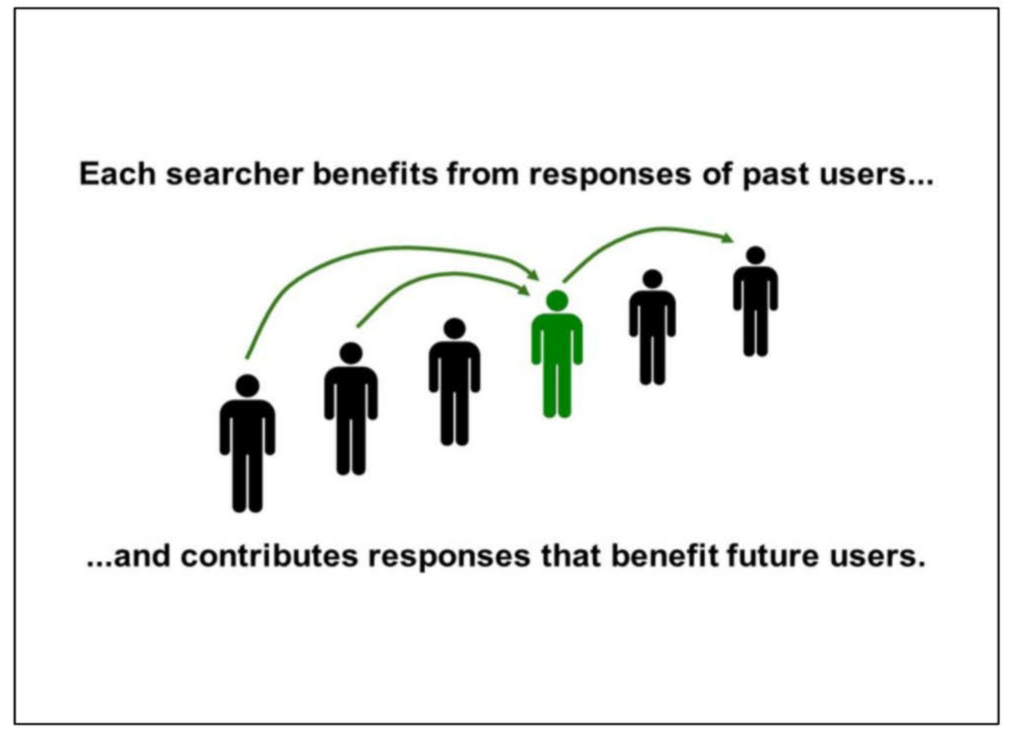

The presentation tells us that every search we do is influenced by past searches - and influences future searches.

They say, “We have to design interactions that allow us to LEARN from users,” and “sustain the illusion that we understand.”

The obvious concern with a system that relies so heavily on the actions of users is that it’s going to be swayed by users’ preference for known brands. People click on what they recognize.

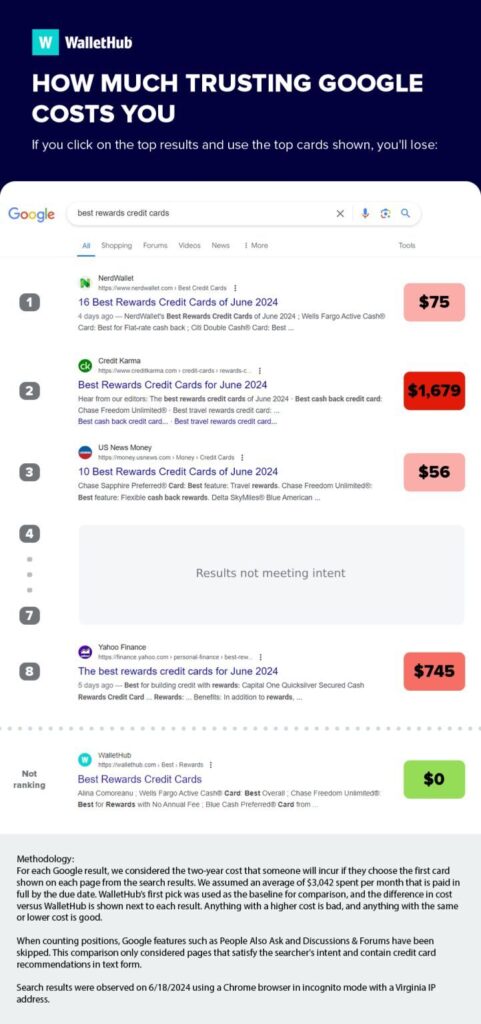

The inspiration for me to write this article came from reading this post by WalletHub. I think Google’s system of memorizing the actions of searchers without actually understanding the content itself makes the rankings for "best rewards credit cards" less accurate and helpful. I also think that this type of problem could improve soon.

WalletHub study on how much trusting Google costs you

WalletHub did a search for “best rewards credit cards” and showed that if you took the advice of the sites Google is ranking, the cards they are recommending clearly are not in your best interest! The numbers on this infographic represent how much it would cost you after two years of regular use, as compared to the card WalletHub recommends.
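If you want to see what a "cost after two years" comparison could look like, here is a minimal sketch. WalletHub's actual methodology is not described in this article, so the model (sign-up bonus plus rewards minus annual fees), the `Card` fields and every number below are assumptions I made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Card:
    name: str
    annual_fee: float     # dollars per year
    signup_bonus: float   # one-time dollars
    rewards_rate: float   # fraction of spend returned as rewards


def two_year_net_value(card: Card, annual_spend: float) -> float:
    """Assumed model: bonus + two years of rewards - two years of fees."""
    rewards = 2 * annual_spend * card.rewards_rate
    return card.signup_bonus + rewards - 2 * card.annual_fee


def cost_vs_baseline(card: Card, baseline: Card, annual_spend: float) -> float:
    """How much worse off you are with `card` than with `baseline`."""
    return two_year_net_value(baseline, annual_spend) - two_year_net_value(
        card, annual_spend
    )


# Hypothetical cards and spending, not real offers.
recommended_elsewhere = Card("Card A (hypothetical)", 95.0, 200.0, 0.01)
baseline_pick = Card("Card B (hypothetical)", 0.0, 200.0, 0.02)

extra_cost = cost_vs_baseline(recommended_elsewhere, baseline_pick, 20_000.0)
print(f"Two-year cost of choosing Card A over Card B: ${extra_cost:,.2f}")
```

A positive result means the first card leaves you worse off than the baseline over the two years.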

Let’s walk through these search results like a searcher. I think there’s a lot we can learn here by putting ourselves in the shoes of a searcher.

In this video we look at what information gain each post offers and why a searcher might choose one result over the other. I would argue that WalletHub and Credit Karma meet the same intent. And given that Credit Karma has much more brand recognition, searchers are likely going to gravitate towards it. There’s little reason to rank WalletHub for this query if we believe that the actions of searchers are the primary determinant of rankings.

But for a Your Money or Your Life (YMYL) query as important as one about credit card applications, should Google not prioritize accuracy over popularity?

I think Google will get better at knowing which content is factually correct

As we have just discovered, Google doesn’t actually know whether the content on these credit card pages is accurate. They don’t know which sites are recommending credit cards that really are the best choice and which ones are making their recommendations based on their potential affiliate commission.

For years, the PageRank metric was Google’s primary defense here. A page that comes from a brand with an authoritative and trustworthy backlink profile is much more likely to be trustworthy than one without.

Over time, E-E-A-T was developed to help improve Google’s understanding of which sites were qualified to share information on YMYL topics. In their guide to how they fight disinformation they say, “Our ranking system does not identify the intent or factual accuracy of any given piece of content. However, it is specifically designed to identify sites with high indicia of expertise, authority, and trustworthiness.”

But still, this doesn’t tell them whether content on pages is actually likely to be accurate. The system is still likely saying, “Well, lots of people click on this Credit Karma page and go on to find it helpful. And we trust the brand, so let’s show it to searchers!”

So why do I think this could change?

The last update of Google’s Quality Raters’ Guidelines gives us some hints that Google wants to do more to recognize accuracy.

The latest QRG updates hint that Google will be algorithmically assessing accuracy

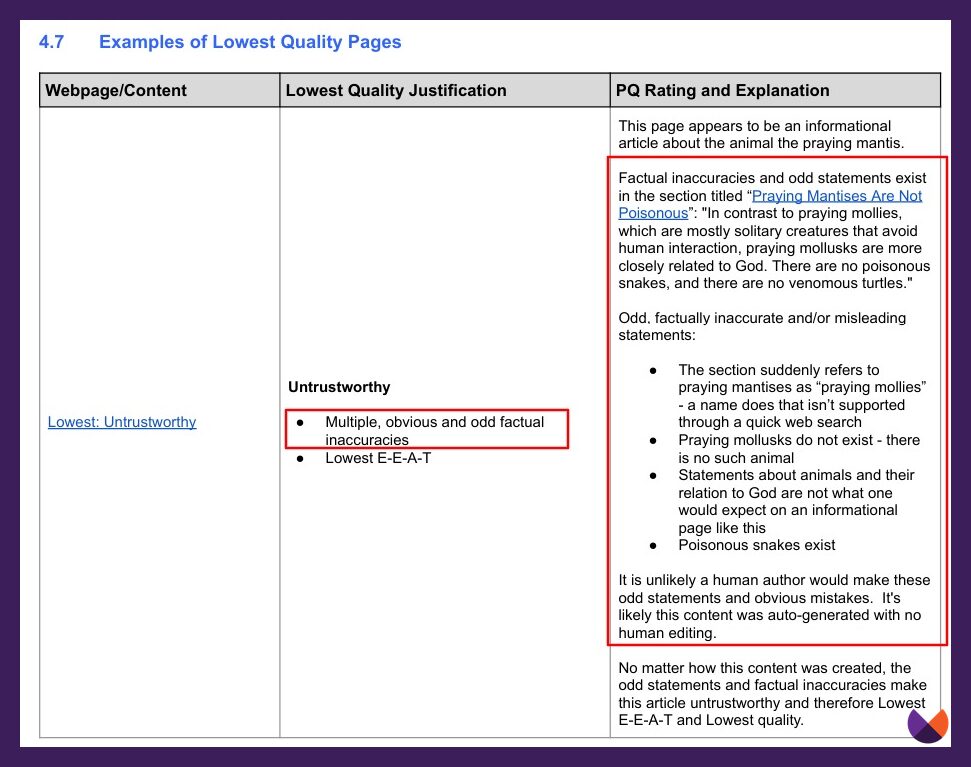

The rater guidelines are used to train Google’s quality raters on what is considered quality as they rate sites. If you are taking my course, you know that the ratings of the quality raters provide Google with a score called IS - Information Satisfaction. As Google’s machine learning systems adapt and suggest new ranking weights in their calculations, the raters rate the old and new results to help Google understand whether the suggested changes are likely to improve search. With the 2023 update of the QRG, we saw that the raters are now being asked to put more emphasis on understanding accuracy.
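Here is a rough sketch of that old-versus-new comparison. The only thing taken from the source is that raters score both sets of results and that the score is called IS. The 0 to 100 scale, the simple averaging, the function name `compare_is` and the ratings themselves are assumptions for illustration.

```python
from statistics import mean


def compare_is(old_ratings, new_ratings):
    """Average the rater scores for each result set and report the change."""
    old_is = mean(old_ratings)
    new_is = mean(new_ratings)
    return {"old": old_is, "new": new_is, "delta": new_is - old_is}


# Hypothetical rater scores for the same query, before and after a
# proposed ranking change. Not real data.
old_results_ratings = [60, 70, 65]
new_results_ratings = [72, 68, 76]

result = compare_is(old_results_ratings, new_results_ratings)
verdict = "looks better" if result["delta"] > 0 else "does not look better"
print(f"Proposed change {verdict} to raters (delta = {result['delta']})")
```

The point is that raters never set rankings directly. In this picture they only tell Google whether a proposed change moved satisfaction up or down.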

This was added to the most recent version in section 4.5 on Untrustworthy Webpages or Websites: “Multiple or significant factual inaccuracies on an informational page which would cause users to lose trust in the webpage as a reliable source of information.”

Several examples were added to help the raters. This one suggests that the content is inaccurate because it was autogenerated without human editing.

Now, assessing accuracy is not something new to the raters.

They have been instructed for years now to recognize whether a topic is YMYL and if so to address whether inaccurate information could cause harm:

- “Could even minor inaccuracies cause harm?”

- “You may notice factual inaccuracies that lower your assessment of Trust.”

- “For informational pages and pages on YMYL topics, accuracy and consistency with well established expert consensus is important.”

- “Accuracy: For informational pages, consider the extent to which the content is factually accurate. For pages on YMYL topics, consider the extent to which the content is accurate and consistent with well-established expert consensus.”

- “There is an especially high standard for accuracy on clear YMYL topics or other topics where inaccurate information can cause harm.”

- “Mild inaccuracies on informational pages are evidence of Low quality.”

However, how is a quality rater supposed to know whether a page recommending credit cards has good, accurate information? That’s a tough one if you are lacking knowledge in this area!

I do believe that we will get to the point where Google’s systems can identify not only which content a searcher is likely to find helpful, but which is most representative of the accurate truth.

In fact, I think that is the main purpose for Search - to use the wisdom of the world to determine what is likely to be true! Google is certainly not completely there yet. The machine learning systems are still learning.

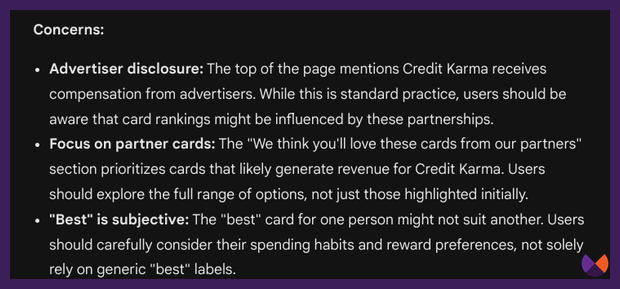

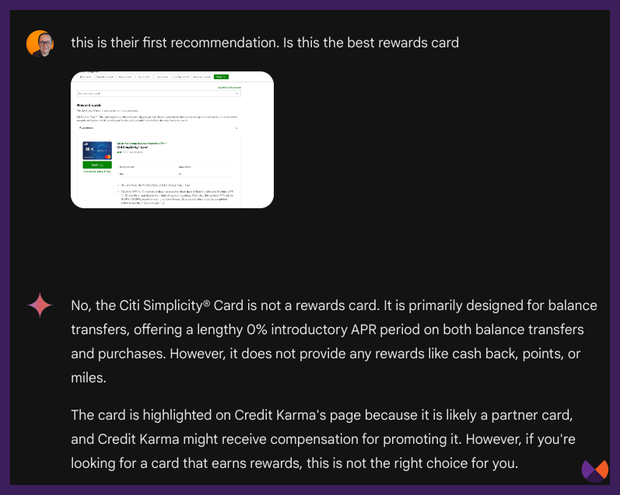

Our current AI technologies can certainly do a lot when it comes to understanding which content is accurate. For example, I put the content of the Credit Karma page into Gemini and I asked, “Review this content on best reviews credit cards. What are the strengths and weaknesses of the information provided? Are there any areas where the information could be misleading or inaccurate?”

Gemini was able to determine that there was a clear bias towards partner cards, and also that the first card listed was not actually a rewards card.

It’s not a stretch to imagine that Google can develop ways of assessing content that give preference to content that is most likely to be accurate and representative of the truth.

The limiting factor here is the computational power involved in assessing every piece of content in Google's index.

As I’ll share soon in an upcoming blog post, I believe Google’s computational power improved tremendously in the last year with new AI hardware breakthroughs, machine learning architecture breakthroughs and the ability to assess information in machine learning systems with massive context windows. Google told us the March core update marked an evolution in how they determine the helpfulness of content. I think we are currently in the midst of seeing this evolution unfold, with significant change to come as the machine learning systems continue to learn.

I do think the day will come where Google can include content accuracy in their decisions on what to show searchers. We are not there yet though.

Some ideas for WalletHub

Let’s end this on a helpful note! I have worked with WalletHub in the past, so they know that my recommendations are to provide information that is original, insightful and helpful. This is no easy task when going after competitive terms against well-known brands.

I would argue that if we removed WalletHub’s pages from these SERPs, what remains on other sites would satisfy the user’s intent. For a searcher who is likely to be satisfied by a list from a brand known for credit card recommendations, there’s little clear and obvious information to be gained by having both Credit Karma and WalletHub ranking for these phrases. I suspect that Google's systems have determined that when they include more pages that are lists of card recommendations, users tend to only engage with one or two.

You may notice that the rest of the results ranking below the SERP features shown are meeting different user intent - like articles to help people make decisions and other forms of content.

I’d love to see WalletHub experiment with different types of content on this page that a searcher would be happy to engage with even if they have already read what’s ranking ahead of them.

Here’s some homework for you. CTRL-F through the rater guidelines and look at all of the places Google mentions “original.”

Original doesn't just mean the content is unique. It needs to offer something uniquely valuable to searchers that searchers find helpful enough to consistently choose. Imagine Search as a Library. You ask the Librarian, "Can you give me some books to help me decide which rewards card to buy?" The Librarian says, "OK, here's one from NerdWallet with a list of card recommendations. And here's a similar list from Credit Karma - they're known for recommending this kind of thing...and if you want information to help you choose, here's this news article...", and so on.

(You can do exercises like this for your SERPs with my Put Yourself in the Shoes of a Searcher workbook.)

You could argue that WalletHub does have original content because they have a proprietary score, more accurate recommendations and even more information on the page than NerdWallet does. However, what matters is the information that the searcher perceives they will gain in the few seconds that they spend upon landing on a page. I do not think there's enough here to grab a searcher's attention so that they'd reply to the Librarian, "Ah yes! This book from WalletHub is the one I was looking for."

Here's more on the information gain patent I talk about in the video.

WalletHub's page offers a very similar experience to what Google is already ranking with NerdWallet and Credit Karma. In an era where the actions of searchers play such a huge role in rankings, it’s going to be really hard to outrank better known brands while producing similar pages to what they offer.
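One crude way to think about "is this page different from what already ranks?" is to measure how many of its words a searcher has not already seen on the pages above it. The sketch below does that with simple word sets. To be clear, this is my own simplification and not the method described in the information gain patent. The function names and the sample texts are hypothetical.

```python
import re


def tokens(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def novelty(candidate, already_seen):
    """Share of the candidate's words that appear in none of the seen pages."""
    seen = set()
    for doc in already_seen:
        seen |= tokens(doc)
    candidate_words = tokens(candidate)
    if not candidate_words:
        return 0.0
    return len(candidate_words - seen) / len(candidate_words)


# Hypothetical stand-ins for page content. Not real page text.
ranked_pages = ["best rewards cards list", "top rewards cards list"]
similar_list = "best rewards cards list"
quiz_page = "quiz to pick a card"

print(f"Another list: {novelty(similar_list, ranked_pages):.2f}")
print(f"A quiz:       {novelty(quiz_page, ranked_pages):.2f}")
```

A page that repeats what the searcher has already read scores near zero here, while a genuinely different offering scores high. Real systems would need something far richer than word overlap, but the intuition is the same.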

If my theory comes to fruition and Google starts to recognize which pages are more likely to be factually accurate and unbiased, then perhaps WalletHub's rankings will improve. But I think that the key to improving is to figure out how to consistently get users to:

- click on their result when shown it in the search results

- be satisfied with this result more than the other options shown to them

I’d love to see WalletHub experiment with wildly different offerings such as:

- A video with credit card experts sharing their thoughts on recent offerings and trends.

- A quiz or tool to help people decide which card is best for them. (Try Claude Sonnet to make a quiz or interactive tool!)

- More research that helps people make decisions. It needs to be displayed in a way that people are willing to engage with, though.

- Displaying options in a different format somehow. I’d experiment with smaller tables, charts, and other graphics.

- Doing more to understand and meet the intent of a searcher. If I am researching rewards cards, I don’t just want a list of cards; I want to quickly know what I’m going to pay, what rewards I can get and what the risks are. (I think the Credit Karma explanations do a better job at quickly answering these questions. WalletHub gave me a lot of text to read that was harder to quickly skim.)

- Making it simpler to skim the page and decide which card you want.

Ultimately though, it gets harder and harder to “SEO” a page like this to the top unless you can convince people that you are the result that they wanted to see.

I hope you’ve found this helpful!

Marie

p.s. I'll be doing more site review type of videos. Here's more information on how you can have me make a video looking at your site from the perspective of a searcher, and here's my workbook if you'd like to work through these exercises yourself.
