Google recently sent out a large number of notifications telling site owners that they are blocking their CSS and JavaScript.

Did you receive a notification from Google in the last few days telling you that you are blocking CSS and JavaScript? I've had a lot of people ask me about these messages, so I thought I'd try to explain them in layman's terms for those of you who may be confused and not sure what to do.

What are CSS and JavaScript?

CSS is the code that contains the stylesheets for your website. It dictates things such as what fonts should be used on the page and the size of different page elements. JavaScript files usually add interactive behavior to a website. For example, if you have a slider on your website, that's probably controlled by JavaScript. JavaScript can also be used to process form submissions and handle other tasks.

Why would I have CSS and JavaScript blocked and why should I now unblock it?

In the past, search engines did not read JavaScript. Anything that was in JavaScript would be completely ignored. The general consensus was that there was no point in having Google crawl through these files as it would just waste resources. However, Google is getting better and better at understanding JavaScript. Just over a year ago, Google released a blog post advising website owners that they should not be blocking Google from seeing these files.

Similarly, in the past CSS was rarely important for understanding the content on the page. But now, you can do some pretty amazing things with CSS. In some cases, if Google can't see the CSS on the page, it may mean that they are unable to see some important parts of the page's content.

I personally believe that Google's ability to interpret JavaScript is an integral part of how Google is learning to determine which sites are high-quality sites that users like. Ultimately, Google's goal is to show the most useful sites at the top of the search results. Now that they can see JavaScript, they can determine that, for example, users are often submitting a form on your site, engaging with your mortgage calculator, or taking some other type of action on the site that previously they couldn't see. I can't prove that user engagement like this is a ranking factor, but really, the more that Google can see that proves that people like to use your site, the better.

As such, it is important to not block Google from seeing anything that is an important part of the content of your site. Doing so could negatively impact your rankings.

How do I know if I am blocking CSS and JavaScript?

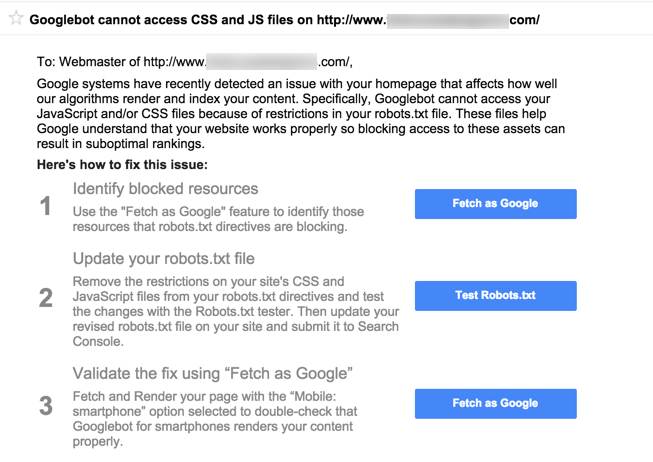

There are a couple of ways to tell whether you are blocking these files. First, to see if you have the message that Google sent out, go to Google Search Console (formerly Webmaster Tools) and click on Messages. Look for something that looks like this:


Here is the full text of the message:

Next, take a look at your robots.txt file. To do this, type the following into the url bar:

www.yourdomain.com/robots.txt

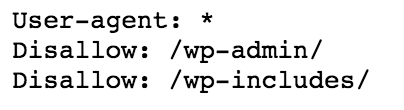

If you are running a site on WordPress, you may see something that looks like this:

That line that is disallowing /wp-includes/ is blocking Google from seeing your JavaScript as usually this is where JavaScript files are stored for a WordPress site.
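If you'd rather check this than eyeball it, here is a minimal sketch using Python's standard `urllib.robotparser` module. The robots.txt contents, the domain and the file paths are hypothetical placeholders, not taken from any real site, and the helper name `is_blocked` is made up for illustration. Python's parser does simple prefix matching and may not behave exactly like Googlebot in every edge case, so treat the result as a rough indication only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents, similar to what some WordPress sites have.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
"""


def is_blocked(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if robots_txt disallows user_agent from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


# Hypothetical URLs on a placeholder domain.
js_url = "http://www.yourdomain.com/wp-includes/js/jquery/jquery.js"
page_url = "http://www.yourdomain.com/about/"

print("JavaScript file blocked:", is_blocked(ROBOTS_TXT, js_url))
print("Regular page blocked:", is_blocked(ROBOTS_TXT, page_url))
```

With the placeholder rules above, the JavaScript file under /wp-includes/ is reported as blocked while the regular page is not.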

So far, all of the messages that I have seen this week have been for WordPress sites. If your site is *not* WordPress, and you are not sure what files you are blocking improperly, it's probably best to speak to your developer or to an experienced SEO to determine what the best course of action is.

How do I fix the problem?

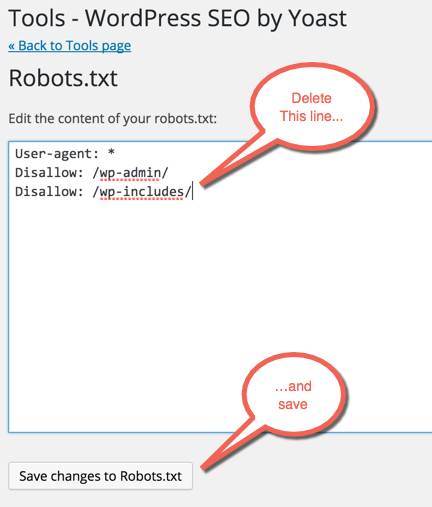

The solution is to remove the /wp-includes/ block from your robots.txt. If you are not comfortable with doing this, it is probably best to have someone with experience do this work for you as it is possible to do some serious damage to your search engine rankings if you do the wrong thing with your robots.txt file.

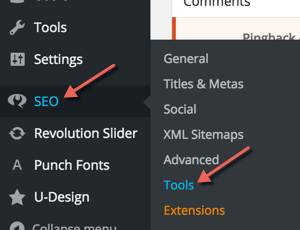

Still, for most sites on WordPress, the fix is pretty simple. If you have the Yoast SEO plugin installed, this will allow you to edit your robots.txt file. If you don't have it installed, you can safely install the plugin just for this purpose. Then, in your left sidebar, click on SEO and then Tools:

Now, select File Editor and robots.txt. Then you can remove the line that blocks wp-includes and save the file:
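For anyone editing the file by hand or with a script instead of through the plugin, the change amounts to dropping one line. The following is only a sketch under assumptions: the robots.txt text is a hypothetical example, and `remove_disallow` is an illustrative helper, not part of Yoast SEO or WordPress. It leaves every other rule, including the /wp-admin/ block, untouched.

```python
def remove_disallow(robots_txt: str, path: str = "/wp-includes/") -> str:
    """Return robots_txt without any Disallow line for exactly `path`."""
    kept = []
    for line in robots_txt.splitlines():
        # Ignore trailing comments and surrounding whitespace when comparing.
        rule = line.split("#", 1)[0].strip()
        field, _, value = rule.partition(":")
        if field.strip().lower() == "disallow" and value.strip() == path:
            continue  # drop the line that blocks `path`
        kept.append(line)
    return "\n".join(kept) + "\n"


# Hypothetical robots.txt before the fix.
before = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
"""

after = remove_disallow(before)
print(after)
```

Always keep a copy of the original file before saving changes, so you can restore it if something goes wrong.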

If for some reason you want to block search engines, but allow just Google to see your JavaScript, Jen Slegg from the SEMPost has some good information on how to do that.

How to tell if you've fixed the problem

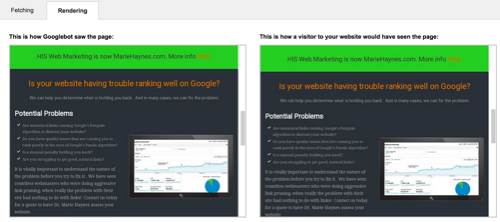

The next step is to go to Google Search Console and run a fetch and render as Googlebot. To do so, in the left column, select Crawl and then Fetch As Google. Leave the submit form blank to have Google check your homepage and click "Fetch and Render". It will take a minute or so to get results. You're probably going to see a green checkmark and "Partial":


Why partial? In most cases there are still going to be resources that Google is blocked from seeing. These will occur if you are using external resources. For example, you may see that your Adsense code is blocked. That's ok. I've seen a few people asking on Twitter if it's ok if Google fonts are blocked. This really shouldn't be an issue in my opinion. If, however, you use external resources that are vital to rendering content on your page, then these should not be blocked.
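To get a feel for which resources are yours to unblock and which belong to third parties, here is a rough offline sketch that lists the scripts and stylesheets a page references and separates them by host. It is not what Fetch and Render does internally. The sample HTML, the theme path and the domain are hypothetical, and `ResourceCollector` and `split_resources` are illustrative names.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class ResourceCollector(HTMLParser):
    """Collect URLs of scripts and stylesheets referenced by a page."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.resources = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "script" and attrs.get("src"):
            self.resources.append(urljoin(self.base_url, attrs["src"]))
        elif (
            tag == "link"
            and (attrs.get("rel") or "").lower() == "stylesheet"
            and attrs.get("href")
        ):
            self.resources.append(urljoin(self.base_url, attrs["href"]))


def split_resources(html: str, base_url: str):
    """Return (own, external) lists of resource URLs for the page."""
    collector = ResourceCollector(base_url)
    collector.feed(html)
    site_host = urlparse(base_url).netloc
    own = [u for u in collector.resources if urlparse(u).netloc == site_host]
    external = [u for u in collector.resources if urlparse(u).netloc != site_host]
    return own, external


# Hypothetical page source for a placeholder domain.
PAGE = """
<html><head>
<link rel="stylesheet" href="/wp-content/themes/example/style.css">
<link rel="stylesheet" href="https://fonts.googleapis.com/css?family=Lato">
<script src="/wp-includes/js/jquery/jquery.js"></script>
<script src="https://pagead2.googlesyndication.com/pagead/js/adsbygoogle.js"></script>
</head><body></body></html>
"""

own, external = split_resources(PAGE, "http://www.yourdomain.com/")
print("Your own resources:", own)
print("External resources:", external)
```

Resources in the first list are governed by your own robots.txt. Those in the second list are governed by the robots.txt of whoever hosts them, which is why you cannot unblock them yourself.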

Ultimately, the best test is to click on the part where it says "Partial" and compare the two pictures you get. The one on the left is how Googlebot sees the page and the one on the right is how users see the page:

Scroll down to see your entire page. Are the two pictures the same? Are there parts of the page that Googlebot is not seeing? If it's just ads that are not being seen by Googlebot, that's ok. But, if parts of your page such as images, forms, etc. are not being seen then there still may be a problem.

This morning someone asked in the Webmaster Help Forums why Googlebot was still showing issues on fetch and render. A Google employee had an interesting response, saying that it is perfectly ok for you to be blocking JavaScript if that JavaScript is not a critical element of the homepage:

What about security concerns?

Some people have their JavaScript blocked for security reasons. I am not a security expert, so it is difficult for me to comment fully on this. Gary Illyes from Google commented today saying that even though Google wants to crawl your CSS and JavaScript, they do not include these files in Google's index:

@Danny_Boardman we have an exhaustive list of file types we index. Javascript and CSS is not one of them 🙂 https://t.co/MU291c3J4A

— Gary Illyes (@methode) July 28, 2015

Also, it's still a good idea to keep the /wp-admin/ block in robots.txt. This will stop search engines from indexing your login page.

If you understand the potential security issues that come along with having Google crawl CSS and JavaScript files, please leave a comment below.

Have Questions?

Any time Google sends out a crazy number of messages, there tends to be a lot of confusion. While an SEO professional likely knows what these messages mean, I am guessing that most small business owners who got this message were a little confused as to what to do. Hopefully this has helped, but if you have further questions, please ask them in the comments section and I'll see if I can help.

Comments

Hi Marie,

Thanks for this guide, it's been a big help. I received the email from Google and upon checking it was a Twitter feed plug-in and Google Maps plug-in that were blocked, also Wordfence Security, which they have now updated to solve the problem.

My question is do we need to notify Google that we have made the changes, as I’m still getting the message in WMT saying “Googlebot cannot access CSS and JS files on http://www.lowisphotography.co.uk“.

The only disallow I have in the robots tester is the wp login.

Many thanks

Cris

Hi Cris,

Thanks for chiming in. In this case, I probably wouldn’t worry about those resources being blocked from Google. If you run fetch and render and everything looks good on the Google view of the page other than a blank spot for the Twitter feed and Google map, this is totally ok IMO.

The message will always remain in Webmaster Tools. It’s not like a manual action that goes away once you have fixed the problem and file for reconsideration. Unfortunately, I don’t believe that you can get any further clarification from Google on the issue other than running fetch as Googlebot.

It sounds like you’ve done all you need to do.

Good post with useful info for the most part. The only discrepancy is that wp-includes contains mostly WordPress core .js and that wouldn’t affect how Google views front-facing pages too much. The JavaScript that would mostly affect how Googlebot renders the page is located in the content directory in the “themes” and “plugins” folders. Most of the time with WordPress sites, the only folder that you should prevent Googlebot from seeing is wp-admin. Unless there is good reason not to, it’s usually best to give Google access to as much of public_html as possible. It might be beneficial to your users to emphasize that wp-includes is OK to block 99% of the time unless the site has non-WordPress customizations that drop .js files into the includes folder for whatever reason.

Files and directories can also be blocked from external access by the web server. Some CMSs, like WP and Drupal, restrict directory permissions for security reasons. Also, some modules/plugins place JS and CSS in module folders which are not externally accessible.